Comparative Analysis of Retrosynthesis Tools: From AI Foundations to Practical Drug Development

Abstract

This comprehensive analysis examines the evolving landscape of computer-aided retrosynthesis planning tools, comparing traditional rule-based systems with emerging AI and LLM-based approaches. Targeting researchers, scientists, and drug development professionals, the article explores foundational concepts, methodological innovations, optimization strategies, and validation frameworks. Through systematic comparison of tools like AOT*, RetroExplainer, and SYNTHIA™, we evaluate performance metrics, efficiency gains, and practical applications in reducing drug discovery timelines and costs while promoting greener chemistry principles.

Retrosynthesis Fundamentals: From Traditional Analysis to AI Revolution

Core Principles and Historical Foundations

Retrosynthesis, formally defined as the process of deconstructing a target organic molecule into progressively simpler precursors via imaginary bond disconnections or functional group transformations until commercially available starting materials are reached, is a cornerstone of organic synthesis and drug discovery [1] [2]. This systematic, backward-working strategy empowers chemists to plan viable synthetic routes for complex target molecules by navigating a vast and exponentially growing chemical space [1].

The intellectual foundation of retrosynthesis was profoundly shaped by the work of Nobel Laureate E.J. Corey. In 1967, his pioneering attempt to use computational tools for synthesis design marked the birth of computer-aided retrosynthesis [1]. Corey and his team developed early expert systems like LHASA, which relied on manually encoded reaction rules and logic-based synthesis trees where the target molecule formed the root node [1]. These early template-based endeavors established a framework that still serves as the backbone for many modern approaches, demonstrating a heavy reliance on expert knowledge and a reaction library whose size directly determined the searchable chemical space [1].

The past decade has witnessed a paradigm shift, driven by increased computing power, the establishment of large reaction databases (e.g., Reaxys, SciFinder, USPTO), and the rise of data-driven machine learning (ML) techniques [1]. These advancements have catalyzed the development of both enhanced template-based models and novel template-free methods, moving the field from purely knowledge-driven systems to models that can infer latent relationships from high-dimensional chemical data [3] [1].

Comparative Analysis of Modern Retrosynthesis Approaches

Contemporary retrosynthesis planning tools can be broadly categorized into three main methodologies: template-based, semi-template-based, and template-free. Each offers distinct mechanisms, advantages, and limitations, as detailed in the table below.

Table 1: Comparative Analysis of Modern Retrosynthesis Methodologies

| Methodology | Core Mechanism | Key Examples | Advantages | Limitations |
|---|---|---|---|---|
| Template-Based | Matches target molecules to a library of expert-defined or data-extracted reaction templates describing reaction rules [3] [1]. | GLN [3], RetroComposer [3] | High chemical interpretability; ensures chemically plausible reactions [3]. | Limited generalization; poor scalability; computationally expensive subgraph matching [3] [1]. |
| Semi-Template-Based | Predicts reactants through intermediates or synthons, often by first identifying reaction centers [3]. | SemiRetro [3], Graph2Edits [3] | Reduces template redundancy; improves interpretability [3]. | Handling of multicenter reactions remains challenging [3]. |
| Template-Free | Treats retrosynthesis as a translation task, directly generating reactant SMILES strings from product SMILES without explicit reaction rules [3] [1]. | seq2seq [3], SCROP [3], Chemformer [2] | No expert knowledge required at inference; strong generalization to novel reactions [3] [2]. | May generate invalid SMILES; can overlook structural information [3]. |

A key development in template-free approaches is the adoption of architectures from natural language processing (NLP), such as the Transformer model, which treats Simplified Molecular Input Line Entry System (SMILES) strings as a language to be translated [3]. This has enabled the emergence of large-scale models like RSGPT, a generative transformer pre-trained on 10 billion generated data points, showcasing how overcoming data scarcity can lead to substantial performance gains [3].
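Treating SMILES as a language begins with tokenization. The sketch below splits a SMILES string into atom-level tokens with a regular expression of the kind commonly used by SMILES transformer models. The exact pattern is an assumption here and is not necessarily the tokenizer used by RSGPT or the other cited models.

```python
import re
from typing import List

# Assumed atom-level pattern of the kind used by SMILES transformers:
# bracket atoms like [C@@H] or [NH4+] stay whole, Br and Cl are single tokens,
# and two-digit ring closures such as %12 are one token.
SMILES_TOKEN_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>|\*|\$|\%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a SMILES string into tokens; fail loudly instead of silently dropping characters."""
    tokens = SMILES_TOKEN_PATTERN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in SMILES: {smiles!r}")
    return tokens


# One retrosynthesis "translation" pair: product tokens -> reactant tokens.
product = "CC(=O)Oc1ccccc1C(=O)O"             # aspirin
reactants = "CC(=O)OC(C)=O.Oc1ccccc1C(=O)O"   # acetic anhydride + salicylic acid
print(tokenize_smiles(product))
print(tokenize_smiles(reactants))
```

The lossless check matters in practice. A pattern that silently skips unknown characters would feed the model a different molecule than the one in the dataset.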

Performance Benchmarking of State-of-the-Art Tools

Evaluating retrosynthesis tools involves metrics like Top-1 accuracy for single-step prediction and solvability for multi-step routes. However, a more nuanced evaluation that includes route feasibility, reflecting practical laboratory executability, is crucial [2].

Table 2: Performance Benchmarking of Retrosynthesis Planning Tools and Models

| Tool / Model | Type | Key Feature | Reported Performance |
|---|---|---|---|
| RSGPT [3] | Template-free Generative Transformer | Pre-trained on 10 billion synthetic data points; uses RLAIF | Top-1 Accuracy: 63.4% (USPTO-50K) |
| Neuro-symbolic Model [4] | Neurosymbolic Programming | Learns reusable, multi-step patterns (cascade/complementary reactions) | Success Rate: ~98.4% (Retro*-190 dataset); Reduces inference time for similar molecules |
| Retro* [2] | Planning Algorithm | A* search guided by a neural network for cost estimation | High performance in balancing route finding and feasibility |
| MEEA* [2] | Planning Algorithm | Combines MCTS exploration with A* optimality | Solvability: ~95% (on tested datasets) |
| LocalRetro [2] | Template-based SRPM (single-step retrosynthesis prediction model) | Selects suitable reaction templates from a predefined set | Chemically plausible predictions |
| ReactionT5 [2] | Template-free SRPM | State-of-the-art template-free model on USPTO-50K | High Top-1 accuracy |

Comparative studies reveal that the highest solvability does not always equate to the most feasible routes. For instance, while MEEA* with a default SRPM demonstrated superior solvability (~95%), Retro* with a default SRPM performed better when considering a combined metric of both solvability and feasibility [2]. This underscores the necessity of multi-faceted evaluation in retrosynthetic planning.

Experimental Protocols and Methodologies

Data Generation and Pre-training for Large Models

The RSGPT model highlights a strategy to overcome data bottlenecks. Its pre-training relied on a massive dataset generated using the RDChiral template extraction algorithm on the USPTO-FULL dataset [3]. A fragment library was created by breaking down millions of molecules from PubChem and ChEMBL using the BRICS method. Templates were then matched to these fragments to generate over 10 billion synthetic reaction datapoints, creating a broad chemical space for effective model pre-training [3].
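At its core, this generation step is a combinatorial enumeration. Every template is tried against combinations of library fragments, and each successful application yields one synthetic reaction. The sketch below shows that loop under stated assumptions:

- `apply_template` is a hypothetical stand-in for a real cheminformatics call, such as running a reaction template with RDKit or RDChiral.
- The toy template, fragments and lookup table are invented for illustration.
- None of this is RSGPT's actual code or data.

```python
from itertools import permutations
from typing import Callable, Dict, List, Optional, Sequence, Set, Tuple

# Hypothetical engine call: (template id, ordered fragments) -> product SMILES, or None if no match.
ApplyFn = Callable[[str, Tuple[str, ...]], Optional[str]]


def generate_reactions(
    templates: Dict[str, int],  # template id -> number of reactant slots
    fragments: Sequence[str],
    apply_template: ApplyFn,
    limit: Optional[int] = None,
) -> List[str]:
    """Try every template on every ordered fragment tuple; keep unique 'reactants>>product' strings."""
    seen: Set[str] = set()
    reactions: List[str] = []
    for template_id, n_slots in templates.items():
        for combo in permutations(fragments, n_slots):
            product = apply_template(template_id, combo)
            if product is None:  # template does not match these fragments
                continue
            reaction = ".".join(sorted(combo)) + ">>" + product
            if reaction in seen:  # different orderings can give the same reaction
                continue
            seen.add(reaction)
            reactions.append(reaction)
            if limit is not None and len(reactions) >= limit:
                return reactions
    return reactions


# Toy stand-in for a real template engine: a lookup table of known outcomes.
TOY_OUTCOMES = {
    ("amide_coupling", frozenset({"CC(=O)O", "NCc1ccccc1"})): "CC(=O)NCc1ccccc1",
}


def toy_apply(template_id: str, combo: Tuple[str, ...]) -> Optional[str]:
    return TOY_OUTCOMES.get((template_id, frozenset(combo)))


library = ["CC(=O)O", "NCc1ccccc1", "OCC"]  # acetic acid, benzylamine, ethanol
print(generate_reactions({"amide_coupling": 2}, library, toy_apply))
# -> ['CC(=O)O.NCc1ccccc1>>CC(=O)NCc1ccccc1']
```

Using `permutations` means a fragment is never paired with itself; `itertools.product` would allow that. The `limit` argument reflects the fact that at the scale described (billions of reactions), the generator would in practice be streamed and sharded rather than held in memory.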

Another innovative approach involves a neurosymbolic workflow inspired by human learning, structured into three iterative phases [4]:

- Wake Phase: The system attempts to solve retrosynthetic planning tasks, constructing an AND-OR search graph guided by neural networks that decide where and how to expand the graph. Successful routes and failures are recorded [4].

- Abstraction Phase: The system analyzes the search graph from the wake phase to extract reusable, multi-step strategies. It identifies "cascade chains" (sequences of consecutive transformations) and "complementary chains" (interdependent reactions), defining them as new abstract reaction templates for the library [4].

- Dreaming Phase: To refine the neural network models without costly real-world experiments, the system generates "fantasies", that is, simulated retrosynthesis experiences. The models are then trained on these fantasies and on replayed experiences so that they learn to apply the expanded template library better in subsequent wake phases [4].
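The abstraction phase can be pictured as mining solved routes for recurring patterns. The sketch below covers only the "cascade chain" case, in a deliberately simplified form:

1. Each successful route is reduced to the ordered list of template identifiers along one linear branch.
2. Consecutive template pairs are counted, once per route.
3. Any pair seen in at least `min_support` routes is promoted to a new two-step abstract template.

The route encoding, the `min_support` threshold, the placeholder template names and the naming scheme are all assumptions for illustration. This is not the cited system's actual algorithm.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def mine_cascade_chains(
    routes: Sequence[Sequence[str]], min_support: int = 2
) -> Dict[str, Tuple[str, str]]:
    """Promote consecutive template pairs that recur across routes to abstract templates."""
    support: Counter = Counter()
    for route in routes:
        # Count each adjacent pair at most once per route (support = number of routes).
        pairs = {(route[i], route[i + 1]) for i in range(len(route) - 1)}
        support.update(pairs)
    abstract: Dict[str, Tuple[str, str]] = {}
    for (first, second), count in sorted(support.items()):
        if count >= min_support:
            abstract[f"{first}+{second}"] = (first, second)
    return abstract


# Hypothetical template-id sequences from three solved routes.
solved_routes = [["T1", "T2", "T3"], ["T1", "T2"], ["T3", "T1"]]
print(mine_cascade_chains(solved_routes))  # -> {'T1+T2': ('T1', 'T2')}
```

In a full system, each promoted chain would be added to the template library, so later wake phases can apply both steps in one expansion.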

Evaluation Metrics and Feasibility Assessment

Robust evaluation extends beyond simple solvability. The Route Feasibility metric is calculated by averaging the feasibility scores of each single step within a proposed route [2]. This score is derived from metrics like the Feasibility Thresholded Count (FTC), which assesses the practical likelihood of a reaction step. This provides a more comprehensive measure of a route's real-world viability than solvability or length alone [2].
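As described, the route-level score is the arithmetic mean of the step-level scores. The sketch below makes the following assumptions:

- Each step already carries a feasibility score in [0, 1] from some single-step scorer.
- How FTC itself is computed is not reproduced here.
- The route names and scores are invented.

It shows how averaging can rank a longer route above a shorter one that contains a doubtful step.

```python
from statistics import mean
from typing import Dict, List, Sequence


def route_feasibility(step_scores: Sequence[float]) -> float:
    """Average the per-step feasibility scores of one route (assumed to lie in [0, 1])."""
    if not step_scores:
        raise ValueError("a route needs at least one step")
    if any(not 0.0 <= s <= 1.0 for s in step_scores):
        raise ValueError("step scores are expected in [0, 1]")
    return mean(step_scores)


def rank_routes(routes: Dict[str, Sequence[float]]) -> List[str]:
    """Order route names from most to least feasible."""
    return sorted(routes, key=lambda name: route_feasibility(routes[name]), reverse=True)


candidates = {
    "short_route": [0.9, 0.2],        # 2 steps, one doubtful step
    "long_route": [0.8, 0.85, 0.9],   # 3 steps, all plausible
}
print(rank_routes(candidates))  # -> ['long_route', 'short_route']
```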

The development and application of modern retrosynthesis tools depend on several key digital reagents and databases.

Table 3: Key Research Reagents and Computational Resources

| Resource Name | Type | Function in Retrosynthesis Research |
|---|---|---|
| USPTO Dataset [3] [1] | Reaction Database | A foundational dataset (e.g., USPTO-50K, USPTO-FULL) for training and benchmarking retrosynthesis models. |
| Reaxys [1] | Reaction Database | A comprehensive commercial database of chemical reactions and substances used for knowledge extraction. |
| SciFinder [1] | Reaction Database | A scholarly research resource providing access to chemical literature and reaction data. |
| SMILES Strings [3] [1] | Molecular Representation | A line notation method for representing molecular structures, enabling template-free, NLP-based models. |
| RDChiral [3] | Software Tool | A rule-based tool for precise stereochemical handling in retrosynthetic template extraction. |
| BRICS Method [3] | Algorithm | Used for fragmenting molecules into building-block fragments for generating synthetic reaction data. |

Visualizing Workflows and Relationships

The Neurosymbolic Programming Cycle

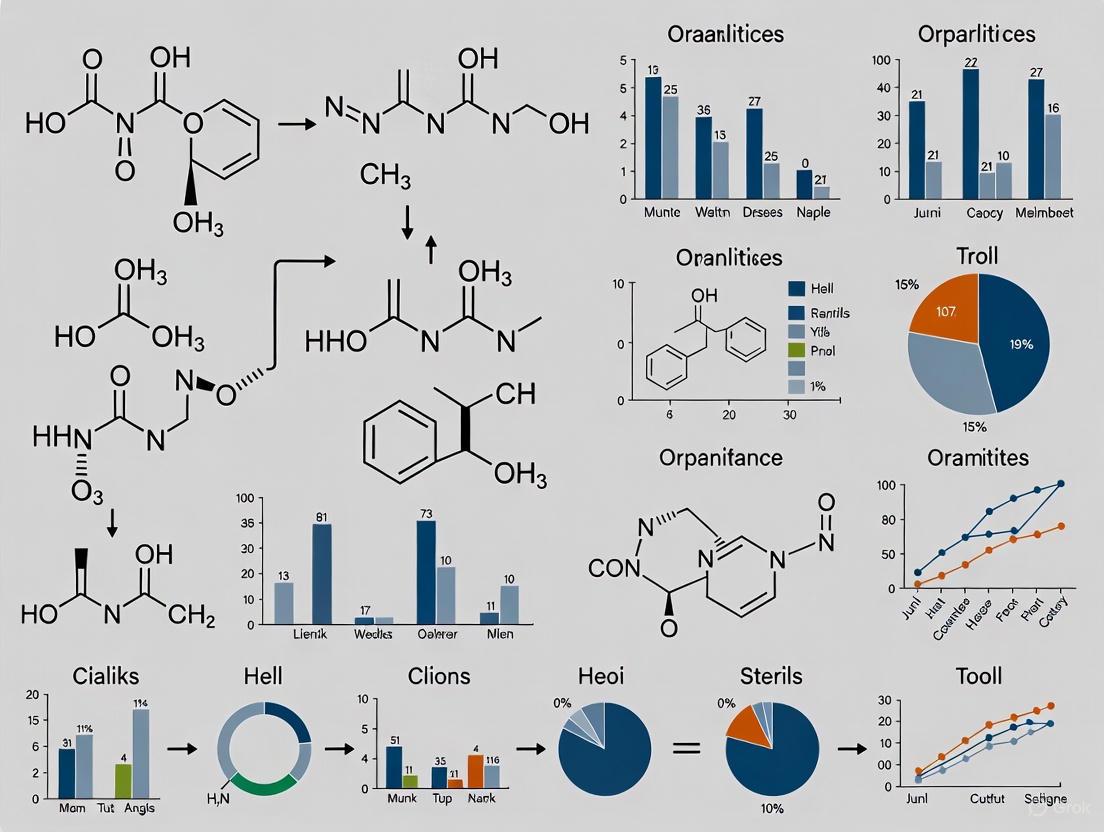

This diagram illustrates the three-phase iterative cycle of neurosymbolic programming used in advanced retrosynthesis systems [4].

Multi-Step Retrosynthesis Planning Framework

This flowchart outlines the generic decision-making process for a multi-step retrosynthesis planning algorithm [2].

A significant challenge in modern drug discovery is the critical gap between computationally designed molecules and their practical synthesizability. While deep generative models can efficiently propose molecules with ideal pharmacological properties, these candidates often prove challenging or infeasible to synthesize in the wet lab [5]. This synthesizability problem creates a major bottleneck, wasting valuable time and resources on molecules that cannot be practically produced. Retrosynthesis planning tools, which recursively decompose target molecules into simpler, commercially available precursors, have emerged as essential solutions for validating synthesizability and planning efficient routes before experimental work begins [4] [5]. This guide provides a comparative analysis of leading retrosynthesis planning tools, evaluating their performance, methodologies, and applicability to streamline drug development workflows.

Performance Comparison of Retrosynthesis Tools

Single-Step Retrosynthesis Accuracy

Single-step retrosynthesis prediction, which identifies immediate precursor reactants for a target molecule, forms the foundational building block of multi-step planning. Performance is typically measured by top-k exact-match accuracy, indicating whether the true reactants appear within the top k predictions [6].
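"Exact match" comes down to comparing reactant sets as strings, so a small sketch helps show what is actually counted. It assumes that predictions and ground truths are already canonical SMILES, which in practice would be produced with a toolkit such as RDKit. The helper only makes the comparison insensitive to the order of `.`-separated reactants. The third example illustrates why canonicalization matters: `CCN` and `NCC` are the same molecule but are counted as a miss.

```python
from typing import Sequence


def normalize_reactants(smiles: str) -> str:
    """Order-insensitive key for a multi-component SMILES such as 'A.B'.

    Assumption: each component is already canonical SMILES; this helper only
    removes dependence on the order of the '.'-separated parts.
    """
    return ".".join(sorted(smiles.split(".")))


def top_k_accuracy(predictions: Sequence[Sequence[str]], targets: Sequence[str], k: int) -> float:
    """Percentage of products whose true reactant set appears among the top-k ranked predictions."""
    if len(predictions) != len(targets):
        raise ValueError("one ranked prediction list per target is required")
    hits = 0
    for ranked, truth in zip(predictions, targets):
        truth_key = normalize_reactants(truth)
        if any(normalize_reactants(p) == truth_key for p in ranked[:k]):
            hits += 1
    return 100.0 * hits / len(targets)


ranked_predictions = [
    ["CCO.CC(=O)O", "CCOC(C)=O"],                # correct at rank 1 (order differs)
    ["c1ccccc1", "Brc1ccccc1.OB(O)c1ccccc1"],    # correct at rank 2
    ["CCN", "CCO"],                              # same molecule, non-canonical string
]
ground_truth = ["CC(=O)O.CCO", "OB(O)c1ccccc1.Brc1ccccc1", "NCC"]
for k in (1, 2):
    print(k, top_k_accuracy(ranked_predictions, ground_truth, k))
```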

Table 1: Top-k Accuracy (%) on USPTO-50K Benchmark Dataset

| Model | Type | Top-1 | Top-3 | Top-5 | Top-10 |
|---|---|---|---|---|---|
| RSGPT [7] | Template-free | 63.4 | - | - | - |
| RetroExplainer [6] | Molecular Assembly | 58.9 | 73.8 | 78.7 | 83.5 |
| LocalRetro [6] | Graph-based | - | - | - | 84.5 |
| R-SMILES [6] | Sequence-based | - | - | - | - |

RSGPT reports 63.4% Top-1 accuracy on the USPTO-50K dataset, substantially outperforming previous models, which typically plateaued around 55% [7]. This performance leap is attributed to its generative pre-training on 10 billion synthetic reaction datapoints, which overcomes the data scarcity that constrained earlier models [7]. RetroExplainer performs robustly across multiple top-k metrics, with a particularly strong Top-5 accuracy of 78.7%, indicating consistent coverage of plausible reactants [6].

Multi-Step Planning Efficiency and Success Rates

Multi-step planning evaluates a tool's ability to recursively decompose complex targets into purchasable building blocks, with success rates measured under constrained search iterations or time.
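Success under a search budget can be illustrated with a small best-first planner. The sketch below is a simplified, state-space stand-in for guided search algorithms such as Retro*; it is not their implementation:

- A search state is the set of molecules still to be made.
- Each expansion applies one candidate disconnection.
- A pluggable `heuristic` plays the role that a learned cost estimator plays in Retro*.
- A hypothetical `expansions` table replaces the single-step model, and all molecule names are placeholders.

The iteration budget makes "success rate under constrained iterations" concrete.

```python
import heapq
import itertools
from typing import Callable, Dict, FrozenSet, List, Optional, Sequence, Set, Tuple

# Hypothetical single-step model output: molecule -> [(reaction cost, precursor tuple), ...]
Expansions = Dict[str, List[Tuple[float, Tuple[str, ...]]]]
Step = Tuple[str, Tuple[str, ...]]


def plan_route(
    target: str,
    expansions: Expansions,
    stock: Set[str],
    max_iterations: int = 100,
    heuristic: Callable[[str], float] = lambda mol: 0.0,
) -> Optional[Tuple[float, List[Step]]]:
    """Best-first search until every open molecule is purchasable or the budget runs out."""
    tie = itertools.count()  # tie-breaker so heapq never compares frozensets
    start: FrozenSet[str] = frozenset({target}) - stock
    frontier = [(sum(map(heuristic, start)), next(tie), 0.0, start, ())]
    best_cost = {start: 0.0}
    for _ in range(max_iterations):
        if not frontier:
            return None  # search space exhausted without a route
        _, _, cost, open_mols, steps = heapq.heappop(frontier)
        if not open_mols:
            return cost, list(steps)  # everything traced back to stock
        if cost > best_cost.get(open_mols, float("inf")):
            continue  # stale queue entry
        mol = min(open_mols)  # every open molecule must be solved, so branch on one
        for step_cost, precursors in expansions.get(mol, []):
            new_open = (open_mols - {mol}) | (frozenset(precursors) - stock)
            new_cost = cost + step_cost
            if new_cost < best_cost.get(new_open, float("inf")):
                best_cost[new_open] = new_cost
                priority = new_cost + sum(map(heuristic, new_open))
                heapq.heappush(
                    frontier,
                    (priority, next(tie), new_cost, new_open, steps + ((mol, precursors),)),
                )
    return None  # iteration budget exhausted


def success_rate(targets: Sequence[str], expansions: Expansions, stock: Set[str], max_iterations: int) -> float:
    solved = sum(plan_route(t, expansions, stock, max_iterations) is not None for t in targets)
    return 100.0 * solved / len(targets)


stock = {"A", "B", "C"}
expansions: Expansions = {
    "T": [(1.0, ("I", "C")), (5.0, ("A", "B", "C"))],
    "I": [(1.0, ("A", "B"))],
    "U": [(1.0, ("X",))],  # X is neither purchasable nor expandable
}
print(plan_route("T", expansions, stock))  # -> (2.0, [('T', ('I', 'C')), ('I', ('A', 'B'))])
print(success_rate(["T", "U"], expansions, stock, max_iterations=10))  # -> 50.0
```

With `max_iterations=2` the same target is reported as unsolved. This is why success rates are only comparable across tools evaluated under the same budget.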

Table 2: Multi-Step Planning Performance on Retro*-190 Dataset

| Model | Approach | Success Rate (%) | Planning Cycles to First Route |
|---|---|---|---|
| NeuroSymbolic Group Planning [4] | Neurosymbolic Programming | 98.4 | Fastest |
| EG-MCTS [4] | Monte Carlo Tree Search | ~95.4 | Slower |
| PDVN [4] | Value Network | ~95.5 | Slower |

The neurosymbolic group planning model demonstrates superior efficiency, achieving the highest success rate (98.4%) while finding routes in the fewest planning cycles [4]. Its key innovation lies in abstracting and reusing common multi-step patterns (cascade and complementary reactions) across similar molecules, progressively decreasing marginal inference time as the system processes more targets [4].

Experimental Protocols and Evaluation Methodologies

Benchmarking Frameworks and Dataset Considerations

Robust evaluation requires standardized benchmarks and appropriate dataset splitting to prevent scaffold bias and information leakage:

- USPTO Datasets: The United States Patent and Trademark Office datasets (USPTO-50K, USPTO-FULL, USPTO-MIT) provide curated reaction data from patent literature, with USPTO-50K containing approximately 50,000 reactions and USPTO-FULL containing nearly 2 million entries [6] [7].

- Retro*-190 Dataset: A collection of 190 challenging molecules for evaluating multi-step planning algorithms [4] [8].

- Tanimoto Similarity Splitting: To prevent artificially inflated performance from structurally similar molecules appearing in both training and test sets, rigorous evaluations employ similarity-based splitting (e.g., 0.4, 0.5, 0.6 Tanimoto similarity thresholds) rather than random splitting [6].
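A similarity-based split needs two ingredients: a fingerprint similarity, and a rule for which molecules may enter the test set. The sketch below assumes fingerprints are already available as sets of on-bit indices; in practice these would typically come from a toolkit such as RDKit. It implements one simple variant: a candidate is admitted to the test set only if its Tanimoto similarity to every training molecule stays below the threshold. The cited evaluations may cluster or split differently, and the toy fingerprints are invented.

```python
from typing import Dict, FrozenSet, List, Tuple

Fingerprint = FrozenSet[int]  # indices of "on" bits, e.g. from a circular fingerprint


def tanimoto(a: Fingerprint, b: Fingerprint) -> float:
    """Tanimoto (Jaccard) similarity of two bit-set fingerprints."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0  # two empty fingerprints count as identical


def similarity_filtered_split(
    train: Dict[str, Fingerprint], candidates: Dict[str, Fingerprint], threshold: float
) -> Tuple[List[str], List[str]]:
    """Keep a candidate in the test set only if it is dissimilar to every training molecule."""
    test: List[str] = []
    dropped: List[str] = []
    for name, fp in candidates.items():
        max_sim = max((tanimoto(fp, t) for t in train.values()), default=0.0)
        (test if max_sim < threshold else dropped).append(name)
    return test, dropped


train_fps = {"m1": frozenset({1, 2, 3, 4})}
candidate_fps = {"c1": frozenset({1, 2, 3, 5}), "c2": frozenset({7, 8, 9})}
print(similarity_filtered_split(train_fps, candidate_fps, threshold=0.4))  # -> (['c2'], ['c1'])
```

Lowering the threshold (for example from 0.6 to 0.4) removes more near-duplicates and so makes the benchmark harder.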

The syntheseus Python package addresses inconsistent evaluation practices by providing a standardized framework for benchmarking both single-step and multi-step retrosynthesis algorithms [8].

Beyond Traditional Metrics: Addressing Hallucinations and Practical Feasibility

Traditional metrics like top-k accuracy and success rate have limitations—they don't assess whether predicted reactions are actually feasible in the laboratory. Recent approaches address this critical gap:

- Round-Trip Score: Proposed by Liu et al., this metric uses forward reaction prediction to simulate whether starting materials can successfully reproduce the target molecule through the proposed route, with similarity between original and reproduced molecules quantifying route feasibility [5].

- RetroTrim: This system combines diverse reaction scoring strategies to eliminate hallucinated reactions, that is, nonsensical or erroneous predictions that plague many retrosynthesis models [9]. In expert validation on novel drug-like targets, it was the only method evaluated that successfully filtered out hallucinations while also retaining the highest number of high-quality paths [9].

- Expert Validation: The most rigorous assessment involves synthetic chemists evaluating proposed routes. On this measure, RetroExplainer demonstrated that 86.9% of its predicted single-step reactions corresponded to literature-reported reactions [6].
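The round-trip idea can be sketched as replaying a route forward from its starting materials. The sketch makes several assumptions:

- `forward_model` and `similarity` are hypothetical placeholders. The cited work uses a trained forward reaction predictor and a molecular similarity measure whose details are not reproduced here.
- Routes are given as forward-ordered `(reactants, intended product)` steps.
- Molecule names and the lookup table are invented.

When the model's outcome for an intermediate differs from the intended one, the actual outcome is carried into later steps.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

# One forward step of a proposed route: (reactants, intended product).
RouteStep = Tuple[Tuple[str, ...], str]
ForwardModel = Callable[[Tuple[str, ...]], Optional[str]]


def round_trip_score(
    route: Sequence[RouteStep],
    target: str,
    forward_model: ForwardModel,
    similarity: Callable[[str, str], float],
) -> float:
    """Replay a route forward from its starting materials and compare the result with the target."""
    produced: Dict[str, str] = {}  # intended intermediate -> what the forward model actually made
    for reactants, intended in route:  # steps ordered from starting materials to target
        actual_inputs = tuple(produced.get(r, r) for r in reactants)
        outcome = forward_model(actual_inputs)
        if outcome is None:
            return 0.0  # the forward model cannot execute this step
        produced[intended] = outcome
    final = produced.get(target)
    return 0.0 if final is None else similarity(target, final)


# Toy forward "model": a lookup table keyed on sorted reactant tuples.
FORWARD = {("A", "B"): "I", ("C", "I"): "T", ("A", "D"): "I2"}


def toy_forward(reactants: Tuple[str, ...]) -> Optional[str]:
    return FORWARD.get(tuple(sorted(reactants)))


def exact_match(a: str, b: str) -> float:
    return 1.0 if a == b else 0.0


good_route = [(("A", "B"), "I"), (("I", "C"), "T")]
bad_route = [(("A", "D"), "I"), (("I", "C"), "T")]  # first step actually yields I2, not I
print(round_trip_score(good_route, "T", toy_forward, exact_match))  # -> 1.0
print(round_trip_score(bad_route, "T", toy_forward, exact_match))   # -> 0.0
```

A graded similarity (for example, Tanimoto on fingerprints) in place of `exact_match` would reward routes that reproduce a close analogue of the target.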

Essential Workflows in Retrosynthesis Planning

Molecular Representation and Disconnection Planning

The initial phase of retrosynthesis involves representing molecular structures and identifying plausible disconnection sites, with different algorithmic approaches each having distinct advantages.

Neurosymbolic Programming for Group Retrosynthesis

Inspired by human learning, neurosymbolic programming alternates between expanding a library of synthetic strategies and refining neural models to guide the search process more effectively.

Table 3: Key Resources for Retrosynthesis Research and Implementation

| Resource | Type | Function & Application | Example Sources/References |
|---|---|---|---|
| USPTO Reaction Datasets | Chemical Data | Curated reaction data from patents for model training and validation | USPTO-50K, USPTO-FULL, USPTO-MIT [6] [7] |
| Purchasable Compound Databases | Chemical Data | Define feasible starting materials for synthetic routes | ZINC Database [5] |
| RDChiral | Algorithm | Template extraction and reaction validation | Template extraction for RSGPT's synthetic training data [7] |
| Syntheseus | Software Library | Standardized benchmarking of retrosynthesis algorithms | Python package for consistent evaluation [8] |
| AiZynthFinder | Software Tool | Multi-step retrosynthesis planning implementation | Popular open-source tool for route finding [5] |
| Template Libraries | Chemical Knowledge | Encoded reaction rules for template-based approaches | Expert-curated or data-mined reaction templates [4] |

The comparative analysis reveals distinct strengths across the retrosynthesis tool landscape, enabling informed selection based on specific drug discovery needs. RSGPT excels in raw single-step prediction accuracy, making it valuable for identifying plausible disconnections for novel targets. RetroExplainer offers strong interpretability through its molecular assembly process, providing the transparent decision-making that is critical for experimental validation. NeuroSymbolic Group Planning shows the highest reported efficiency for projects involving structurally similar compound series, progressively accelerating as it processes more targets. For prioritizing practical synthesizability over purely computational metrics, approaches that employ round-trip validation or diverse ensemble scoring (RetroTrim) offer better protection against hallucinated reactions. The optimal tool choice ultimately depends on the application context, for example early-stage generative design with diverse outputs versus lead optimization with a congeneric series. The field is increasingly moving toward integrated solutions that combine accuracy, interpretability, and practical feasibility to address the time and cost challenges of drug discovery.

The field of computer-aided synthesis planning (CASP) has undergone a profound transformation, evolving from early expert-driven rule-based systems to sophisticated data-driven machine learning (ML) models. Retrosynthesis planning—the process of recursively decomposing target molecules into simpler, commercially available precursors—represents a core challenge in organic chemistry and drug development [4] [10]. This evolution mirrors broader trends in artificial intelligence, shifting from symbolic systems encoding explicit human knowledge to subsymbolic models learning implicit patterns directly from data [11] [10]. This guide provides a comparative analysis of retrosynthesis planning tools, examining the performance, experimental methodologies, and practical applications of rule-based, ML-based, and hybrid approaches to inform researchers and development professionals in the pharmaceutical and chemical sciences.

Historical Progression: From Expert Systems to Data-Driven Learning

The development of computational retrosynthesis tools began with rule-based expert systems, which are examples of symbolic artificial intelligence. These systems operate on a set of predefined conditional statements (IF-THEN rules) manually curated by human experts [12] [13]. A typical rule-based system comprises several key components: a knowledge base storing rules and facts, an inference engine that applies rules to data, working memory holding current facts, and a user interface [12]. Famous early expert systems such as MYCIN, which was built for medical diagnosis rather than chemistry, demonstrated the potential of this approach, although MYCIN itself was never adopted in routine practice because of ethical and practical concerns [12]. These systems are highly transparent and interpretable because their decision-making logic is explicit, but they suffer from significant limitations in scalability and adaptability [12] [13]. Building and maintaining comprehensive rule sets for complex domains like organic chemistry is labor-intensive, and these systems cannot learn from new data or improve with experience [13].
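The components listed above map onto a very small forward-chaining engine:

- Rules form the knowledge base.
- A set of facts serves as working memory.
- A loop that fires rules until nothing changes acts as the inference engine.

The sketch below shows that structure. The rules and facts are a hypothetical toy knowledge base, not taken from LHASA or any other cited system.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Rule:
    """One IF-THEN rule in the knowledge base."""
    name: str
    conditions: FrozenSet[str]  # IF all of these facts hold ...
    conclusion: str             # ... THEN assert this fact


def forward_chain(rules: Iterable[Rule], facts: Iterable[str]) -> Tuple[Set[str], List[str]]:
    """Inference engine: fire rules until working memory stops changing."""
    rule_list = list(rules)
    working_memory: Set[str] = set(facts)
    fired: List[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rule_list:
            if rule.conditions <= working_memory and rule.conclusion not in working_memory:
                working_memory.add(rule.conclusion)
                fired.append(rule.name)
                changed = True
    return working_memory, fired


# Hypothetical toy knowledge base for a single target molecule.
KNOWLEDGE_BASE = [
    Rule("detect_ester", frozenset({"C(=O)-O-C linkage"}), "ester present"),
    Rule("ester_disconnection", frozenset({"ester present"}), "suggest: carboxylic acid + alcohol"),
    Rule("protect_amine", frozenset({"ester present", "free amine"}), "suggest: protect amine first"),
]

memory, fired = forward_chain(KNOWLEDGE_BASE, {"C(=O)-O-C linkage"})
print(fired)  # -> ['detect_ester', 'ester_disconnection']
```

The trace in `fired` is what makes such systems transparent: every conclusion can be traced to a named rule. The same structure also shows the scaling problem, since every new chemical situation needs another hand-written rule.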

The paradigm shifted with the rise of machine learning approaches, fueled by increased computational resources and the availability of large-scale chemical reaction datasets such as those from the United States Patent and Trademark Office (USPTO) [3] [10]. Unlike rule-based systems, ML models learn reaction patterns and transformation rules directly from historical reaction data, reducing reliance on manual rule encoding and enabling the discovery of novel reaction pathways [10]. This transition has led to the development of three primary ML-based retrosynthesis approaches:

- Template-Based Models: These models identify appropriate reaction templates—rules describing reaction cores based on fundamental chemical transformations—and apply them to target molecules [3] [14]. While offering good interpretability, they are constrained by the coverage of their template libraries [3].

- Template-Free Models: These approaches, often using sequence-to-sequence architectures, treat retrosynthesis as a machine translation problem, directly generating reactant SMILES strings from product SMILES without explicit reaction rules [3] [14]. They bypass template limitations but can struggle with invalid outputs and conserving atom mappings [14].

- Semi-Template-Based Models: This hybrid category predicts reactants through intermediates or synthons, first identifying reaction centers and then completing the molecular structures [3] [14]. Frameworks like State2Edits formulate the task as a graph editing problem, sequentially applying transformations to convert product graphs into reactants [14].
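The first half of the semi-template recipe, going from a predicted reaction center to synthons, can be shown on a hand-written molecular graph. The sketch makes these simplifications:

- It uses heavy atoms only, with bond orders and hydrogens omitted.
- The reaction center is given rather than predicted.
- It neither reproduces State2Edits' edit vocabulary nor performs synthon completion (the step that adds leaving groups).

It deletes the reaction-center bonds and returns the connected components as synthons.

```python
from collections import deque
from typing import Dict, FrozenSet, Iterable, List, Set, Tuple

Bond = FrozenSet[int]


def break_bonds(
    atoms: Dict[int, str], bonds: Set[Bond], reaction_center: Iterable[Tuple[int, int]]
) -> List[List[int]]:
    """Delete the reaction-center bonds and return the resulting synthons as sorted atom-index lists."""
    remaining = set(bonds) - {frozenset(pair) for pair in reaction_center}
    neighbors: Dict[int, List[int]] = {a: [] for a in atoms}
    for bond in remaining:
        i, j = tuple(bond)
        neighbors[i].append(j)
        neighbors[j].append(i)
    seen: Set[int] = set()
    synthons: List[List[int]] = []
    for start in sorted(atoms):
        if start in seen:
            continue
        component: List[int] = []
        queue = deque([start])
        seen.add(start)
        while queue:  # breadth-first search over the edited graph
            atom = queue.popleft()
            component.append(atom)
            for nb in neighbors[atom]:
                if nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        synthons.append(sorted(component))
    return synthons


# Ethyl acetate heavy atoms: C0-C1(=O2)-O3-C4-C5
atoms = {0: "C", 1: "C", 2: "O", 3: "O", 4: "C", 5: "C"}
bonds = {frozenset(b) for b in [(0, 1), (1, 2), (1, 3), (3, 4), (4, 5)]}
synthons = break_bonds(atoms, bonds, reaction_center=[(1, 3)])
print(synthons)  # -> [[0, 1, 2], [3, 4, 5]]  (acyl fragment + ethoxy fragment)
```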

A more recent advancement is the emergence of neuro-symbolic programming, which aims to bridge the gap between these paradigms. Inspired by human learning, these systems alternately extend symbolic reaction template libraries and refine neural network models, creating a self-improving cycle [4]. For example, some modern systems operate through wake, abstraction, and dreaming phases—solving retrosynthesis tasks, extracting multi-step strategies like cascade and complementary reactions, and refining neural models through simulated experiences [4].

Comparative Performance Analysis of Retrosynthesis Approaches

Table 1: Top-K Accuracy Comparison of Various Retrosynthesis Methods on the USPTO-50K Benchmark Dataset

| Method | Category | Top-1 Accuracy (%) | Top-3 Accuracy (%) | Top-5 Accuracy (%) | Top-10 Accuracy (%) |
|---|---|---|---|---|---|
| RSGPT [3] | Template-Free (LLM) | 63.4 | - | - | - |
| State2Edits [14] | Semi-Template-Based | 55.4 | 78.0 | - | - |
| RetroExplainer [6] | Molecular Assembly | 54.2 (Reaction Type Unknown) | 72.1 (Reaction Type Unknown) | 78.3 (Reaction Type Unknown) | 85.4 (Reaction Type Unknown) |
| LocalRetro [6] | Template-Based | ~54.0 (Reaction Type Unknown) | ~73.0 (Reaction Type Unknown) | ~79.0 (Reaction Type Unknown) | 86.4 (Reaction Type Unknown) |
| G2G [14] | Semi-Template-Based | 48.9 | 73.4 | - | - |
| GraphRetro [14] | Semi-Template-Based | 46.4 | 63.3 | - | - |
| MEGAN [14] | Semi-Template-Based | 44.0 | 65.0 | - | - |

Performance on standard benchmarks like USPTO-50K reveals clear differences between approaches. Recent large language model (LLM)-based approaches like RSGPT demonstrate state-of-the-art performance, achieving 63.4% top-1 accuracy through pre-training on ten billion generated reaction datapoints and reinforcement learning from AI feedback (RLAIF) [3]. Semi-template models like State2Edits strike a balance between template-based and template-free methods, achieving competitive top-1 accuracy (55.4%) while maintaining interpretability through an edit-based prediction process [14]. Interpretable frameworks like RetroExplainer, which formulates retrosynthesis as a molecular assembly process, achieve strong overall performance (top-1 accuracy of 54.2% when reaction type is unknown) while providing transparent decision-making [6].

Multi-Step Planning and Group Efficiency

Table 2: Performance Comparison in Multi-Step and Group Retrosynthesis Planning

| Method | Category | Planning Success Rate (%) | Key Strengths | Inference Time Trend |
|---|---|---|---|---|
| Neuro-symbolic Model [4] | Hybrid (Neuro-symbolic) | 98.42 (on Retro*-190) | Pattern reuse, Decreasing marginal time | Decreases with more molecules |
| Retro* [6] | Search Algorithm | - | Pathway validation, Literature alignment | Standard |
| EG-MCTS, PDVN [4] | Search Algorithm | ~95.4 | - | Standard |

For multi-step synthesis planning, search algorithms guided by neural networks play a crucial role. When extended to multi-step planning, RetroExplainer identified 101 pathways for complex drug molecules, with 86.9% of the single-step reactions corresponding to those reported in the literature [6]. For planning groups of similar molecules, a common scenario with AI-generated compounds, neuro-symbolic models show a particular advantage. They achieve a 98.42% success rate on the Retro*-190 dataset and significantly reduce inference time by reusing synthetic patterns and pathways across similar molecules [4]. This ability to learn reusable multi-step reaction processes (cascade and complementary reactions) yields progressively decreasing marginal inference time, a significant efficiency gain for drug discovery pipelines that handle similar molecular scaffolds [4].
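Why marginal cost can fall across a group of related targets is easiest to see with a much simpler mechanism than the one cited: a cache of solved sub-routes shared between targets. The sketch below is that analogy only. The neuro-symbolic model instead abstracts reusable templates and retrains its networks. The `expansions` table and molecule names are hypothetical, and only successes are cached, because a failure under a depth limit may not be a true failure.

```python
from typing import Dict, List, Optional, Sequence, Set, Tuple

Route = List[Tuple[str, Tuple[str, ...]]]
Expansions = Dict[str, List[Tuple[str, ...]]]  # molecule -> candidate precursor tuples


def solve(
    mol: str,
    expansions: Expansions,
    stock: Set[str],
    cache: Dict[str, Route],
    stats: Dict[str, int],
    depth: int = 0,
    max_depth: int = 6,
) -> Optional[Route]:
    """Depth-first route search that stores every solved sub-route in a shared cache."""
    if mol in stock:
        return []  # purchasable leaf: nothing to do
    if mol in cache:
        return cache[mol]  # reuse a pathway found for an earlier target
    if depth >= max_depth:
        return None
    stats["expansions"] += 1  # each call past this point costs one model expansion
    for precursors in expansions.get(mol, []):
        route: Route = [(mol, precursors)]
        for p in precursors:
            sub = solve(p, expansions, stock, cache, stats, depth + 1, max_depth)
            if sub is None:
                break
            route.extend(sub)
        else:  # every precursor was solved
            cache[mol] = route
            return route
    return None


def plan_group(targets: Sequence[str], expansions: Expansions, stock: Set[str]) -> List[int]:
    """Plan related targets in sequence; report the new expansions each one needed."""
    cache: Dict[str, Route] = {}
    per_target = []
    for target in targets:
        stats = {"expansions": 0}
        solve(target, expansions, stock, cache, stats)
        per_target.append(stats["expansions"])
    return per_target


stock = {"A", "B", "C"}
expansions: Expansions = {
    "Core": [("A", "B")],          # shared scaffold intermediate
    "T1": [("Core", "C")],
    "T2": [("Core", "A")],
    "T3": [("Core", "B")],
}
print(plan_group(["T1", "T2", "T3"], expansions, stock))  # -> [2, 1, 1]
```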

Experimental Protocols and Methodologies

Benchmarking Standards and Data Preparation

Experimental evaluation of retrosynthesis tools primarily uses standardized datasets derived from patent literature, with USPTO-50K being the most widely adopted benchmark [14] [6]. This dataset contains 50,000 high-quality reactions with correct atom mapping, classified into 10 reaction types [14]. Standard evaluation protocols employ top-k exact match accuracy, measuring whether the ground-truth reactants exactly match any of the top k predictions [6].

To address potential scaffold bias in random data splits, researchers increasingly use similarity-based splitting methods. For example, the Tanimoto similarity threshold method (with thresholds of 0.4, 0.5, and 0.6) ensures that structurally similar molecules don't appear in both training and test sets, providing a more rigorous assessment of model generalizability [6].

Training Methodologies Across Paradigms

Template-based and semi-template models typically employ specialized neural architectures for their specific tasks. State2Edits uses a directed message passing neural network (D-MPNN) to predict edit sequences, integrating reaction center identification and synthon completion into a unified framework [14]. It introduces state transformation edits (main state and generate state) to handle complex multi-atom edits through a combination of single-atom and bond edits [14].
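The "directed" part of a D-MPNN means that hidden states live on directed bonds. The message into bond v→w aggregates bonds k→v but skips the reverse bond w→v, so information does not immediately bounce back along the bond it came from. The sketch below shows only that update, with these simplifications:

- Vectors are plain lists.
- The initial bond states are given directly.
- The weights are fixed by hand rather than learned.
- Atom readout is a plain sum of incoming bond states.

It is not State2Edits' architecture and omits the feature encoders and learned readout a real model would have.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Edge = Tuple[int, int]  # directed bond: (source atom, destination atom)


def matvec(W: List[Vector], x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def dmpnn(
    neighbors: Dict[int, List[int]], h0: Dict[Edge, Vector], W: List[Vector], steps: int
) -> Tuple[Dict[Edge, Vector], Dict[int, Vector]]:
    """Directed message passing: bond v->w aggregates bonds k->v but never the reverse bond w->v."""
    h = dict(h0)
    dim = len(next(iter(h0.values())))
    for _ in range(steps):
        new_h: Dict[Edge, Vector] = {}
        for (v, w), h_init in h0.items():
            message = [0.0] * dim
            for k in neighbors[v]:
                if k != w:  # exclude the reverse edge
                    message = add(message, h[(k, v)])
            new_h[(v, w)] = relu(add(h_init, matvec(W, message)))  # skip connection to h0
        h = new_h
    # Simplified atom readout: sum the hidden states of all bonds pointing into each atom.
    atom_states: Dict[int, Vector] = {}
    for v, nbrs in neighbors.items():
        total = [0.0] * dim
        for k in nbrs:
            total = add(total, h[(k, v)])
        atom_states[v] = total
    return h, atom_states


# Three-atom chain 0-1-2 with one-dimensional states and an identity weight matrix.
chain = {0: [1], 1: [0, 2], 2: [1]}
initial = {edge: [1.0] for edge in [(0, 1), (1, 0), (1, 2), (2, 1)]}
bond_states, atom_states = dmpnn(chain, initial, W=[[1.0]], steps=1)
print(bond_states)
print(atom_states)
```

After one step, the bonds leaving the central atom (1→0 and 1→2) have gathered information from the other side of the chain. The bonds leaving the terminal atoms have not, because their only neighbor is the reverse edge.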

Large language models (LLMs) for retrosynthesis, such as RSGPT, employ sophisticated multi-stage training reminiscent of natural language processing:

- Synthetic Data Pre-training: Using template-based algorithms (e.g., RDChiral) to generate billions of reaction datapoints from molecular fragments and known reaction templates [3].

- Reinforcement Learning from AI Feedback (RLAIF): The model generates reactants and templates, with validation tools like RDChiral providing feedback through reward mechanisms, helping the model learn relationships between products, reactants, and templates [3].

- Task-Specific Fine-tuning: Final optimization on specific benchmark datasets (e.g., USPTO-50K, USPTO-MIT, USPTO-FULL) to maximize performance [3].
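The RLAIF step relies on a validator that checks whether the model's outputs are mutually consistent. The sketch below shows one simple way to turn such a check into a reward, under these assumptions:

- The binary 1.0/0.0 reward is an assumption; the cited work's reward design may differ.
- `run_template` is injected so that no particular library API is assumed. In practice it could wrap an RDChiral call such as `rdchiral.main.rdchiralRunText`.
- The toy template table is invented.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical template runner: (retro template, product SMILES) -> candidate reactant SMILES.
TemplateRunner = Callable[[str, str], Iterable[str]]


def reactant_key(smiles: str) -> Tuple[str, ...]:
    """Order-insensitive key for a '.'-separated reactant set (assumes canonical SMILES)."""
    return tuple(sorted(smiles.split(".")))


def template_consistency_reward(
    product: str, generated_reactants: str, generated_template: str, run_template: TemplateRunner
) -> float:
    """Reward 1.0 when the model's own template turns the product into the model's own reactants."""
    try:
        outcomes = list(run_template(generated_template, product))
    except ValueError:
        return 0.0  # malformed template string: no credit
    wanted = reactant_key(generated_reactants)
    return 1.0 if any(reactant_key(o) == wanted for o in outcomes) else 0.0


# Toy runner: one known template and its outcome on one product.
TOY_TEMPLATES = {("amide_retro", "CC(=O)NCc1ccccc1"): ["CC(=O)O.NCc1ccccc1"]}


def toy_runner(template: str, product: str) -> Iterable[str]:
    if template != "amide_retro":
        raise ValueError(f"cannot parse template {template!r}")
    return TOY_TEMPLATES.get((template, product), [])


print(template_consistency_reward("CC(=O)NCc1ccccc1", "NCc1ccccc1.CC(=O)O", "amide_retro", toy_runner))  # -> 1.0
print(template_consistency_reward("CC(=O)NCc1ccccc1", "CCO", "amide_retro", toy_runner))                 # -> 0.0
```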

Neuro-symbolic systems implement a cyclic learning process inspired by human cognition:

- Wake Phase: Attempting to solve retrosynthesis tasks and recording the process [4].

- Abstraction Phase: Extracting useful multi-step strategies (cascade chains for consecutive transformations, complementary chains for interacting reactions) and adding them as abstract reaction templates to the library [4].

- Dreaming Phase: Generating synthetic retrosynthesis data ("fantasies") to refine neural models through experience replay, improving their ability to select and apply strategies [4].

Diagram Title: Neuro-symbolic System Learning Cycle

Table 3: Key Research Reagents and Computational Tools for Retrosynthesis

| Resource Name | Type | Primary Function | Relevance in Research |
|---|---|---|---|
| USPTO Datasets [3] [14] [6] | Data | Benchmarking and training | Provides standardized reaction data for model development and evaluation (e.g., USPTO-50K, USPTO-FULL, USPTO-MIT). |
| RDChiral [3] | Software Algorithm | Template extraction and validation | Enforces chemical rules; used for generating synthetic training data and validating model predictions in RLAIF. |
| SYNTHIA [15] | Software Platform | Retrosynthesis planning | Commercial tool combining chemist-encoded rules with ML; database of 12+ million building blocks. |
| Tanimoto Similarity [6] | Evaluation Metric | Assessing molecular similarity | Implements rigorous dataset splitting to prevent scaffold bias and test model generalizability. |
| Reaction Templates [4] [3] | Knowledge Base | Encoding transformation rules | Fundamental to template-based and neuro-symbolic approaches; can be expert-curated or data-derived. |
| SciFinder-n [6] | Literature Database | Reaction validation | Validates predicted synthetic routes against published chemical literature. |

The evolution from rule-based systems to machine learning has fundamentally transformed retrosynthesis planning, offering researchers increasingly powerful tools for synthetic route design. Each approach presents distinct advantages: rule-based systems provide interpretability and reliability for well-understood chemical transformations; machine learning models offer superior predictive accuracy and the ability to discover novel pathways; hybrid neuro-symbolic approaches combine the strengths of both, enabling knowledge reuse and efficient planning for molecular families.

For drug development professionals, the choice of tool depends on specific research needs. When working with novel molecular scaffolds or seeking unprecedented disconnections, data-driven ML models offer the most creative solutions. For optimizing routes around established chemical space, template-based and semi-template methods provide reliable and interpretable predictions. Most promisingly, neuro-symbolic systems that learn and reuse synthetic patterns present a compelling future direction, particularly for pharmaceutical discovery pipelines that frequently explore groups of structurally similar molecules. As these technologies continue to mature, the integration of retrosynthesis planning with generative molecular design will further accelerate the development of new therapeutics and functional materials.

Retrosynthesis planning, the process of deconstructing complex target molecules into simpler, commercially available precursors, is a cornerstone of organic chemistry and drug discovery [16]. The field is currently powered by two main types of tools: commercial software platforms used in industrial settings, and advanced research frameworks emerging from academic and corporate R&D. Commercial platforms like SciFinder-n, Reaxys, and SYNTHIA often integrate vast databases of known reactions with predictive algorithms, while research frameworks such as RSGPT and AOT* push the boundaries with novel artificial intelligence (AI) and large language model (LLM) approaches [17] [3] [18]. This guide provides a comparative analysis of these tools, focusing on their methodologies, performance, and applicability for researchers and drug development professionals.

Commercial Platforms at a Glance

Commercial retrosynthesis tools are designed for practical application, offering robust, user-friendly interfaces backed by extensive reaction databases and expert-curated rules.

Table 1: Overview of Leading Commercial Retrosynthesis Platforms

| Platform | Key Strengths | Primary Limitations | Ideal Use Case |
|---|---|---|---|
| SciFinder-n (CAS) [17] | Unrivaled data from the CAS Content Collection; dynamic, interactive plans; stereoselective labeling. | Focuses on known routes; premium subscription cost. | Deep dives into known, published chemistry. |
| Reaxys (Elsevier) [17] | Combines high-quality reaction data with AI (Iktos/PendingAI); access to experimental procedures & supplier links. | High subscription cost; some AI processes are "black box." | Diverse predictions and practical sourcing. |
| SYNTHIA (Merck) [17] | Combines expert rules & machine learning for practical, green synthesis; custom inventory-friendly planning. | Requires significant enterprise investment. | Industry-focused, green chemistry with custom inventory. |

These platforms are integral to the workflows of many pharmaceutical and chemical companies. Their strength lies in leveraging vast repositories of known chemical knowledge, such as the CAS Content Collection for SciFinder-n, which provides high confidence in the validity of suggested routes [17]. However, a common limitation is their potential bias towards known chemistry, which might constrain the discovery of novel or more efficient synthetic pathways.

Cutting-Edge Research Frameworks

Research frameworks often prioritize algorithmic innovation and performance on benchmark datasets, demonstrating state-of-the-art results in generating novel retrosynthetic pathways.

Performance Comparison

Table 2: Performance Metrics of Selected Research Frameworks

| Framework | Core Innovation | Reported Top-1 Accuracy | Key Advantage |
|---|---|---|---|
| RSGPT [3] | Generative Transformer pre-trained on 10B synthetic datapoints; uses RLAIF. | 63.4% (USPTO-50K) | State-of-the-art accuracy from massive, diverse data. |
| AOT* [18] | Integrates LLM-generated pathways with AND-OR tree search. | Competitive with state of the art | 3-5x higher search efficiency; excels with complex molecules. |
| Neuro-symbolic Model [4] | Learns reusable, multi-step patterns (cascade/complementary reactions). | High success rate | Progressively decreases inference time for similar molecules. |
| Retro*-Default [2] | A* search with a neural value network. | Not specified | Better balance of solvability and route feasibility. |

In-Depth Framework Analysis

RSGPT: This model addresses the data bottleneck in retrosynthesis by using a template-based algorithm to generate over 10 billion synthetic reaction datapoints for pre-training [3]. Its training strategy mirrors that of large language models, involving pre-training, Reinforcement Learning from AI Feedback (RLAIF) to validate generated reactants, and fine-tuning. This approach allows it to achieve a top-1 accuracy of 63.4% on the USPTO-50K benchmark, substantially outperforming previous models [3].

AOT*: This framework tackles the computational challenges of multi-step planning by combining the reasoning capabilities of LLMs with the systematic efficiency of AND-OR tree search [18]. It maps complete synthesis pathways generated by an LLM onto an AND-OR tree, enabling structural reuse of intermediates and dramatically reducing redundant searches. The result is a state-of-the-art performance achieved with 3-5 times fewer iterations than other LLM-based approaches, making it particularly effective for complex targets [18].
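The idea of mapping whole pathways onto a shared AND-OR structure can be sketched in a few lines. This is a minimal illustration under stated assumptions, not AOT*'s implementation: pathways arrive as lists of (product, reactants) steps, SMILES strings are assumed to be canonical already, reagents are omitted, and the class and method names are hypothetical. Because every molecule maps to exactly one node, a second pathway that repeats an intermediate step reuses it instead of expanding it again.

```python
from dataclasses import dataclass, field


@dataclass
class AndNode:
    """A reaction: solved only if every reactant molecule is solved."""
    product: str
    reactants: tuple


@dataclass
class OrNode:
    """A molecule: solved if any one of its child reactions is solved."""
    smiles: str
    reactions: list = field(default_factory=list)


class AndOrTree:
    def __init__(self):
        # One OrNode per distinct SMILES string, shared by every pathway.
        # (Assumes SMILES are already canonical; real code would canonicalize.)
        self.nodes = {}

    def get_or_create(self, smiles):
        if smiles not in self.nodes:
            self.nodes[smiles] = OrNode(smiles)
        return self.nodes[smiles]

    def add_pathway(self, steps):
        """Map one pathway, given as (product, reactants) steps, onto the tree.

        Returns how many reactions were new; steps already present from an
        earlier pathway are reused instead of being added again.
        """
        added = 0
        for product, reactants in steps:
            node = self.get_or_create(product)
            key = tuple(sorted(reactants))
            if any(r.reactants == key for r in node.reactions):
                continue  # intermediate step already explored: reuse it
            for smiles in key:
                self.get_or_create(smiles)
            node.reactions.append(AndNode(product, key))
            added += 1
        return added


# Two hypothetical LLM-proposed routes to paracetamol sharing a nitro reduction.
PARACETAMOL = "CC(=O)Nc1ccc(O)cc1"
AMINOPHENOL = "Nc1ccc(O)cc1"
NITROPHENOL = "O=[N+]([O-])c1ccc(O)cc1"

tree = AndOrTree()
first = tree.add_pathway([(PARACETAMOL, [AMINOPHENOL, "CC(=O)OC(C)=O"]),
                          (AMINOPHENOL, [NITROPHENOL])])
second = tree.add_pathway([(PARACETAMOL, [AMINOPHENOL, "CC(=O)Cl"]),
                           (AMINOPHENOL, [NITROPHENOL])])
print(first, second, len(tree.nodes))  # 2 1 5
```

The second pathway contributes only its acylation step; the reduction of 4-nitrophenol is found in the tree and skipped, which is the kind of redundancy the text says structural reuse avoids.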

Human-Guided AiZynthFinder: Enhancing the widely used tool AiZynthFinder, this research introduces "prompting" for human-guided synthesis planning [19]. Chemists can specify bonds to break or bonds to freeze, and the tool incorporates these constraints via a multi-objective search and a disconnection-aware transformer. This strategy successfully satisfied bond constraints for 75.57% of targets in the PaRoutes dataset, compared to 54.80% for the standard search, effectively incorporating chemists' prior knowledge into AI-driven planning [19].
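A bond prompt can be checked against a finished route with simple set operations. This sketch assumes bonds are given as atom-index pairs on the target and that atom mapping is tracked through every step; it only tests whether a route honours the constraints and does not reproduce the multi-objective search or the disconnection-aware transformer described in [19]. The function names are hypothetical.

```python
def route_satisfies(route_bonds, bonds_to_break, bonds_to_freeze):
    """Check one route against chemist-specified bond constraints.

    route_bonds: list of steps, each a list of (atom_i, atom_j) bonds broken
    in that step, indexed on the target's atoms (assumes atom mapping is kept).
    A route satisfies the prompt if it breaks every requested bond somewhere
    and never breaks a frozen bond. Bond direction is ignored.
    """
    broken = {frozenset(bond) for step in route_bonds for bond in step}
    must_break = {frozenset(bond) for bond in bonds_to_break}
    frozen = {frozenset(bond) for bond in bonds_to_freeze}
    return must_break <= broken and not (frozen & broken)


def satisfaction_rate(cases):
    """cases: list of (route_bonds, bonds_to_break, bonds_to_freeze) per target."""
    ok = [route_satisfies(*case) for case in cases]
    return sum(ok) / len(ok)


route = [[(1, 2)], [(4, 5), (6, 7)]]  # bonds broken in step 1, then step 2
print(route_satisfies(route, [(2, 1)], [(8, 9)]))  # True
print(route_satisfies(route, [(2, 1)], [(5, 4)]))  # False: a frozen bond was broken
cases = [
    (route, [(2, 1)], [(8, 9)]),
    (route, [(2, 1)], [(5, 4)]),
    (route, [(1, 2), (6, 7)], []),
    (route, [(3, 4)], []),
]
print(satisfaction_rate(cases))  # 0.5
```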

Experimental Protocols & Evaluation Metrics

Understanding how these tools are evaluated is critical for interpreting their performance claims. Benchmarking typically involves standardized datasets and specific metrics that measure both efficiency and route quality.

Common Experimental Protocols

- Dataset: The most common datasets used for benchmarking are derived from the United States Patent and Trademark Office (USPTO), such as USPTO-50K (containing 50,000 reactions) and the larger USPTO-FULL (with about two million reactions) [3] [2].

- Evaluation Metric - Solvability: This is the most basic metric, measuring the algorithm's ability to find a complete route from the target molecule to commercially available building blocks within a limited number of planning cycles [4] [2].

- Evaluation Metric - Route Feasibility: Recognizing that a solved route is not necessarily practical, this metric assesses the likelihood that the generated route can be successfully executed in a real laboratory. It is often calculated by averaging the feasibility scores of each single-step reaction in the route [2].
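The two metrics above can be computed from per-target search results as follows. The per-step feasibility scores are assumed to come from some external scorer, and the third returned value (the share of targets that are both solved and above a feasibility threshold) is an illustrative combination chosen for this sketch, not the combined metric used in [2].

```python
from statistics import mean


def route_feasibility(step_scores):
    """Route Feasibility as described above: the mean of per-step scores."""
    return mean(step_scores)


def evaluate(results, feasibility_threshold=0.6):
    """results: one dict per target, {"solved": bool, "step_scores": [float, ...]}.

    Returns (solvability, mean feasibility of solved routes, fraction of
    targets that are both solved and at or above the threshold). The third
    value is an illustrative assumption, not a published metric.
    """
    solvability = sum(r["solved"] for r in results) / len(results)
    feas = [route_feasibility(r["step_scores"]) for r in results if r["solved"]]
    mean_feas = mean(feas) if feas else 0.0
    solved_and_feasible = sum(f >= feasibility_threshold for f in feas) / len(results)
    return solvability, mean_feas, solved_and_feasible


# Toy per-step scores (illustrative values only).
results = [
    {"solved": True, "step_scores": [0.9, 0.7]},
    {"solved": True, "step_scores": [0.5]},
    {"solved": False, "step_scores": []},
    {"solved": True, "step_scores": [1.0, 0.8, 0.6]},
]
print(evaluate(results))
```

In this toy case solvability is 0.75, but only half of the targets are both solved and above the threshold, a small-scale version of the gap between solvability and feasibility discussed below.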

The following workflow diagram illustrates the standard process for evaluating a multi-step retrosynthesis framework, from the target molecule to the final assessment of the proposed route.

Critical Insight: Solvability vs. Feasibility

A key finding in recent literature is that the model combination with the highest solvability does not always produce the most feasible routes [2]. For instance, while one algorithm (MEEA-Default) demonstrated a high solvability of ~95%, another (Retro*-Default) performed better when considering a combined metric of both solvability and feasibility [2]. This underscores the necessity of using nuanced, multi-faceted metrics for a true assessment of a tool's practical utility.

The Scientist's Toolkit: Essential Research Reagents

In computational retrosynthesis, "research reagents" refer to the key software components, datasets, and algorithms that are combined to build and evaluate planning systems.

Table 3: Key Reagents in Retrosynthesis Research

| Reagent / Component | Type | Function in the Workflow |
|---|---|---|
| Single-Step Retrosynthesis Prediction Model (SRPM) [2] | Algorithm | Predicts possible reactants for a single product molecule. The core building block of multi-step planners. |
| Planning Algorithm [2] | Algorithm | Manages the multi-step decision process, guiding which molecule to break down next using strategies like A* or MCTS. |
| AND-OR Tree [18] | Data Structure | Represents the search space; OR nodes are molecules, AND nodes are reactions that decompose a molecule into precursors. |
| USPTO Datasets [3] | Dataset | Standard benchmark datasets (e.g., USPTO-50K, USPTO-FULL) for training and evaluating models. |
| Building Block Set (e.g., ZINC) [18] | Dataset | A catalog of commercially available molecules used as the stopping condition for the retrosynthetic search. |
| Reaction Templates [3] | Knowledge Base | Expert-defined or automatically extracted rules that describe how a reaction center is transformed. |


The landscape of retrosynthesis planning is diverse, with clear trade-offs between commercial platforms and research frameworks. Commercial tools like SciFinder-n, Reaxys, and SYNTHIA offer reliability, extensive curated data, and practical features for industrial chemists [17]. In contrast, research frameworks like RSGPT and AOT* demonstrate superior raw performance and algorithmic efficiency on benchmarks, often by leveraging massive data generation or novel LLM integrations [3] [18]. A critical trend is the move beyond simple "solvability" metrics towards more holistic evaluations that consider Route Feasibility, ensuring that predicted routes are not just theoretically sound but also practically executable [2]. The choice between a commercial platform and a research framework ultimately depends on the user's specific needs: proven reliability and integration for day-to-day tasks versus cutting-edge performance and novelty for pushing the boundaries of synthesizable chemical space.

The choice of molecular representation is a foundational step in computational chemistry and computer-assisted synthesis planning, directly influencing the performance of models in predicting molecular properties, generating novel compounds, and planning retrosynthetic pathways. Representations translate the physical structure of a molecule into a format that machine learning algorithms can process. Within the specific context of retrosynthesis planning—a core task in validating and prioritizing molecules generated by AI models—the representation dictates how effectively a model can recognize key functional groups and suggest plausible synthetic routes. This guide provides a comparative analysis of the dominant molecular representation paradigms, supported by recent experimental data, to inform researchers and drug development professionals.

Comparative Analysis of Representation Methods

The following representations are the most prevalent in modern computational chemistry, each with distinct strengths and weaknesses.

String-Based Representations

String-based representations encode molecular structures as linear text sequences, making them compatible with natural language processing models and transformer architectures.

- SMILES (Simplified Molecular-Input Line-Entry System): Represents a molecule's atomic structure and connectivity as a compact string of characters [20] [21]. Its primary weaknesses are that it does not explicitly encode molecular topology and that generative models working in SMILES can produce invalid strings [21] [22].

- SELFIES (SELF-referencing Embedded Strings): A newer string-based format designed to guarantee 100% validity in molecular generation tasks, addressing a key limitation of SMILES [23] [22].

- IUPAC Names: The systematic nomenclature developed by the International Union of Pure and Applied Chemistry. These names are human-readable and describe the molecular structure unambiguously [24] [23].

- InChI (International Chemical Identifier): A standardized, non-proprietary identifier designed to provide a unique and permanent representation of molecular structures [25].

Graph-Based Representations

Graph-based representations explicitly capture the topology of a molecule, treating atoms as nodes and bonds as edges in a graph [21]. This format has become the backbone for Graph Neural Networks (GNNs).

- Atom Graph: The most fundamental graph representation, where each node represents an atom with features like element type, and edges represent chemical bonds [26] [21].

- Group Graph (Substructure-Level Graph): A more advanced representation where nodes correspond to chemically significant substructures (e.g., functional groups, aromatic rings), and edges represent the connections between them [26]. This method provides a higher level of abstraction, enhancing interpretability and efficiency.

Advanced and Hybrid Representations

To overcome the limitations of single-modality representations, researchers are developing more sophisticated approaches.

- 3D-Aware Representations: These models incorporate the three-dimensional geometry of molecules, which is critical for modeling quantum properties and molecular interactions [20] [21]. Methods like 3D Infomax enhance GNNs by using 3D structural data during pre-training [21].

- Hypergraph Representations: Frameworks like OmniMol formulate molecules and their properties as a hypergraph, which can capture complex many-to-many relationships between molecules and various chemical properties, making them particularly suited for imperfectly annotated data [27].

- Fragment-Enhanced SMILES: Models like MLM-FG use a novel pre-training strategy on SMILES strings by randomly masking subsequences that correspond to functional groups, forcing the model to learn richer, more chemically contextualized representations [20].

Quantitative Performance Comparison

Performance on Molecular Property Prediction Tasks

Table 1: Performance Comparison of Representation Methods on MoleculeNet Benchmarks (Classification Tasks, Metric: AUC-ROC)

| Representation Method | BBBP | ClinTox | Tox21 | HIV | Average Performance |
|---|---|---|---|---|---|
| MLM-FG (SMILES-based) [20] | Outperforms baselines | ~0.94 (AUC-ROC) | Outperforms baselines | Outperforms baselines | State-of-the-art |
| Graph Neural Networks (GNNs) [20] | Baseline | ~0.92 (AUC-ROC) | Baseline | Baseline | Strong baseline |
| 3D Graph-Based Models (e.g., GEM) [20] | Outperformed by MLM-FG | Outperformed by MLM-FG | Outperformed by MLM-FG | Outperformed by MLM-FG | Strong, but computationally expensive |
| Group Graph (GIN) [26] | Higher accuracy & 30% faster runtime than atom graph | Information Not Available | Information Not Available | Information Not Available | High performance & efficiency |

Table 2: Performance of LLMs with Different String Representations in Few-Shot Learning (Metric: Accuracy) [25]

| Molecular String Representation | GPT-4o | Gemini 1.5 Pro | Llama 3.1 | Mistral Large 2 |
|---|---|---|---|---|
| IUPAC | Statistically significant preference | Statistically significant preference | Statistically significant preference | Statistically significant preference |
| InChI | Statistically significant preference | Statistically significant preference | Statistically significant preference | Statistically significant preference |
| SMILES | Lower performance | Lower performance | Lower performance | Lower performance |
| SELFIES | Lower performance | Lower performance | Lower performance | Lower performance |
| DeepSMILES | Lower performance | Lower performance | Lower performance | Lower performance |

Table 3: Specialized Model Performance in Retrosynthesis Planning

| Model / Algorithm | Retro*-190 Success Rate | Key Innovation | Applicability to Group of Similar Molecules |
|---|---|---|---|
| Data-Driven Group Planning [4] | ~98.4% | Reusable synthesis patterns; Cascade & Complementary reactions | Significantly reduces inference time |
| EG-MCTS [4] | ~96.9% | Neural-guided search | Not Specifically Designed |
| PDVN [4] | ~95.5% | Value network for route selection | Not Specifically Designed |

Key Performance Insights

- SMILES vs. Graphs: While SMILES-based models like MLM-FG can outperform even 3D-graph models on many property prediction tasks [20], graph-based models like the Group Graph offer superior interpretability by highlighting substructure contributions and can achieve higher computational efficiency [26].

- The LLM Preference: Contrary to conventional assumptions in cheminformatics, recent studies on large language models (LLMs) show a statistically significant preference for IUPAC and InChI representations over SMILES in zero- and few-shot property prediction tasks. This is potentially due to their granularity, more favorable tokenization, and higher prevalence in the models' general pre-training corpora [25].

- The Consistency Problem: A critical challenge with LLMs is their representation inconsistency. State-of-the-art models exhibit strikingly low consistency (≤1%), often producing different predictions for the same molecule when presented as a SMILES string versus its IUPAC name, indicating a reliance on surface-level textual patterns rather than intrinsic chemical understanding [24].

Experimental Protocols and Methodologies

Pre-training with Functional Group Masking (MLM-FG)

Objective: To improve the model's learning of chemically meaningful contexts from SMILES strings [20]. Workflow:

- Input: A large corpus of unlabeled SMILES strings (e.g., 100 million molecules from PubChem).

- Parsing and Identification: The SMILES string for a molecule is parsed to identify subsequences corresponding to chemically significant functional groups (e.g., carboxylic acid, ester).

- Random Masking: A certain proportion of these identified functional group subsequences are randomly masked.

- Pre-training Task: A transformer-based model (e.g., based on MoLFormer or RoBERTa) is trained to predict the masked functional groups. This forces the model to infer missing structural units based on the surrounding molecular context.

- Evaluation: The pre-trained model is fine-tuned and evaluated on downstream molecular property prediction tasks from benchmarks like MoleculeNet using scaffold splits to test generalizability.
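The masking step can be approximated at the string level. This is a minimal sketch under an explicit assumption: functional groups are located by plain substring search over a small hypothetical pattern list, whereas MLM-FG parses the molecule to identify them, and a real pipeline would tokenize the SMILES before masking. The function returns both the masked input and the spans the model would be trained to recover.

```python
import random
import re

# Illustrative SMILES substrings for a few functional groups. This is an
# assumption for the sketch: MLM-FG identifies functional groups by parsing
# the molecule, whereas plain substring search is cruder (for example,
# "C(=O)O" also matches the acyl part of an ester).
FG_PATTERNS = ["C(=O)O", "C(=O)N", "C#N", "[N+](=O)[O-]"]


def find_fg_spans(smiles):
    """Return non-overlapping (start, end) spans of functional-group substrings."""
    spans = sorted(
        (m.start(), m.end())
        for pattern in FG_PATTERNS
        for m in re.finditer(re.escape(pattern), smiles)
    )
    kept, last_end = [], 0
    for start, end in spans:
        if start >= last_end:  # skip spans overlapping an earlier one
            kept.append((start, end))
            last_end = end
    return kept


def mask_functional_groups(smiles, mask_ratio=0.5, seed=0, mask_token="<mask>"):
    """Replace a random subset of functional-group spans with a mask token.

    Returns the masked string and the original substrings the model must
    predict, in left-to-right order.
    """
    spans = find_fg_spans(smiles)
    if not spans:
        return smiles, []
    rng = random.Random(seed)
    n_mask = max(1, round(mask_ratio * len(spans)))
    chosen = sorted(rng.sample(spans, n_mask))
    pieces, targets, cursor = [], [], 0
    for start, end in chosen:
        pieces.append(smiles[cursor:start])
        pieces.append(mask_token)
        targets.append(smiles[start:end])
        cursor = end
    pieces.append(smiles[cursor:])
    return "".join(pieces), targets


aspirin = "CC(=O)Oc1ccccc1C(=O)O"
print(mask_functional_groups(aspirin, mask_ratio=1.0))
# ('C<mask>c1ccccc1<mask>', ['C(=O)O', 'C(=O)O'])
```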

Constructing a Group Graph

Objective: To create a substructure-level molecular graph that retains structural information with minimal loss while enhancing interpretability and efficiency [26]. Workflow:

- Group Matching:
  - Identify all aromatic atoms and group bonded ones into aromatic rings.
  - Use pattern matching (e.g., with RDKit) to find atom IDs for "active groups" (broken functional groups like carbonyl, halogens).
  - Group the remaining bonded atoms into "fatty carbon groups."

- Substructure Extraction:
  - Extract the identified active groups and fatty carbon groups as distinct substructures, adding them to a vocabulary.
  - Establish links between substructures that are bonded in the original atom graph. The bonded atom pairs are recorded as "attachment atom pairs."

- Graph Formation:
  - Represent each substructure as a node.
  - Represent each link between substructures as an edge.
  - The features of the attachment atom pairs become the features of the edges.

- Model Training and Evaluation:
  - A Graph Isomorphism Network (GIN) is typically applied to the group graph.
  - The model is evaluated on tasks like molecular property prediction and drug-drug interaction prediction, where it has demonstrated higher accuracy and efficiency compared to atom-level graphs.
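The substructure-extraction and graph-formation steps reduce to contracting the atom graph along a group assignment. In this sketch the atom-to-group mapping is assumed to be the output of the group-matching step (written by hand here), and attachment atom pairs stand in for the edge features a GIN would consume; no RDKit or GNN code is involved, and the function name is hypothetical.

```python
from collections import defaultdict


def build_group_graph(atom_bonds, atom_to_group):
    """Contract an atom graph into a group graph.

    atom_bonds: iterable of (i, j) bonded atom-index pairs.
    atom_to_group: dict mapping each atom index to a substructure label,
    i.e. the output of the group-matching step (assumed given here).
    Returns (nodes, edges): one node per substructure, and for each linked
    pair of substructures the list of attachment atom pairs, which would
    supply the edge features.
    """
    nodes = sorted(set(atom_to_group.values()))
    edges = defaultdict(list)
    for i, j in atom_bonds:
        gi, gj = atom_to_group[i], atom_to_group[j]
        if gi == gj:
            continue  # bond inside one substructure: absorbed into the node
        key = tuple(sorted((gi, gj)))
        edges[key].append((i, j) if gi == key[0] else (j, i))
    return nodes, dict(edges)


# Phenylacetic acid, OC(=O)Cc1ccccc1: atoms 0-2 carboxylic acid (active group),
# atom 3 the CH2 linker (fatty carbon group), atoms 4-9 the benzene ring.
bonds = [(0, 1), (1, 2), (1, 3), (3, 4), (4, 5), (5, 6),
         (6, 7), (7, 8), (8, 9), (9, 4)]
groups = {0: "acid", 1: "acid", 2: "acid", 3: "chain",
          **{k: "ring" for k in range(4, 10)}}
nodes, edges = build_group_graph(bonds, groups)
print(nodes)  # ['acid', 'chain', 'ring']
print(edges)  # {('acid', 'chain'): [(1, 3)], ('chain', 'ring'): [(3, 4)]}
```

Ten atoms and ten bonds collapse into three nodes and two edges, which is where the efficiency gain of substructure-level graphs comes from.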

Evaluating LLM Consistency

Objective: To systematically benchmark whether LLMs perform representation-invariant reasoning for chemical tasks [24]. Workflow:

- Dataset Curation: Create a benchmark with paired representations of molecules (SMILES strings and IUPAC names) for tasks like property prediction, forward reaction prediction, and retrosynthesis.

- Model Querying: For each molecule in the test set, query the LLM twice—once with the SMILES input and once with the IUPAC input—while keeping the task instruction equivalent.

- Metric Calculation:

- Consistency: The percentage of cases where the model produces identical predictions for both representations of the same molecule. An adjusted consistency accounts for chance-level agreement.

- Accuracy: The percentage of cases where the model's prediction matches the ground truth for each representation.

- Intervention (Consistency Regularization): To improve consistency, a sequence-level symmetric Kullback–Leibler (KL) divergence loss can be added during fine-tuning. This loss penalizes the model when its output distributions differ for the same molecule in different formats.
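The two metrics and the regularizer can be written compactly. Two assumptions apply: the chance correction uses Cohen's kappa, which may differ from the benchmark's exact adjusted consistency, and the symmetric KL divergence is computed over a single categorical distribution (for example, over candidate answers) rather than accumulated over output tokens as a sequence-level loss would be.

```python
import math


def consistency(preds_a, preds_b):
    """Share of molecules given the same prediction in both representations."""
    return sum(a == b for a, b in zip(preds_a, preds_b)) / len(preds_a)


def chance_adjusted_consistency(preds_a, preds_b):
    """Cohen's kappa, used here as one possible chance correction (an
    assumption; the benchmark's exact adjustment may differ)."""
    n = len(preds_a)
    p_obs = consistency(preds_a, preds_b)
    labels = set(preds_a) | set(preds_b)
    p_chance = sum(preds_a.count(label) * preds_b.count(label) for label in labels) / n ** 2
    if p_chance == 1.0:
        return 1.0
    return (p_obs - p_chance) / (1.0 - p_chance)


def symmetric_kl(p, q, eps=1e-12):
    """KL(p||q) + KL(q||p) for two categorical distributions over the same
    candidate outputs; eps guards against log(0)."""
    kl_pq = sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))
    kl_qp = sum(qi * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))
    return kl_pq + kl_qp


# Toy predictions for four molecules, queried once as SMILES, once as IUPAC.
smiles_preds = ["toxic", "safe", "toxic", "toxic"]
iupac_preds = ["toxic", "toxic", "toxic", "safe"]
print(consistency(smiles_preds, iupac_preds))                  # 0.5
print(chance_adjusted_consistency(smiles_preds, iupac_preds))  # about -0.333
```

A raw agreement of 50% looks moderate, but once chance agreement from the label frequencies is removed the score falls below zero. During fine-tuning, `symmetric_kl` would be added to the task loss as a penalty on diverging output distributions for the two formats.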

Visualization of Representation Relationships and Workflows

The Scientist's Toolkit: Key Research Reagents and Solutions

Table 4: Essential Software and Libraries for Molecular Representation Research

| Tool / Library | Type | Primary Function | Relevance to Representations |
|---|---|---|---|
| RDKit [26] | Open-Source Cheminformatics | Chemical information manipulation | Fundamental for parsing SMILES, generating molecular graphs, substructure matching, and descriptor calculation. |
| PyTorch | Deep Learning Framework | Model building and training | The foundation for implementing custom GNNs, Transformers, and other deep learning models. |
| Deep Graph Library (DGL) | Library for GNNs | Graph neural network development | Simplifies the implementation of GNNs on molecular graph data (atom graphs, group graphs). |
| Transformers Library | NLP Library | Pre-trained transformer models | Provides access to architectures (e.g., RoBERTa) and tools for training chemical language models on SMILES and other string representations. |
| PubChem [20] | Public Database | Repository of chemical molecules | A primary source for large-scale, unlabeled molecular data used in pre-training models like MLM-FG. |
| MoleculeNet [20] | Benchmark Suite | Curated molecular property datasets | The standard benchmark for objectively evaluating the performance of different representation methods on tasks like property prediction. |


Methodologies in Action: Algorithmic Approaches and Real-World Applications

Retrosynthetic planning is a fundamental process in organic chemistry and drug development, where the goal is to recursively decompose a target molecule into simpler, commercially available precursors. This process is naturally represented as an AND-OR tree: an OR node represents a molecule that can be synthesized through any one of several alternative reactions, while an AND node represents a reaction that decomposes its product into one or more reactant molecules, all of which must be obtained for the reaction to proceed [4]. Efficiently searching this combinatorial space is critical for identifying viable synthetic routes within reasonable computational time. The AND-OR tree structure allows synthesis planning algorithms to systematically explore alternative pathways while respecting the logical dependencies between reactions and their required precursors.
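The OR and AND semantics translate directly into a recursive solvability check. The sketch below uses placeholder molecule names and a plain dictionary for the search graph, both assumptions for illustration; practical planners also memoize results and track route costs.

```python
def is_solved(molecule, reactions, building_blocks, _path=frozenset()):
    """Evaluate an AND-OR search graph.

    reactions: dict mapping a molecule to its candidate reactions, each a
    tuple of reactants. OR semantics across candidate reactions, AND
    semantics across the reactants of one reaction. _path guards against cycles.
    """
    if molecule in building_blocks:
        return True
    if molecule in _path:
        return False  # revisiting a molecule on the current path: a cycle
    path = _path | {molecule}
    return any(
        all(is_solved(r, reactions, building_blocks, path) for r in reactants)
        for reactants in reactions.get(molecule, [])
    )


# Toy graph with placeholder names: T can be made from A + B, or from C alone.
reactions = {"T": [("A", "B"), ("C",)], "A": [("D",)]}
print(is_solved("T", reactions, {"B", "D"}))  # True: T <- A + B, A <- D
print(is_solved("T", reactions, {"B"}))       # False: D and C are unavailable
```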

Recent advancements have integrated machine learning with traditional symbolic search to create neurosymbolic frameworks that significantly enhance planning efficiency [4]. These approaches combine the explicit reasoning of symbolic systems with the pattern recognition capabilities of neural networks. For example, these frameworks use neural networks to guide the search process—one model helps choose where to expand the graph, and another guides how to expand it at a specified point [4]. This hybrid approach has demonstrated substantial improvements in success rates and computational efficiency compared to earlier methods, particularly when planning synthesis for groups of structurally similar molecules.

Algorithmic Frameworks and Comparative Analysis

The AOT* Framework and Neurosymbolic Programming

Inspired by human learning and neurosymbolic programming, a recent framework draws parallels to the DreamCoder system, which alternately extends a language for expressing domain concepts and trains neural networks to guide program search [4]. This approach, designed specifically for retrosynthetic planning, operates through three continuously alternating phases that create a learning and adaptation cycle:

- Wake Phase: The system attempts to solve retrosynthetic planning tasks, constructing an AND-OR search graph from target molecules. Neural network models guide the planning process, selecting expansion points and determining how to expand them. Successful synthesis routes and failures are recorded for subsequent analysis [4].

- Abstraction Phase: The system analyzes successful routes from the wake phase to extract reusable multi-step reaction patterns. It specifically identifies "cascade chains" for consecutive transformations and "complementary chains" for reactions that serve as precursors to others. The most useful strategies are formalized as abstract reaction templates and added to the expanding library [4].

- Dreaming Phase: To address the data-hungry nature of machine learning models, this phase generates synthetic retrosynthesis data ("fantasies") by simulating experiences from both bottom-up and top-down approaches. These fantasies, combined with replayed wake phase experiences, refine the neural models to improve their performance in subsequent cycles [4].
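The cascade-chain part of the abstraction phase can be approximated by counting template pairs that recur across solved routes. This is a simplified sketch, not the method of [4]: each route is flattened to a single branch of hypothetical template IDs, complementary chains are ignored, and a plain frequency cut-off (`min_count`) replaces whatever usefulness criterion the framework actually applies.

```python
from collections import Counter


def mine_cascade_chains(routes, min_count=2):
    """Count consecutive template pairs along solved routes.

    routes: one list of template IDs per solved route, in the order the
    templates were applied along a single branch (a simplification: real
    routes are trees). Pairs seen at least min_count times are returned as
    candidate two-step "abstract templates", most frequent first.
    """
    counts = Counter()
    for templates in routes:
        counts.update(zip(templates, templates[1:]))
    return [(pair, n) for pair, n in counts.most_common() if n >= min_count]


# Hypothetical template IDs from four solved routes in one wake phase.
solved_routes = [
    ["T1", "T2", "T5"],
    ["T1", "T2"],
    ["T5", "T3"],
    ["T1", "T2", "T3"],
]
print(mine_cascade_chains(solved_routes))  # [(('T1', 'T2'), 3)]
```

Once a pair such as `("T1", "T2")` is promoted to the library, later searches can apply both steps as one expansion, which is how pattern reuse shortens planning for similar molecules.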

This framework illustrates the core principle of the neurosymbolic approach: building expertise by alternately extending the strategy library and training neural networks to better utilize these strategies. The abstraction phase specifically enables the discovery of commonly used chemical patterns, which significantly expedites the search for synthesis routes of similar molecules [4].

Comparative Analysis of Retrosynthesis Planning Algorithms

Table 1: Performance Comparison of Retrosynthesis Planning Algorithms on the Retro*-190 Dataset

| Algorithm | Success Rate (%) | Average Iterations to Solution | Key Features | Limitations |
|---|---|---|---|---|
| AOT*-Inspired Neurosymbolic | 98.42% | ~120 | Three-phase wake-abstraction-dreaming cycle; Abstract template library; Cascade & complementary chains | Complexity in implementation; Requires extensive training data |
| EG-MCTS | ~95.4% | ~180 | Monte Carlo Tree Search with expert guidance; Exploration-exploitation balance | Slower convergence on similar molecules; Less reuse of patterns |
| PDVN | ~95.5% | ~190 | Value networks for route evaluation; Policy-guided search | Limited knowledge transfer between molecules |
| Retro* | ~92.0% | ~220 | A* search with neural cost estimation; Global perspective | Template-dependent; Less adaptive to new patterns |
| Graph-Based MCTS | ~90.0% | ~250 | Graph representation of search space; Shared intermediate detection | Computational overhead with large graphs |

The AOT*-inspired neurosymbolic approach demonstrates superior performance, solving approximately three more retrosynthetic tasks than EG-MCTS and 2.9 more tasks than PDVN under a 500-iteration limit [4]. This performance advantage stems from its ability to abstract and reuse synthetic patterns, which becomes increasingly valuable when processing multiple similar molecules. The framework identifies that reusable synthesis patterns lead to progressively decreasing marginal inference time as the algorithm processes more molecules, creating an efficiency gain that compounds across similar planning tasks [4].

Convergent Retrosynthesis Planning for Compound Libraries

Another significant advancement in AND-OR search applications addresses the practical need in medicinal chemistry to synthesize libraries of related compounds rather than individual molecules. Traditional retrosynthesis approaches generally focus on single targets, but convergent retrosynthesis planning extends AND-OR search to multiple targets simultaneously, prioritizing routes applicable to all target molecules where possible [28].

This approach uses a graph-based representation rather than a tree structure, allowing it to identify common intermediates shared across multiple target molecules. When applied to industry data, this method demonstrated that over 70% of all reactions are involved in convergent synthesis, covering over 80% of all projects in Johnson & Johnson Electronic Laboratory Notebook data [28]. The graph-based multi-step approach can produce convergent retrosynthesis routes for up to hundreds of molecules, identifying a singular convergent route for multiple compounds in most compound sets [28].
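Finding common intermediates amounts to merging per-target routes and counting how many targets each precursor serves. The sketch assumes each target's route has already been reduced to a set of intermediate identifiers (the names are hypothetical); it ranks candidates for convergence but does not perform the graph-based multi-step search of [28] itself.

```python
from collections import Counter


def shared_intermediates(routes_by_target, min_targets=2):
    """Rank intermediates by how many targets' routes use them.

    routes_by_target: dict mapping each target to the set of intermediates
    in a route found for it. Merging routes this way, rather than keeping one
    tree per target, is what exposes precursors common to several targets.
    """
    counts = Counter()
    for intermediates in routes_by_target.values():
        counts.update(set(intermediates))
    return [(m, n) for m, n in counts.most_common() if n >= min_targets]


# Hypothetical library of three analogues sharing a core intermediate.
library = {
    "analog_1": {"core_amine", "boronate_A"},
    "analog_2": {"core_amine", "acid_B"},
    "analog_3": {"core_amine", "boronate_A"},
}
print(shared_intermediates(library))  # [('core_amine', 3), ('boronate_A', 2)]
```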

Table 2: Performance of Convergent Retrosynthesis Planning on Industry Data

| Metric | Performance | Significance |
|---|---|---|
| Compound Solvability | >90% | Individual compound solvability remains high despite convergence requirement |
| Route Solvability | >80% | Percentage of test routes for which a convergent route could be identified |
| Simultaneous Compound Synthesis | +30% | Increase in compounds that can be synthesized simultaneously compared to individual search |
| Common Intermediate Utilization | Significant increase | Enhanced use of shared precursors across multiple target molecules |

Experimental Protocols and Benchmarking

Evaluation Methodologies for Retrosynthesis Algorithms

Robust evaluation of retrosynthesis algorithms requires standardized benchmarks and metrics. The computer-aided synthesis planning community has increasingly recognized the importance of consistent evaluation practices, leading to the development of benchmarking frameworks like syntheseus [8]. This Python library promotes best practices by default, enabling consistent evaluation of both single-step models and multi-step planning algorithms.

Key evaluation metrics include:

- Success Rate: The percentage of target molecules for which a complete synthesis route is found within a specified computational budget (e.g., iteration limit) [4] [8].

- Inference Time: The time required to find a solution, particularly important for practical applications [8].

- Route Diversity: The variety of chemically distinct pathways discovered, measured using monotonic metrics that ensure finding additional routes never causes diversity to decrease [8].

- Search Efficiency: Often measured in planning cycles or iterations needed to find the first workable route [4].

For the AOT*-inspired neurosymbolic approach, evaluation typically involves comparison against baseline algorithms on standardized datasets like Retro*-190, which contains 190 challenging molecules for retrosynthesis planning [4]. Experiments are run multiple times (e.g., 10 independent trials) to account for stochastic elements in the search process, with success rates and computational requirements averaged across these trials [4].
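The protocol above (success within an iteration budget, averaged over repeated trials) can be summarized with a few helper functions. The input format, a per-target iteration of first solution or `None`, is an assumption, and `distinct_route_count` is a deliberately simple monotonic diversity proxy, not the diversity metric implemented in syntheseus.

```python
from statistics import mean, stdev


def success_rate(first_solution_iters, budget):
    """Share of targets whose first route was found within the iteration budget.

    first_solution_iters: per target, the iteration at which the first
    complete route appeared, or None if the search never found one.
    """
    solved = [it is not None and it <= budget for it in first_solution_iters]
    return sum(solved) / len(solved)


def summarize_trials(trials, budget=500):
    """Mean and sample standard deviation of success rate over independent trials."""
    rates = [success_rate(t, budget) for t in trials]
    return mean(rates), (stdev(rates) if len(rates) > 1 else 0.0)


def distinct_route_count(routes):
    """A simple monotonic diversity proxy: routes are compared as unordered sets
    of reactions, so adding a route can never lower the count."""
    return len({frozenset(route) for route in routes})


# Toy logs for four targets over two trials (illustrative, not benchmark data).
trials = [[10, 250, None, 600], [12, 300, 480, None]]
rate_mean, rate_sd = summarize_trials(trials)
print(rate_mean)  # 0.625
print(distinct_route_count([["r1", "r2"], ["r2", "r1"], ["r3"]]))  # 2
```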

Syntheseus Benchmarking Framework

The syntheseus library addresses several pitfalls in previous retrosynthesis evaluation practices, including inconsistent implementations and non-comparable metrics [8]. It provides:

- Model-agnostic and algorithm-agnostic evaluation infrastructure

- Support for both single-step and multi-step retrosynthesis algorithms

- Standardized implementation of common benchmarks (USPTO-50K for single-step, Retro*-190 for multi-step)

- Monotonic diversity metrics that properly reflect algorithm performance

When syntheseus was used to re-evaluate several existing retrosynthesis algorithms, it revealed that the ranking of state-of-the-art models can change under controlled evaluation conditions, highlighting the importance of consistent benchmarking practices [8].

Visualization of Algorithmic Frameworks

AND-OR Tree Structure for Retrosynthesis

The following diagram illustrates the fundamental AND-OR tree structure used in retrosynthesis planning, showing how target molecules decompose through reactions into precursors:

AND-OR Tree for Retrosynthesis Planning

Neurosymbolic AOT*-Inspired Framework Workflow

The following diagram visualizes the three-phase wake-abstraction-dreaming cycle of the neurosymbolic AOT*-inspired framework:

AOT*-Inspired Neurosymbolic Framework Cycle

Essential Research Reagents and Computational Tools

Table 3: Research Reagent Solutions for Retrosynthesis Algorithm Development

| Tool/Resource | Type | Function | Application in AOT* |
|---|---|---|---|
| USPTO Datasets | Chemical Reaction Data | Provides standardized reaction data for training and evaluation | Training neural network models; Validating template extraction |
| Syntheseus Library | Benchmarking Framework | Enables consistent evaluation of retrosynthesis algorithms | Comparing performance against baseline methods; Ensuring reproducible results |
| Abstract Template Library | Algorithm Component | Stores discovered multi-step reaction patterns | Accelerating search for similar molecules; Encoding chemical knowledge |
| Graph Representation | Data Structure | Enables convergent route identification across multiple targets | Finding shared intermediates; Efficiently representing chemical space |
| Single-Step Retrosynthesis Models | ML Model | Proposes plausible reactants for a given product | Core expansion mechanism in AND-OR tree search |
| Purchasable Building Block Sets | Chemical Database | Defines search termination criteria | Ensuring practical synthetic routes; Commercial availability checking |

AND-OR tree search algorithms, particularly those incorporating AOT*-inspired neurosymbolic approaches, represent a significant advancement in retrosynthesis planning capability. By combining the explicit reasoning of symbolic systems with the pattern recognition of neural networks, these frameworks achieve higher success rates and greater computational efficiency, especially when planning synthesis for groups of similar molecules. The three-phase wake-abstraction-dreaming cycle enables continuous improvement through pattern extraction and model refinement.

Future research directions include improving the scalability of these approaches to handle increasingly complex molecules, enhancing the diversity of discovered routes, and better integrating practical synthetic considerations such as cost, safety, and environmental impact. As benchmarking practices mature through frameworks like syntheseus, and as convergent synthesis approaches address the practical needs of medicinal chemistry, AND-OR search algorithms are poised to become increasingly valuable tools in accelerating drug discovery and development.

Retrosynthesis planning, the process of deconstructing a target molecule into feasible precursor reactants, is a foundational task in organic chemistry and drug development [10]. While artificial intelligence (AI) has dramatically accelerated this process, many deep-learning models operate as "black boxes," providing high-quality predictions but few insights into their decision-making process [6]. This lack of transparency limits the reliability and practical adoption of AI tools in experimental research, where understanding the rationale behind a proposed synthetic route is as crucial as the route itself.

RetroExplainer represents a paradigm shift in this landscape. It formulates retrosynthesis as a molecular assembly process composed of several retrosynthetic actions guided by deep learning [6]. The framework not only achieves state-of-the-art performance but also provides quantitative interpretability. It gives researchers transparent decision-making and substructure-level insights that bridge the gap between computational predictions and chemical intuition.

Performance Comparison: RetroExplainer vs. State-of-the-Art Alternatives

To objectively assess RetroExplainer's capabilities, we compare its performance against other leading retrosynthesis models across standard benchmark datasets. The evaluation is primarily based on top-k exact-match accuracy, which measures whether the model's predicted reactants exactly match the ground truth reactants within the top k suggestions.
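A minimal sketch of the metric follows. A prediction counts as a hit if the ground-truth reactant set appears anywhere among the model's first k ranked suggestions. The sketch assumes every SMILES string is already canonical; in practice this is typically done with a toolkit such as RDKit. Under that assumption it only makes reactant sets order-independent by sorting their "."-separated components. The toy SMILES are illustrative, not benchmark data.

```python
from typing import Sequence


def normalize(reactants: str) -> str:
    """Make a reactant set order-independent by sorting its '.'-separated parts.

    Assumes each part is already canonical SMILES.
    """
    return ".".join(sorted(reactants.split(".")))


def top_k_accuracy(predictions: Sequence[Sequence[str]],
                   ground_truth: Sequence[str], k: int) -> float:
    """Fraction of reactions whose true reactant set is among the first k predictions."""
    hits = 0
    for ranked, truth in zip(predictions, ground_truth):
        target = normalize(truth)
        if any(normalize(candidate) == target for candidate in ranked[:k]):
            hits += 1
    return hits / len(ground_truth)


# Toy example: three products, each with a ranked list of predicted reactant sets.
preds = [
    ["CCO.CC(=O)O", "CCO"],
    ["c1ccccc1Br", "c1ccccc1I.O"],
    ["CN", "CC"],
]
truth = ["CC(=O)O.CCO", "c1ccccc1I.O", "N"]
print(top_k_accuracy(preds, truth, 1), top_k_accuracy(preds, truth, 2))
```

Because a hit at rank k is also a hit at every larger k, top-k accuracy can only stay the same or rise as k grows. This is why every row in the tables increases from left to right.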

Table 1: Performance Comparison on USPTO-50K Dataset (Reaction Class Known)

| Model | Type | Top-1 Accuracy | Top-3 Accuracy | Top-5 Accuracy | Top-10 Accuracy |
|---|---|---|---|---|---|
| RetroExplainer | Molecular Assembly | 56.0% | 75.8% | 81.6% | 86.2% |
| LocalRetro | Graph-based | 52.2% | 70.8% | 76.7% | 86.4% |
| R-SMILES | Sequence-based | 51.1% | 70.0% | 76.3% | 83.2% |
| G2G | Graph-based | 48.9% | 67.6% | 72.5% | 75.5% |
| GraphRetro | Graph-based | 45.7% | 60.2% | 63.6% | 66.4% |
| Transformer | Sequence-based | 43.7% | 60.0% | 65.2% | 68.7% |

Table 2: Performance Comparison on USPTO-50K Dataset (Reaction Class Unknown)

| Model | Type | Top-1 Accuracy | Top-3 Accuracy | Top-5 Accuracy | Top-10 Accuracy |
|---|---|---|---|---|---|
| RetroExplainer | Molecular Assembly | 53.2% | 72.1% | 78.0% | 83.1% |
| R-SMILES | Sequence-based | 50.3% | 69.1% | 75.2% | 84.1% |
| LocalRetro | Graph-based | 46.3% | 62.6% | 67.8% | 73.4% |
| G2G | Graph-based | 39.4% | 55.1% | 59.8% | 63.8% |
| GraphRetro | Graph-based | 37.1% | 50.9% | 54.7% | 58.1% |
| Transformer | Sequence-based | 35.6% | 51.2% | 56.9% | 61.0% |

The data show that RetroExplainer is competitive with, and often better than, the other models [6]. It leads at top-1, top-3, and top-5 in both settings. It also has the highest accuracy averaged over top-1, top-3, top-5, and top-10. At top-10, however, it is narrowly overtaken by LocalRetro when the reaction class is known (86.4% vs. 86.2%) and by R-SMILES when it is unknown (84.1% vs. 83.1%). Its strong performance in the "reaction class unknown" setting is particularly significant. That setting better reflects real-world conditions, where the required reaction type is not pre-specified.
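The claim about averaged accuracy is simple arithmetic over Table 1 and can be checked directly. The sketch below copies the Table 1 values (reaction class known), averages each model's four top-k accuracies, and finds the leader at each individual cut-off. An unweighted mean is assumed as the averaging rule.

```python
# Top-1/3/5/10 accuracies (%) copied from Table 1 (USPTO-50K, reaction class known).
TABLE_1 = {
    "RetroExplainer": [56.0, 75.8, 81.6, 86.2],
    "LocalRetro": [52.2, 70.8, 76.7, 86.4],
    "R-SMILES": [51.1, 70.0, 76.3, 83.2],
    "G2G": [48.9, 67.6, 72.5, 75.5],
    "GraphRetro": [45.7, 60.2, 63.6, 66.4],
    "Transformer": [43.7, 60.0, 65.2, 68.7],
}
K_VALUES = [1, 3, 5, 10]

# Unweighted mean accuracy over the four cut-offs, per model.
averages = {model: sum(accs) / len(accs) for model, accs in TABLE_1.items()}
best_overall = max(averages, key=averages.get)

# Leader at each individual cut-off.
leaders = {k: max(TABLE_1, key=lambda m: TABLE_1[m][i]) for i, k in enumerate(K_VALUES)}

print(best_overall, round(averages[best_overall], 2))
print(leaders)
```

RetroExplainer's mean is 74.9%, against 71.5% for LocalRetro. The per-cut-off leaders confirm the single exception at top-10. Substituting the Table 2 values gives the same overall ranking leader, with R-SMILES leading at top-10.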

Beyond these established models, the field is advancing rapidly. RSGPT, a generative transformer pre-trained on 10 billion generated data points, reports a top-1 accuracy of 63.4% on USPTO-50K [7]. RetroDFM-R, a reasoning-driven large language model, reports a top-1 accuracy of 65.0% on the same benchmark [29]. These models push accuracy higher through large-scale data generation and advanced reasoning techniques. RetroExplainer, by contrast, remains notable for combining strong accuracy with its interpretability features.

RetroExplainer's Architectural Innovation and Experimental Protocol

Core Methodology: The Molecular Assembly Framework

RetroExplainer's performance stems from its innovative architecture, specifically designed to address limitations in existing sequence-based and graph-based approaches. Its methodology can be broken down into three core units:

- Multi-Sense and Multi-Scale Graph Transformer (MSMS-GT): This unit overcomes the limitations of traditional GNNs (which focus on local structures) and sequence-based models (which lose structural information) by capturing both local molecular structures and long-range atomic interactions within molecules [6].

- Structure-Aware Contrastive Learning (SACL): This component enhances the model's ability to capture and retain essential molecular structural information during the learning process [6].

- Dynamic Adaptive Multi-Task Learning (DAMT): This unit balances the optimization of multiple objectives during training, ensuring that no single task dominates at the expense of others, leading to more robust overall performance [6].
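The balancing idea behind DAMT can be illustrated with a generic dynamic task-weighting scheme. The sketch below swaps in Dynamic Weight Average (DWA), a published multi-task weighting technique, as a stand-in. RetroExplainer's actual DAMT formula may differ, and the task names are hypothetical. DWA gives each task a weight based on how fast its loss fell over the last two epochs. Tasks whose loss is falling slowly receive more weight, so no single fast-converging objective dominates training.

```python
import math
from typing import Dict, List


def dynamic_weights(loss_history: Dict[str, List[float]],
                    temperature: float = 2.0) -> Dict[str, float]:
    """Dynamic Weight Average: up-weight tasks whose loss is falling most slowly.

    loss_history maps each task to its per-epoch losses (at least two entries).
    The returned weights sum to the number of tasks.
    """
    # Ratio of the latest loss to the previous one: near 1.0 means slow progress.
    rates = {task: hist[-1] / hist[-2] for task, hist in loss_history.items()}
    exps = {task: math.exp(r / temperature) for task, r in rates.items()}
    total = sum(exps.values())
    n_tasks = len(loss_history)
    return {task: n_tasks * e / total for task, e in exps.items()}


def combined_loss(current: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of the current per-task losses used for the next update."""
    return sum(weights[task] * current[task] for task in current)


# Hypothetical tasks: one is improving quickly, the other has stalled.
history = {"reaction_center": [1.0, 0.5], "leaving_group": [1.0, 1.0]}
w = dynamic_weights(history)
print(w)
```

The temperature controls how sharply the weights react. Higher values push all weights toward 1.0, which recovers plain, unweighted multi-task training.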

The "molecular assembly process" itself is an energy-based approach that breaks down the retrosynthesis prediction into a series of interpretable, discrete actions. This process generates an energy decision curve, providing visibility into each stage of the prediction and allowing for substructure-level attribution [6].
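The general idea of reading an energy decision curve can be shown with a toy example. This is a hypothetical illustration, not RetroExplainer's model. Each candidate reactant set is assumed to be built by a sequence of discrete actions, each carrying an energy contribution where lower is more favorable. The running sum of those energies forms the candidate's decision curve. Candidates are ranked by final energy, and the most negative single step serves as a crude substructure-level attribution. The action labels and energies are invented for illustration.

```python
from dataclasses import dataclass
from itertools import accumulate
from typing import Dict, List


@dataclass
class Action:
    label: str     # hypothetical retrosynthetic action, e.g. "break C-N bond"
    energy: float  # hypothetical energy contribution; lower means more favorable


def decision_curve(actions: List[Action]) -> List[float]:
    """Cumulative energy after each action: one candidate's energy decision curve."""
    return list(accumulate(a.energy for a in actions))


def rank_candidates(candidates: Dict[str, List[Action]]) -> List[str]:
    """Order candidate reactant sets by final cumulative energy (lowest first)."""
    return sorted(candidates, key=lambda name: decision_curve(candidates[name])[-1])


def most_decisive_action(actions: List[Action]) -> Action:
    """Attribute a prediction to the single action with the most negative energy."""
    return min(actions, key=lambda a: a.energy)


candidates = {
    "reactant_set_1": [Action("break C-N bond", -1.0),
                       Action("attach leaving group", -0.5),
                       Action("adjust charge", 0.25)],
    "reactant_set_2": [Action("break C-O bond", -0.5),
                       Action("attach leaving group", -0.25)],
}
for name in rank_candidates(candidates):
    print(name, decision_curve(candidates[name]))
```

Inspecting the curve step by step shows where a candidate gained or lost favorability. This is the kind of stage-wise visibility the text describes, although the real model's energies come from learned networks.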

Experimental Workflow and Robustness Evaluation

The experimental validation of RetroExplainer followed rigorous protocols to ensure fair comparison and assess real-world applicability.

Table 3: Key Experimental Datasets and Protocols

| Dataset | Size | Splitting Method | Evaluation Metric |
|---|---|---|---|
| USPTO-50K | 50,000 reactions | Random split by previous studies [6] | Top-k exact-match accuracy |
| USPTO-FULL | ~1.9 million reactions | Random split by previous studies [6] | Top-k exact-match accuracy |
| USPTO-MIT | 479,035 reactions | Random split by previous studies [6] | Top-k exact-match accuracy |
| USPTO-50K Similarity Splits | 50,000 reactions | Tanimoto similarity threshold (0.4, 0.5, 0.6) [6] | Top-k exact-match accuracy |

A critical aspect of the evaluation addressed the scaffold evaluation bias present in random dataset splits, where very similar molecules in training and test sets can lead to inflated performance metrics [6]. To validate true robustness, researchers employed Tanimoto similarity splitting methods, creating nine more challenging test scenarios with varying similarity thresholds (0.4, 0.5, 0.6) between training and test molecules [6]. RetroExplainer maintained strong performance across these challenging splits, demonstrating its ability to generalize to novel molecular scaffolds rather than merely memorizing training examples.
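One plausible way to build such a split is sketched below, with fingerprints represented as sets of "on" bit indices, as produced by ECFP-style fingerprinting. The Tanimoto similarity of two bit sets is the size of their intersection divided by the size of their union. A candidate test molecule is kept only if its maximum similarity to every training molecule falls below the threshold. The fingerprints and names are toy values. The exact procedure used to produce the nine RetroExplainer scenarios may differ.

```python
from typing import Dict, FrozenSet, List

Fingerprint = FrozenSet[int]  # indices of "on" bits, e.g. from an ECFP-style fingerprint


def tanimoto(a: Fingerprint, b: Fingerprint) -> float:
    """Tanimoto similarity: size of intersection divided by size of union."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def similarity_filtered_test_set(train: List[Fingerprint],
                                 candidates: Dict[str, Fingerprint],
                                 threshold: float) -> List[str]:
    """Keep candidates whose maximum similarity to any training molecule is below threshold."""
    kept = []
    for name, fp in candidates.items():
        if max(tanimoto(fp, t) for t in train) < threshold:
            kept.append(name)
    return kept


train = [frozenset({1, 2, 3, 4}), frozenset({10, 11})]
candidates = {
    "A": frozenset({1, 2, 3, 5}),  # similarity 0.6 to the first training molecule
    "B": frozenset({20, 21, 22}),  # shares no bits with the training set
    "C": frozenset({10, 11, 12}),  # similarity ~0.67 to the second training molecule
}
print(similarity_filtered_test_set(train, candidates, 0.5))
print(similarity_filtered_test_set(train, candidates, 0.65))
```

Lowering the threshold removes more near-duplicates of training molecules, leaving a smaller but harder test set. This is why accuracy on stricter splits is a better indicator of generalization than accuracy on random splits.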

For multi-step synthesis planning, RetroExplainer was integrated with the Retro* algorithm to plan synthetic routes for 101 complex drug molecules [6]. The proposed routes were checked against the literature using the SciFinderⁿ search engine. Of the proposed single-step reactions, 86.9% corresponded to reactions reported in the literature, underscoring the practical utility of the predictions [6].

Figure 1: RetroExplainer's Core Workflow. The model processes a target molecule through specialized modules for representation learning and outputs reactants via an interpretable molecular assembly process.

Successful implementation and evaluation of retrosynthesis models require access to both computational resources and chemical data.

Table 4: Key Research Reagents and Computational Resources

| Resource Name | Type | Function/Purpose | Availability |
|---|---|---|---|
| USPTO Datasets | Chemical Reaction Data | Provides standardized benchmark data for training and evaluating retrosynthesis models [6] [7] | Publicly available |
| RDChiral | Algorithm/Tool | Template extraction algorithm used to generate chemical reaction data and validate reactant plausibility [7] | Open source |
| AiZynthFinder | Software Tool | Template-based retrosynthetic planning tool used for validation and generating training data for accessibility scores [30] | Open source |
| RAscore | Evaluation Metric | Machine learning-based classifier that rapidly estimates synthetic feasibility, useful for pre-screening virtual compounds [30] | Open source |
| SciFinderⁿ | Chemical Database | Used for verification of predicted reactions against reported literature, validating real-world applicability [6] | Commercial |
| ECFP6 Fingerprints | Molecular Representation | Extended-connectivity fingerprints with radius 3; used as feature inputs for various machine learning models in chemistry [30] | Open source (RDKit) |

RetroExplainer establishes a compelling paradigm in retrosynthesis prediction by balancing state-of-the-art performance with a high degree of interpretability. Its molecular assembly process gives researchers transparent, quantifiable insight into the rationale behind each prediction, moving beyond the "black box" limitations of previous approaches.

The comparative analysis shows that newer models such as RSGPT and RetroDFM-R report higher top-1 accuracy on USPTO-50K: 63.4% and 65.0%, respectively, compared with 53.2% to 56.0% for RetroExplainer in Tables 1 and 2. They achieve this by leveraging massive synthetic data and reinforcement learning. RetroExplainer nonetheless remains highly competitive, particularly when its analytical transparency is taken into account [7] [29]. For drug development professionals and researchers, this interpretability is invaluable, fostering trust and enabling deeper chemical insight.

Future progress in the field will likely involve integrating the strengths of these diverse approaches: the explainable, assembly-based reasoning of RetroExplainer, the massive data utilization capabilities of models like RSGPT, and the advanced chain-of-thought reasoning emerging in LLM-based systems [29]. As these technologies mature, the focus will increasingly shift toward practical metrics like pathway success rates in laboratory validation and integration with high-throughput experimental platforms, ultimately accelerating the design and synthesis of novel therapeutic compounds.

Retrosynthesis planning is a foundational process in organic chemistry, in which target molecules are deconstructed into simpler precursor molecules through a series of theoretical reaction steps. This methodical breakdown continues until readily available starting materials are identified. Traditionally, this complex task has relied exclusively on the expertise and intuition of highly skilled chemists. However, the exponential growth of chemical space and the increasing complexity of target molecules, particularly in pharmaceutical development, have necessitated computational assistance. Computer-aided synthesis planning (CASP) systems have emerged as indispensable tools for navigating this complexity [31].

Contemporary CASP methodologies can be broadly categorized into three paradigms: rule-based systems, data-driven/machine learning systems, and hybrid systems. Rule-based expert systems, an early approach pioneered by Corey et al. in 1972, rely on a foundation of manually curated reaction and selectivity rules derived from chemical knowledge [32]. These systems encode human expertise in a machine-readable format, enabling logical deduction of potential synthetic pathways. In contrast, purely data-driven or machine learning models, such as Sequence-to-Sequence Transformers and Graph Neural Networks (GNNs), learn reaction patterns directly from large databases of known reactions without pre-defined rules [32] [3]. While these models can uncover subtle, data-driven patterns, they often function as "black boxes" and can struggle to generate chemically feasible or novel reactions [32].

Hybrid systems seek to combine the strengths of both approaches. SYNTHIA (formerly known as Chematica) is a prominent example, integrating a vast network of hand-coded reaction rules with machine learning and quantum mechanical methods to optimize its search and evaluation functions [31]. This guide provides a comparative analysis of SYNTHIA's performance against other retrosynthesis tools, underpinned by experimental data and detailed methodology.

System Architectures and Methodologies

SYNTHIA's Hybrid Architecture