Strategies for Mitigating Spatial Bias in Microtiter Plate Reactions: From Detection to Correction


Abstract

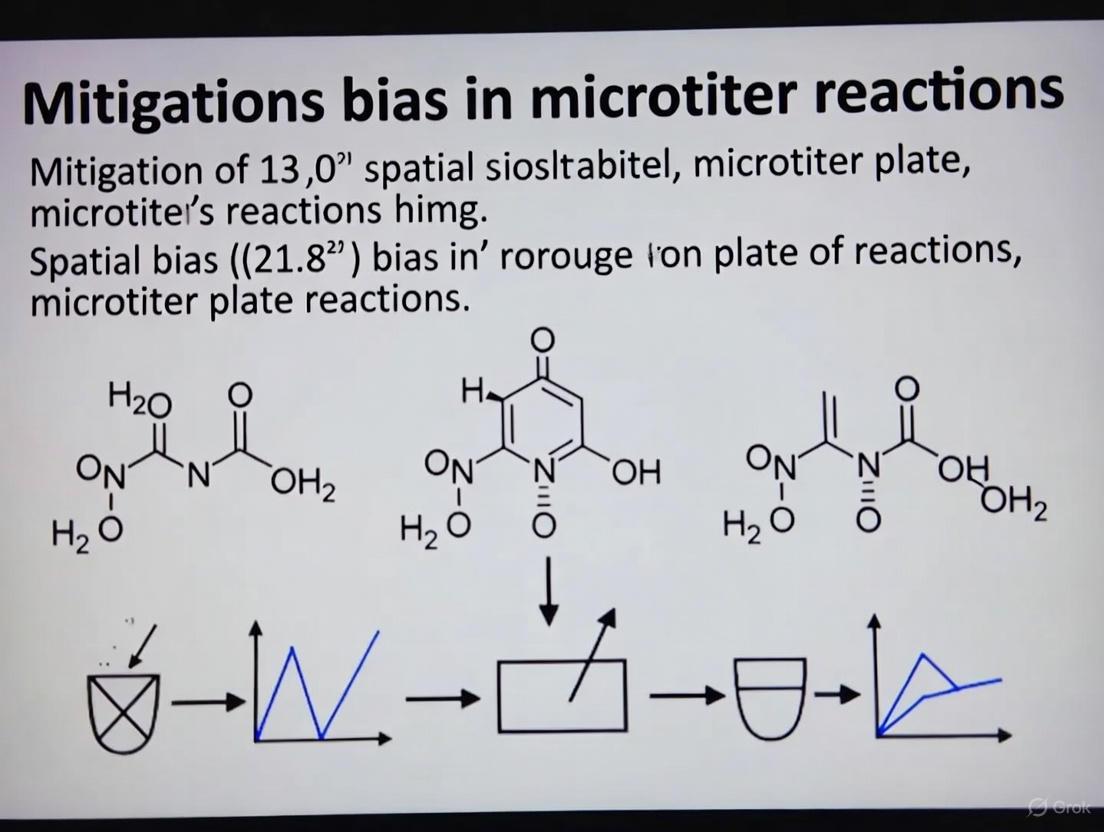

Spatial bias presents a significant challenge in microtiter plate-based assays, potentially compromising data quality and leading to increased false positives and negatives in high-throughput screening campaigns. This comprehensive article addresses the critical need for effective bias mitigation strategies tailored for researchers, scientists, and drug development professionals. Covering the full scope from foundational concepts to advanced validation techniques, we explore the common sources of spatial bias including edge effects, evaporation gradients, liquid handling inconsistencies, and meniscus formation. The content provides practical methodological approaches for bias detection and correction, including statistical normalization techniques, experimental design modifications, and specialized hardware solutions. Through troubleshooting guidance and comparative analysis of correction methods, this resource equips scientists with the knowledge to implement robust quality control measures, ultimately enhancing data reliability and decision-making in drug discovery pipelines.

Understanding Spatial Bias: Sources and Impact on Microtiter Plate Data Quality

Defining Spatial Bias in Microtiter Plate Assays

FAQs: Understanding Spatial Bias

What is spatial bias in microtiter plate assays? Spatial bias is a systematic error in microtiter plate-based assays where the raw signal measurements are not uniform across all regions of the plate. This variability is often caused by factors such as reagent evaporation, temperature gradients, uneven heating or cooling, cell decay, pipetting errors, and inconsistencies in incubation times or measurement timing across the plate [1] [2]. These effects often manifest as row or column patterns, with the edge wells (especially on the outer perimeter) being most frequently affected [2].

Why is identifying and correcting spatial bias critical in research? Uncorrected spatial bias severely impacts data quality and can lead to both false positive and false negative results during the hit identification process in screening campaigns like drug discovery [2]. It distorts the true biological or chemical signal, compromising the reliability of the data and leading to inaccurate conclusions. This can increase the length and cost of research projects by pursuing incorrect leads or missing genuine effects [2]. Proper mitigation is therefore essential for data integrity.

How can I detect spatial bias in my assay data? Spatial bias can be detected through visual inspection of plate heat maps, which often reveal clear patterns such as row, column, or edge effects [2]. Statistical measures complement visual inspection: the B-score, which is built on the residuals of a row-and-column median polish, helps expose row and column effects, and the Z'-factor summarizes how well the controls separate relative to their variability [3] [2]. A low Z'-factor can indicate significant well-to-well variation, often stemming from spatial bias [3].
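As a rough illustration of the Z'-factor, the sketch below uses its standard definition, Z' = 1 - 3(σ_pos + σ_neg) / |μ_pos - μ_neg|. The function name `z_prime` and the control readings are hypothetical and chosen only for the example.

```python
from statistics import mean, stdev

def z_prime(pos, neg):
    """Z'-factor: 1 - 3 * (sd_pos + sd_neg) / |mean_pos - mean_neg|."""
    separation = abs(mean(pos) - mean(neg))
    if separation == 0:
        raise ValueError("control means are identical; Z' is undefined")
    return 1.0 - 3.0 * (stdev(pos) + stdev(neg)) / separation

# Hypothetical control readings (arbitrary units), not data from any cited study.
pos_controls = [98.0, 102.0, 100.0, 101.0, 99.0]
neg_controls = [10.0, 12.0, 11.0, 9.0, 13.0]

zp = z_prime(pos_controls, neg_controls)
print(f"Z' = {zp:.3f}")
```

Because the two control groups are usually spread over different wells, position-dependent scatter in either group inflates the standard deviations and pulls Z' down.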

What are the main types of spatial bias? Research indicates that spatial bias can primarily fit one of two statistical models [2]:

- Additive Bias: A constant value is added to or subtracted from the measurements in specific well locations.

- Multiplicative Bias: The measurements in specific well locations are multiplied by a factor, causing a proportional increase or decrease.

The choice of correction method can depend on which type of bias is present in your data [2].

Are some plate areas more prone to bias than others? Yes, the outer rows and columns, particularly the edge wells, are notoriously prone to bias due to increased exposure to environmental fluctuations like evaporation and temperature changes [2]. This is often referred to as the "edge effect."

Troubleshooting Guides

Guide 1: Mitigating Edge Effects and Evaporation

Symptoms: Strong systematic differences between outer and inner wells; gradual signal drift from center to edge.

Possible Causes: Evaporation in edge wells; temperature gradients across the plate during incubation.

Solutions:

- Use a Lid or Sealing Film: Always use a lid or a high-quality, sealed film during incubation steps to minimize evaporation.

- Humidified Incubation: If possible, perform incubations in a humidified chamber to further reduce evaporation.

- Plate Randomization: Avoid placing all critical controls or samples on the edges. Use block randomization or predefined layouts that distribute treatments across the plate [1].

- Statistical Correction: Apply post-assay statistical correction methods, such as the B-score or the PMP (partial mean polish) algorithm, to remove systematic spatial patterns from the data [2].
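The B-score named in the last bullet can be sketched as a median polish followed by scaling with the median absolute deviation (MAD). This is a minimal pure-Python illustration, not a validated implementation: the function names, the fixed iteration count, the 1.4826 MAD scaling constant, and the simulated plate are all assumptions made for the example.

```python
from statistics import median

def median_polish(plate, n_iter=10):
    """Repeatedly subtract row medians, then column medians; return residuals."""
    resid = [[float(v) for v in row] for row in plate]
    n_rows, n_cols = len(resid), len(resid[0])
    for _ in range(n_iter):
        for r in range(n_rows):
            m = median(resid[r])
            resid[r] = [v - m for v in resid[r]]
        for c in range(n_cols):
            m = median(resid[r][c] for r in range(n_rows))
            for r in range(n_rows):
                resid[r][c] -= m
    return resid

def b_scores(plate):
    """Median-polish residuals divided by the scaled MAD of the plate residuals."""
    resid = median_polish(plate)
    flat = [v for row in resid for v in row]
    center = median(flat)
    mad = 1.4826 * median(abs(v - center) for v in flat)
    if mad == 0:
        raise ValueError("residual MAD is zero; B-score is undefined")
    return [[v / mad for v in row] for row in resid]

# Simulated 4 x 6 plate (hypothetical numbers): elevated edge rows and columns,
# small deterministic noise, and one strong inhibitor at row 1, column 2.
row_effect = [5, 0, 0, 5]
col_effect = [3, 0, 0, 0, 0, 3]
plate = [[100 + row_effect[r] + col_effect[c] + ((2 * r + 3 * c) % 5) - 2
          for c in range(6)] for r in range(4)]
plate[1][2] -= 40

scores = b_scores(plate)
for row in scores:
    print(" ".join(f"{v:7.2f}" for v in row))
```

In the printed grid, the edge offsets are absorbed by the row and column medians, so the well at row 1, column 2 should stand out as the only strongly negative score.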

Guide 2: Correcting for Liquid Handling Inconsistencies

Symptoms: Systematic row-wise or column-wise patterns.

Possible Causes: Miscalibrated or malfunctioning pipettes; uneven dispensing or aspiration by automated liquid handlers.

Solutions:

- Regular Calibration: Implement a strict schedule for the calibration and maintenance of all pipettes and liquid handling robots.

- Dye Tests: Perform uniformity tests using a colored dye to visualize dispensing patterns and identify problematic channels.

- Use of Controls: Distribute positive and negative controls across the entire plate to help identify and correct for positional trends [3].

Guide 3: Addressing Cell-Based Assay Inconsistencies

Symptoms: Uneven cell growth or response, particularly in specific plate regions.

Possible Causes: Temperature gradients in incubators; uneven coating of plate surfaces.

Solutions:

- Keep Plates Level: Verify that plates sit level during incubation so that media does not pool toward one side of the wells.

- Validate Coating Uniformity: Confirm that surface coatings (e.g., poly-L-lysine, collagen) are applied uniformly across all wells.

- Pre-warm Media: Pre-warm culture media before adding to cells to prevent temperature shock that can affect adhesion and growth.

Experimental Protocols for Bias Mitigation

Protocol: Block Randomization Scheme for Plate Layout

This protocol outlines a method to mitigate positional bias by strategically placing samples and standards, rather than using a completely random or simplistic layout [1].

Principle: The block randomization scheme coordinates the placement of specific curve regions (e.g., standard concentrations in an ELISA) into pre-defined blocks on the plate. This design is based on assumptions about the distribution of assay bias and variability, ensuring that no single treatment group is consistently exposed to more favorable or unfavorable plate positions [1].

Procedure:

- Define Blocks: Divide the microtiter plate into logical blocks (e.g., 2x2 or 4x3 well groups).

- Assign Treatments to Blocks: Assign your experimental treatments, controls, and standard curve concentrations to these blocks in a randomized fashion. The randomization should ensure that each block is representative of the entire experimental condition set.

- Replicate Across Blocks: Replicate critical measurements across different blocks to ensure that the effect of a treatment is not confounded by its position on the plate.

Expected Outcomes: Implementation of this scheme in a sandwich ELISA demonstrated a reduction in mean bias of relative potency estimates from 6.3% to 1.1% and a decrease in imprecision from 10.2% to 4.5% CV [1].
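The procedure above can be sketched as a generic randomized block layout in which every block contains each treatment once, in a shuffled order. This is only one plausible reading of the steps, not the specific scheme evaluated in [1]; the function name, the 2 x 3 block size, and the treatment labels are assumptions made for illustration.

```python
import random

def block_randomized_layout(treatments, n_rows=8, n_cols=12,
                            block_rows=2, block_cols=3, seed=0):
    """Fill each block of the plate with one shuffled copy of the treatment list."""
    if block_rows * block_cols != len(treatments):
        raise ValueError("block size must equal the number of treatments")
    if n_rows % block_rows or n_cols % block_cols:
        raise ValueError("blocks must tile the plate exactly")
    rng = random.Random(seed)  # fixed seed so the layout is reproducible
    layout = [[None] * n_cols for _ in range(n_rows)]
    for r0 in range(0, n_rows, block_rows):
        for c0 in range(0, n_cols, block_cols):
            order = list(treatments)
            rng.shuffle(order)
            wells = [(r0 + dr, c0 + dc)
                     for dr in range(block_rows) for dc in range(block_cols)]
            for (r, c), treatment in zip(wells, order):
                layout[r][c] = treatment
    return layout

# Hypothetical treatment set for a 96-well plate (16 blocks of 6 wells).
treatments = ["STD1", "STD2", "STD3", "STD4", "SAMPLE", "BLANK"]
layout = block_randomized_layout(treatments)
for row in layout:
    print(" ".join(f"{t:>6}" for t in row))
```

Because each treatment appears once per block, every treatment is replicated in all regions of the plate, so no single treatment sits only on the edges or only in the center.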

Protocol: Identification and Correction Using Additive/Multiplicative PMP Algorithm

This protocol uses a statistical approach to correct for both assay-wide and plate-specific spatial biases, which can be either additive or multiplicative [2].

Principle: The method involves identifying the pattern of spatial bias on each plate and then applying a correction based on whether the bias is best modeled as an additive or multiplicative effect.

Procedure:

- Data Collection: Run your assay and collect raw data from all wells.

- Pattern Recognition: For each plate, analyze the data to determine the number of biased rows and columns and the strength of the bias.

- Model Selection: Statistically determine whether the bias follows an additive or multiplicative model for each plate.

- Apply Correction:

  - For an additive bias, subtract the estimated row and column effects from the raw measurements.

  - For a multiplicative bias, divide the raw measurements by the estimated row and column effects.

- Normalize Data: Follow the plate-specific correction with a robust Z-score normalization across the entire assay to correct for assay-wide spatial effects.

Expected Outcomes: This combined method (PMP + robust Z-scores) has been shown through simulation to yield a higher true positive hit detection rate and a lower total count of false positives and false negatives compared to methods like B-score or Well Correction alone [2].
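The correction and normalization steps of this protocol can be sketched as follows. This is not the PMP algorithm from [2]: pattern recognition and model selection are omitted, and the row and column effects are assumed to have been estimated already. All function names and numbers are hypothetical.

```python
from statistics import median

def correct_additive(plate, row_eff, col_eff):
    """Subtract the estimated row and column offsets from each well."""
    return [[v - row_eff[r] - col_eff[c] for c, v in enumerate(row)]
            for r, row in enumerate(plate)]

def correct_multiplicative(plate, row_fac, col_fac):
    """Divide each well by the product of its row and column factors."""
    return [[v / (row_fac[r] * col_fac[c]) for c, v in enumerate(row)]
            for r, row in enumerate(plate)]

def robust_z(values):
    """Robust Z-score: (x - median) / (1.4826 * MAD). Assumes MAD is nonzero."""
    med = median(values)
    mad = 1.4826 * median(abs(v - med) for v in values)
    return [(v - med) / mad for v in values]

# Hypothetical 2 x 3 plates whose unbiased signal is 100 in every well.
row_eff, col_eff = [0.0, 8.0], [5.0, 0.0, -5.0]
additive_plate = [[100 + row_eff[r] + col_eff[c] for c in range(3)]
                  for r in range(2)]
row_fac, col_fac = [1.0, 1.25], [1.1, 1.0, 0.9]
multiplicative_plate = [[100 * row_fac[r] * col_fac[c] for c in range(3)]
                        for r in range(2)]

fixed_add = correct_additive(additive_plate, row_eff, col_eff)
fixed_mul = correct_multiplicative(multiplicative_plate, row_fac, col_fac)

# Robust Z-scores on a hypothetical set of corrected values with one outlier.
z = robust_z([1.0, 2.0, 3.0, 4.0, 100.0])
print(fixed_add, fixed_mul, [round(v, 2) for v in z])
```

The median and MAD are used instead of the mean and standard deviation so that a few strong hits do not distort the scale against which every well is scored.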

Data Presentation

Table 1: Comparison of Spatial Bias Correction Methods

| Method | Principle | Best For | Advantages | Limitations |

|---|---|---|---|---|

| Block Randomization [1] | Experimental design that distributes treatments in predefined blocks across the plate. | All assay types, particularly dose-response curves (e.g., ELISA). | Proactive; reduces bias at the experimental design stage; improves accuracy and precision of potency estimates. | Requires careful pre-planning; does not correct for bias after data collection. |

| B-score [2] | A plate-specific correction that uses median polish to remove row and column effects. | High-Throughput Screening (HTS) data with row/column effects. | Well-established and widely used in HTS; effective for additive biases. | May not perform well with strong edge effects or multiplicative biases. |

| Well Correction [2] | An assay-specific correction that removes systematic error from biased well locations. | Assays with consistent bias patterns across all plates in an assay. | Corrects for systematic location-based errors common to an entire assay set. | Does not address plate-specific bias patterns. |

| Additive/Multiplicative PMP with Robust Z-scores [2] | A two-step method: plate-specific correction (additive or multiplicative) followed by assay-wide normalization. | Data with a mix of assay-specific and plate-specific biases, and either additive or multiplicative bias types. | Comprehensive; addresses multiple bias sources and types; shown to improve hit detection in simulations. | More complex to implement than simpler methods. |

Table 2: The Scientist's Toolkit - Essential Materials and Reagents

| Item | Function & Importance in Mitigating Bias |

|---|---|

| Optical Microplates [3] [4] | The foundation of the assay. Choice of material (e.g., PS, COP), color (clear, black, white), and well shape (flat, round) is critical for compatibility with detection mode and to minimize background (e.g., autofluorescence). |

| Plate Seals / Lids | Essential for reducing evaporation, a major cause of edge effect bias, during incubation steps. |

| Liquid Handling Systems [3] | Automated or manual pipettes must be precisely calibrated to ensure uniform reagent dispensing across all wells, preventing row/column bias. |

| Validated Assay Reagents | Using reagents with known performance and low variability (e.g., low autofluorescence) helps reduce well-to-well and lot-to-lot variability that can compound spatial bias [5]. |

| Positive & Negative Controls | Controls distributed across the plate are vital for identifying the presence and pattern of spatial bias and for normalizing data. |


Workflow and Relationship Diagrams

Spatial Bias Mitigation Workflow

Spatial Bias Causes and Solutions

Frequently Asked Questions

What is the "edge effect" in microplate assays? The "edge effect" refers to the phenomenon where wells located at the edges of a microplate yield different results compared to wells in the interior. This is caused by increased evaporation from edge wells, which leads to changes in reagent concentration, pH, and osmolarity. It can result in both higher and lower measured values and creates greater standard deviations, negatively impacting data reliability. [6]

What are the primary causes of the edge effect? The main causes are evaporation and temperature gradients across the plate. [6] Evaporation rates are higher in edge wells, particularly in incubators with high airflow or when plates are stacked. [7] Temperature gradients can form during incubation, especially in sensitive assays like PCR or cell culture. [8] [6]

Does the edge effect affect all types of assays? Yes, the edge effect can plague both biochemical assays (e.g., ELISA, targeted proteomics) and cell-based assays. [8] [6] It has been reported across all microplate formats, including 96-well, 384-well, and 1536-well plates. The effect is often more pronounced in plates with a higher number of wells due to their lower sample volumes. [6]

Can't I just avoid the problem by not using the edge wells? While leaving the outer wells empty is a common practice, it is an inefficient solution. This approach wastes a significant portion of the plate (e.g., 37.5% of a 96-well plate) and does not fully resolve the issue, as evaporation can still create a concentric gradient affecting the next rows inward. [7] A better approach is to implement strategies that allow the use of the entire plate. [7]
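The 37.5% figure can be checked with a few lines of arithmetic. The sketch below counts perimeter wells for the standard 96-, 384-, and 1536-well geometries; the function name `edge_fraction` is introduced here only for illustration.

```python
def edge_fraction(n_rows, n_cols):
    """Fraction of wells that lie on the outer perimeter of the plate."""
    total = n_rows * n_cols
    interior = max(n_rows - 2, 0) * max(n_cols - 2, 0)
    return (total - interior) / total

# Standard plate geometries as (rows, columns).
formats = {96: (8, 12), 384: (16, 24), 1536: (32, 48)}
for wells, (rows, cols) in formats.items():
    print(f"{wells}-well plate: {edge_fraction(rows, cols):.1%} of wells are on the edge")
```

For a 96-well plate this gives 36 of 96 wells, or 37.5%. The fraction shrinks for denser formats, although the source notes that the edge effect itself is often more pronounced there because of the smaller volumes.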

Troubleshooting Guide: Identifying and Mitigating the Edge Effect

The following table outlines common symptoms and their recommended solutions.

| Observed Problem | Potential Cause | Recommended Solutions |

|---|---|---|

| No or low signal amplification (PCR/qPCR) [9], inconsistent cell growth [7], or altered dose-response curves [10] | Sample evaporation from edge wells, changing concentration and reaction efficiency. [6] [9] | • Use an effective plate seal (e.g., silicone/PTFE cap mat, sealing tape). [8] [11]• Utilize low-evaporation lids. [6]• For PCR, ensure wells are not underfilled, leaving excessive headspace. [9] |

| Poor reproducibility & high well-to-well variability across the plate, even when controls appear normal. [8] [10] | Temperature gradients across the microplate during incubation, leading to uneven reaction rates. [8] [6] | • Ensure all reagents are at room temperature before addition. [12]• Validate heating devices for uniform heat distribution. [8]• Avoid stacking plates during incubation to ensure uniform air flow. [7] |

| High background or inconsistent signal in fluorescence/luminescence assays. [5] | Meniscus formation affecting light path, or uneven distribution of cells or precipitates within wells. [5] | • Use hydrophobic plates to reduce meniscus formation. [5]• Use well-scanning mode on plate readers to average signals across the well. [5]• For cell assays, set the focal height at the cell layer. [5] |

| Systematic spatial artifacts (e.g., column-wise striping) missed by control-based quality metrics. [10] | Liquid handling irregularities or position-dependent effects that only impact sample wells. [10] | • Implement advanced QC metrics like Normalized Residual Fit Error (NRFE) to detect systematic errors in sample wells. [10]• Use automated liquid handlers with calibrated performance. |

Experimental Protocol: Assessing and Ameliorating Intraplate Variation

The following methodology, adapted from a clinical proteomics study, provides a detailed framework for investigating the edge effect in your own assays. [8]

1. Objective: To evaluate intraplate variation (the "edge effect") in a high-throughput bottom-up proteomics workflow and test the efficacy of different heating methods and sealing techniques to ameliorate it. [8]

2. Experimental Setup

- Sample Type: Human plasma samples. [8]

- Sample Preparation: A standardized plasma digestion protocol is performed directly in multiwell plates, involving steps of denaturation, reduction, alkylation, and tryptic digestion. [8]

- Experimental Variables: The key to this protocol is testing different combinations of variables across multiple experiments. A previous study used the following design: [8]

| Experiment | Multiwell Plate | Heating Device | Sealing Method |
|---|---|---|---|
| 1 | Standard 700 μL plate | Incubator hood | Clear polystyrene lid + heat-resistant tape |
| 2 | Standard 700 μL plate | Incubator hood | Silicone/PTFE cap mat + lid + tape |
| 3 | Standard 700 μL plate | Grant water bath | Silicone/PTFE cap mat + lid + tape |
| 4 | Standard 700 μL plate | Dry bath with heating beads | Silicone/PTFE cap mat + lid + tape |
| 5 | Eppendorf twin.tec 250 μL plate | Thermal cycler | Flat capillary strips |
| 6 | Eppendorf twin.tec 250 μL plate | Thermal cycler | Flat capillary strips (with adjusted reagents) |

3. Data Acquisition and Analysis

- Analysis: Samples are analyzed using Liquid Chromatography coupled to tandem Mass Spectrometry (LC-MS/MS) with Multiple Reaction Monitoring (MRM) for precise quantification. [8]

- Data Normalization: Incorporate stable isotope-labeled surrogate standards into your assay. These standards are added at the beginning of sample preparation and their measured signals are used to normalize the target analyte signals, correcting for variations introduced during processing. [8]

- Quality Assessment: Compare the precision and accuracy of quantitative measurements between edge wells and interior wells for each experimental condition. Plates with effective mitigation strategies will show minimal difference between these positions. [8]
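The quality assessment step can be sketched as a comparison of the coefficient of variation (CV) between edge and interior wells. The plate values below are synthetic and the helper names are hypothetical; a real analysis would use the normalized analyte signals from each experimental condition.

```python
from statistics import mean, stdev

def is_edge(r, c, n_rows, n_cols):
    """True if the well lies in the first/last row or first/last column."""
    return r in (0, n_rows - 1) or c in (0, n_cols - 1)

def split_edge_interior(plate):
    """Return (edge_values, interior_values) for a rectangular plate."""
    n_rows, n_cols = len(plate), len(plate[0])
    edge, interior = [], []
    for r, row in enumerate(plate):
        for c, v in enumerate(row):
            (edge if is_edge(r, c, n_rows, n_cols) else interior).append(v)
    return edge, interior

def cv_percent(values):
    """Coefficient of variation in percent (sample standard deviation / mean)."""
    return 100.0 * stdev(values) / mean(values)

# Synthetic 4 x 6 plate: every well averages 100, but edge wells scatter by
# +/-10 while interior wells scatter by +/-1 (hypothetical numbers).
def demo_value(r, c, n_rows=4, n_cols=6):
    spread = 10.0 if is_edge(r, c, n_rows, n_cols) else 1.0
    return 100.0 + (spread if (r + c) % 2 == 0 else -spread)

plate = [[demo_value(r, c) for c in range(6)] for r in range(4)]
edge, interior = split_edge_interior(plate)
cv_edge, cv_interior = cv_percent(edge), cv_percent(interior)
print(f"edge CV = {cv_edge:.1f}%, interior CV = {cv_interior:.1f}%")
```

A large gap between the two CVs, as in this synthetic plate, points to an unresolved edge effect; a condition with effective sealing and heating should bring the two values close together.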

The Scientist's Toolkit: Key Reagent Solutions

This table lists essential materials for combating the edge effect, as cited in the experimental protocols. [8]

| Item | Function | Example from Literature |

|---|---|---|

| Silicone/PTFE Cap Mat | Provides a superior chemical-resistant and low-evaporation seal compared to standard lids and tape. [8] | Waters 96-well 7 mm round plug silicone/PTFE cap mat. [8] |

| Low-Evaporation Lids | Specially designed lids that minimize evaporation while allowing for gas exchange in cell-based assays. [6] | Automated cellular and compound microplate lids from Wako Lab Automation. [6] |

| Stable Isotope-Labeled Standards | Synthetic peptides/proteins with heavy isotopes used for data normalization; they correct for technical variation during sample processing. [8] | Added to each sample prior to digestion in MRM proteomic assays. [8] |

| Thermal Cycler | Provides highly uniform and precise temperature control across the entire plate, minimizing temperature gradients. [8] [9] | Thermo Scientific Hybaid PX2 thermal cycler. [8] |

| Sealing Tape / Films | Adhesive films that create a complete seal over the plate to prevent evaporation. Opt for optically clear films for fluorescence reads. [9] [11] | Nunc Sealing Tape (polyolefin silicone, -40°C to +90°C). [11] |

| Uniform Microplates | Plates designed with optimal lid geometry and material to ensure consistent gas and temperature exchange across all wells. [7] | TPP 96-well plates, which demonstrate uniform evaporation (∼10%) across the entire plate. [7] |


Mitigating Spatial Bias: A Strategic Workflow

The following diagram illustrates a logical workflow for diagnosing and addressing spatial bias in microplate experiments, integrating the tools and strategies discussed.

Liquid Handling Inconsistencies and Meniscus Formation

Frequently Asked Questions

Q1: How does meniscus formation specifically lead to spatial bias in microtiter plate assays? A meniscus forms due to surface tension between the liquid and well wall, creating a curved liquid surface. This curvature alters the effective path length for absorbance measurements, as the depth the light must travel through the liquid is no longer uniform [5]. Inconsistent path lengths across the plate lead to variations in absorbance readings, creating a positional bias where wells with more pronounced menisci yield different results than those with flatter surfaces, even with identical sample concentrations [5].
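The dependence on path length follows from the Beer-Lambert law. As a short worked relation (the symbols are introduced here for illustration and do not appear in the source):

```latex
A = \varepsilon \, c \, \ell
\qquad\Longrightarrow\qquad
\frac{A_1}{A_2} = \frac{\ell_1}{\ell_2}
\quad \text{for two wells with the same } \varepsilon \text{ and } c
```

Here A is the absorbance, ε the molar absorption coefficient, c the concentration, and ℓ the path length through the liquid. Under this relation, a well whose effective path length is 5% shorter reads about 5% lower absorbance even though the sample is identical.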

Q2: Which plate materials and reagents are known to exacerbate meniscus formation? Using cell culture-treated plates, which are hydrophilic to enhance cell adhesion, increases meniscus formation [5]. Furthermore, reagents such as TRIS buffers, EDTA, sodium acetate, and detergents like Triton X are known to increase meniscus formation as their concentrations rise [5]. The table below summarizes the key materials and their effects.

Table: Microplate Types and Their Impact on Meniscus and Assay Performance

| Microplate Type / Material | Recommended Assay Type | Impact on Meniscus & Assay |
|---|---|---|
| Standard Polystyrene (Hydrophobic) | General absorbance assays [5] | Minimizes meniscus formation; suitable for most applications [5]. |
| Cell Culture-Treated (Hydrophilic) | Cell-based assays [5] | Increases meniscus formation; should be avoided for absorbance measurements [5]. |
| Cyclic Olefin Copolymer (COC) | UV absorbance (e.g., DNA/RNA quantification) [5] | Provides optimal transparency at short wavelengths; meniscus effect depends on surface treatment [5]. |
| Black | Fluorescence intensity [5] | Reduces background noise and autofluorescence [5]. |
| White | Luminescence [5] | Reflects light to amplify weak signals [5]. |

Q3: What is a block randomization scheme and how does it mitigate positional bias? A block randomization scheme is a novel plate layout design that coordinates the placement of specific curve regions (e.g., standards, samples) into pre-defined blocks on the plate. Unlike complete randomization, which scatters treatments across the plate without regard to position, this method accounts for the known distribution of assay bias and variability [1]. In one study, applying this layout to a sandwich ELISA reduced mean bias in relative potency estimates from 6.3% to 1.1% and decreased imprecision from 10.2% to 4.5% CV [1]. The scheme mitigates positional effects more effectively than simply avoiding the outer wells.

Troubleshooting Guides

Problem: Inconsistent Absorbance Readings Due to Meniscus

Background: A curved liquid meniscus distorts the light path in absorbance measurements, leading to inaccurate concentration calculations and significant well-to-well variability, which contributes to spatial bias [5].

Investigation: Visually inspect wells for a curved liquid surface. Check if you are using a hydrophilic plate (common for cell culture) or reagents known to promote meniscus formation [5].

Solutions:

- Use Hydrophobic Plates: Opt for standard hydrophobic polystyrene plates instead of hydrophilic cell culture plates for absorbance measurements [5].

- Avoid Problematic Reagents: Minimize the use of TRIS, EDTA, acetate, and detergents where possible [5].

- Maximize Well Volume: Fill wells to their maximum capacity to minimize the space available for a meniscus to form [5].

- Apply Path Length Correction: If your microplate reader has the setting, use a path length correction protocol. This tool detects the actual path length and normalizes the absorbance readings to the fill volume [5].

Problem: High Well-to-Well Variability in Liquid Delivery

Background: Inaccurate and imprecise pipetting is a fundamental source of liquid handling inconsistency, directly affecting data quality and increasing spatial bias.

Investigation: Calibrate pipettes regularly and observe user technique for common errors.

Solutions: Implement the following proper pipetting techniques to improve accuracy and precision [13]:

- Pre-wet Tips: Aspirate and fully expel the liquid at least three times before taking the actual delivery volume. This increases humidity within the tip, reducing evaporation [13].

- Work at Constant Temperature: Allow liquids and equipment to equilibrate to ambient temperature before pipetting, as volume delivery is temperature-dependent [13].

- Use Standard (Forward) Mode: For most aqueous solutions, use standard pipetting mode for better accuracy and precision. Reverse mode can lead to over-delivery [13].

- Pause Consistently After Aspiration: After aspirating, pause for one second before removing the tip from the liquid. This allows liquid flow into the tip to stabilize and balances evaporation effects [13].

- Immerse Tips Correctly: Hold the pipette vertically and immerse the tip adequately below the meniscus without touching the bottom of the container [13].

- Use High-Quality, Matched Tips: Use tips specifically designed for your pipette brand and model to ensure an airtight seal and accurate liquid delivery [13].

Table: Effects of Common Reagents on Meniscus Formation

| Reagent | Effect on Meniscus | Suggested Mitigation |
|---|---|---|
| TRIS Buffer | Increases formation with higher concentration [5] | Use alternative buffers where possible; use path length correction [5]. |
| Detergents (e.g., Triton X) | Increases formation with higher concentration [5] | Use minimum necessary concentration [5]. |
| EDTA | Increases formation with higher concentration [5] | Use alternative chelating agents if viable [5]. |
| Sodium Acetate | Increases formation with higher concentration [5] | Use alternative salts if viable [5]. |
| Glycerol (Viscous) | Presents general pipetting challenges [13] | Use reverse pipetting mode for improved precision [13]. |

Problem: Spatial Bias (Positional Effects) Across the Microtiter Plate

Background: Variability in raw signal measurements is not uniform across the plate, often due to edge effects, temperature gradients, or uneven evaporation. This can disproportionately affect assay results, such as relative potency estimates [1].

Investigation: Analyze data from a control sample placed across the entire plate to identify patterns of bias.

Solutions:

- Implement a Block Randomization Layout: Move beyond simple randomization. This advanced scheme involves placing specific curve regions (e.g., standard concentrations, test samples) into pre-defined blocks on the plate based on the known distribution of assay variability [1]. The diagram below illustrates the conceptual workflow for addressing spatial bias, integrating both plate layout and liquid handling considerations.

Diagram Title: Strategy for Mitigating Spatial Bias

- Address Meniscus Formation: As detailed in the first troubleshooting guide, mitigating the meniscus effect is a critical part of reducing overall well-level variability [5].

- Ensure Pipetting Precision: As detailed in the second troubleshooting guide, consistent liquid delivery is fundamental to minimizing one source of random error that can compound positional effects [13].

The Scientist's Toolkit

Table: Essential Reagents and Materials for Mitigating Liquid Handling Errors

| Item | Function & Rationale |

|---|---|

| Hydrophobic Microplates | Minimizes meniscus formation by repelling water, leading to a flatter liquid surface and more consistent absorbance path lengths [5]. |

| High-Quality Matched Pipette Tips | Ensure an airtight seal with the pipette shaft, which is critical for accurate and precise volume delivery. Prevents leaks and aspiration errors [13]. |

| Electronic Pipette | Automates plunger movement to minimize user-induced variability and personal technique effects, ensuring highly consistent aspiration and dispensing [13]. |

| Non-Interfering Reagents | Using alternatives to meniscus-promoting reagents like TRIS and detergents helps maintain a consistent liquid surface and measurement path length [5]. |

| Path Length Correction Tool | A software feature on advanced microplate readers that automatically measures and corrects for the actual liquid depth in each well, neutralizing the meniscus effect [5]. |


Troubleshooting Guides

FAQ 1: How does spatial bias specifically lead to false positives and false negatives in my high-throughput screening (HTS) data?

Spatial bias systematically distorts measurements from their true values in specific, patterned locations on microtiter plates. This distortion directly impacts hit identification by shifting measurements above or below critical activity thresholds.

- False Positives occur when the spatial bias artificially inflates the signal of an inactive compound, causing it to rise above the hit threshold. For example, an edge effect on a 384-well plate might consistently increase signals in the first and last columns. An inactive compound located in these columns could have its signal boosted enough to be mistakenly classified as a hit [14].

- False Negatives occur when the spatial bias artificially suppresses the signal of an active compound, causing it to fall below the hit threshold. A potent compound located in a row affected by a liquid handling error that under-dispenses reagent would have a diminished signal and might be incorrectly missed as a hit [14].

The underlying issue is that this bias is not random noise but a systematic error, which means it creates predictable zones of over-estimation and under-estimation on the plate, severely compromising the reliability of the hit selection process [14] [15].
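The two failure modes described in this answer can be reproduced with a toy calculation. The threshold, signal values, and bias sizes below are hypothetical, and the sketch assumes an assay in which a higher signal means more activity.

```python
HIT_THRESHOLD = 50.0  # hypothetical cutoff: signals at or above this count as hits

def is_hit(signal, threshold=HIT_THRESHOLD):
    """Classify a well as a hit when its signal reaches the threshold."""
    return signal >= threshold

# Inactive compound sitting in an edge column with an additive bias of +15.
inactive_true = 40.0
inactive_observed = inactive_true + 15.0

# Active compound in a row where under-dispensing scales the signal by 0.75.
active_true = 60.0
active_observed = active_true * 0.75

false_positive = is_hit(inactive_observed) and not is_hit(inactive_true)
false_negative = is_hit(active_true) and not is_hit(active_observed)
print(f"false positive: {false_positive}, false negative: {false_negative}")
```

Neither compound changed; only its position on the plate did, which is why the error is systematic rather than random.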

FAQ 2: Can spatial bias really reduce the dynamic range of my assay, and how can I detect it?

Yes, spatial bias can compress the effective dynamic range of your assay. The dynamic range is the interval between the minimum and maximum quantifiable signal. Spatial bias narrows this window by raising the baseline (for additive bias) or by disproportionately affecting high or low signals (for multiplicative bias).

- Additive Bias: This type of bias adds a fixed amount of signal (positive or negative) to wells regardless of their true signal level. For instance, background fluorescence from a plate edge effect adds a constant value to all measurements. This elevates the baseline, reducing the signal-to-noise ratio and compressing the usable range from the bottom [14].

- Multiplicative Bias: This bias scales with the true signal. An example is uneven heating across a plate, which might affect enzyme kinetics more profoundly in wells with high activity. This can dampen the strongest signals and amplify differences in low-signal regions, effectively compressing the range from the top and distorting the relative relationships between samples [14] [15].

You can detect this by visually inspecting the plate layout of raw signals. A heatmap of the measured values should look random if no spatial bias exists. The presence of clear patterns, such as gradients, strong row/column effects, or "hot" and "cold" zones, indicates spatial bias that is likely reducing your assay's dynamic range [14].
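Alongside a heat map, a quick numerical check is to compare each row and column median with the plate median. The sketch below is one simple way to do this; the function name and the example plate are hypothetical, and it is a screening aid rather than a formal test.

```python
from statistics import median

def positional_offsets(plate):
    """Return (row_offsets, col_offsets): row/column medians minus the plate median."""
    plate_median = median(v for row in plate for v in row)
    row_offsets = [median(row) - plate_median for row in plate]
    col_offsets = [median(row[c] for row in plate) - plate_median
                   for c in range(len(plate[0]))]
    return row_offsets, col_offsets

# Hypothetical 4 x 6 plate: uniform signal of 100 except an elevated last column.
plate = [[100.0] * 5 + [120.0] for _ in range(4)]
row_offsets, col_offsets = positional_offsets(plate)
print("row offsets:", row_offsets)
print("column offsets:", col_offsets)
```

On an unbiased plate all offsets should hover near zero; here the last column stands out with an offset of 20 while every row offset stays at zero.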

FAQ 3: What is the difference between additive and multiplicative spatial bias, and why does it matter for correction?

Choosing the wrong correction model can leave residual bias or even introduce new errors into your data. The core difference lies in how the bias interacts with the true signal.

Additive Bias: The bias is a fixed value that is added to the true signal. It is independent of the signal's magnitude. A common source is background interference or static reader effects [14].

- Model: Observed Signal = True Signal + Bias

Multiplicative Bias: The bias is a proportion or factor applied to the true signal. It depends on the signal's magnitude. Common sources include uneven reagent dispensing or evaporation that concentrates samples [14] [15].

- Model: Observed Signal = True Signal × Bias Factor

The following table summarizes the key differences:

| Feature | Additive Bias | Multiplicative Bias |

|---|---|---|

| Effect on Signal | Adds a constant value | Scales the signal by a factor |

| Impact on Low Signals | Can cause a large relative error, potentially creating false positives | Effect is proportional; less risk of creating extreme false positives/negatives |

| Impact on High Signals | Causes a small relative error | Can cause a large absolute error, compressing the high end of the dynamic range |

| Common Causes | Background fluorescence, plate reader drift | Pipetting errors, evaporation, uneven heating |

| Typical Correction | B-score, median polishing | Normalization using a ratio, log-transformation followed by additive correction |

Why it matters: Applying an additive correction (like a B-score) to data with multiplicative bias will not fully correct the data. Advanced correction methods now exist that can automatically identify and apply the appropriate model, including complex models where biases interact in both additive and multiplicative ways [15].
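The mismatch between the two models can be illustrated with a minimal sketch. It assumes a hypothetical 4 × 4 plate in which every column holds the same set of true signal levels, and it uses simple column-median corrections (not the B-score or any published algorithm) purely to show why an additive correction leaves residual error on multiplicatively biased data. All function and variable names are illustrative.

```python
from statistics import median

def column_medians(plate):
    """Median of each column; the plate is a list of rows."""
    return [median(col) for col in zip(*plate)]

def plate_median(plate):
    return median(v for row in plate for v in row)

def correct_additive(plate):
    """Additive model: subtract each column's offset from the plate median."""
    ref = plate_median(plate)
    offsets = [m - ref for m in column_medians(plate)]
    return [[v - offsets[j] for j, v in enumerate(row)] for row in plate]

def correct_multiplicative(plate):
    """Multiplicative model: divide by each column's factor relative to the plate median."""
    ref = plate_median(plate)
    factors = [m / ref for m in column_medians(plate)]
    return [[v / factors[j] for j, v in enumerate(row)] for row in plate]

def max_row_spread(plate):
    """Largest within-row range; zero means every row is uniform again."""
    return max(max(row) - min(row) for row in plate)

# Hypothetical plate: each row has one true signal level in all four columns.
true_signal = [[level] * 4 for level in (100.0, 200.0, 400.0, 800.0)]
# Multiplicative bias: the last column reads 1.5x too high.
biased = [[v * (1.5 if j == 3 else 1.0) for j, v in enumerate(row)]
          for row in true_signal]

spread_after_additive = max_row_spread(correct_additive(biased))
spread_after_multiplicative = max_row_spread(correct_multiplicative(biased))
print(spread_after_additive, spread_after_multiplicative)
```

In this toy case the multiplicative correction restores uniform rows (up to a common rescaling of the whole plate), while the additive correction over-corrects low signals and under-corrects high ones.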

Experimental Protocols

Detailed Methodology: Block Randomization Scheme for Mitigating Positional Bias

This protocol describes how to implement a block-randomized plate layout, which has been shown to reduce positional bias more effectively than full randomization or avoiding plate edges [1].

1. Principle: Instead of completely randomizing sample locations, this scheme coordinates the placement of samples with specific properties (e.g., standard curve points) into pre-defined blocks on the plate. This design is based on the knowledge that assay bias and variability are not randomly distributed but follow a spatial pattern. By systematically distributing the key measurements across these patterns, the bias averages out for the critical calculated results, such as relative potency [1].

2. Procedure:

- Step 1: Define Blocks. Divide the microtiter plate into logical blocks. For a 96-well plate, a common block size is 2 columns (e.g., 6 blocks of 16 wells). The size should be chosen based on the assumed pattern of bias [1].

- Step 2: Assign Critical Samples to Blocks. Identify the samples most critical to your final calculation. In a potency assay, this is the standard curve. Assign each point of the standard curve to a specific, pre-determined block.

- Example: For an 8-point standard curve, you would use 8 blocks. Each block contains one replicate of each standard concentration, but the location of each concentration within the block is randomized.

- Step 3: Randomize Within Blocks. Within each block, randomize the placement of the assigned standard curve point and the test samples. This controls for local variability within the block.

- Step 4: Replicate Across Plate. The entire block structure is replicated across the plate to ensure sufficient replication for statistical power.

3. Outcome: A study using this scheme in a sandwich ELISA for vaccine release demonstrated a dramatic improvement:

- Mean bias of relative potency estimates reduced from 6.3% to 1.1%.

- Imprecision (CV) of relative potency estimates reduced from 10.2% to 4.5% [1].
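The layout-generation part of this procedure can be sketched in a few lines. The example below is an assumption-laden illustration, not the layout used in [1]: it assumes a 96-well plate split into 2-column blocks (16 wells each), with each block receiving one replicate of an 8-point standard curve plus eight hypothetical test samples, shuffled within the block. The sample names and the function name are made up for the example.

```python
import random

ROWS = "ABCDEFGH"

def block_randomized_layout(standards, samples, cols_per_block=2, n_cols=12, seed=0):
    """Return {well_id: content} with contents shuffled independently inside each block."""
    rng = random.Random(seed)  # fixed seed so the layout is reproducible
    layout = {}
    for start in range(1, n_cols + 1, cols_per_block):
        wells = [f"{r}{c:02d}" for r in ROWS
                 for c in range(start, start + cols_per_block)]
        contents = list(standards) + list(samples)
        if len(contents) != len(wells):
            raise ValueError("block contents must fill the block exactly")
        rng.shuffle(contents)  # randomize placement within this block only
        layout.update(zip(wells, contents))
    return layout

# Hypothetical contents: 8 standard-curve points and 8 test samples per block.
standards = [f"STD{i}" for i in range(1, 9)]
samples = [f"S{i}" for i in range(1, 9)]
layout = block_randomized_layout(standards, samples)

for row in ROWS:  # print the plate map row by row
    print(row, " ".join(f"{layout[f'{row}{c:02d}']:>4}" for c in range(1, 13)))
```

Because every block contains the full standard curve, each standard concentration is sampled once in every 2-column strip of the plate, so a column-wise gradient affects all concentrations roughly equally.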

Detailed Methodology: Identification and Correction of Additive and Multiplicative Spatial Biases

This protocol outlines a statistical procedure for detecting and removing complex spatial biases, which is applicable to various screening technologies (HTS, HCS) [14] [15].

1. Principle: The method involves a two-step correction process. First, it corrects for plate-specific bias (which may be additive or multiplicative and can vary from plate to plate) using an algorithm called PMP (Pattern-based Multiwell Plate normalization). Second, it corrects for assay-specific bias (a consistent bias pattern across all plates in an experiment) using robust Z-score normalization [14].

2. Procedure:

- Step 1: Data Preparation. Compile the raw measurement data from all plates, preserving the well identities (e.g., A01, P24).

- Step 2: Plate-Specific Bias Correction (PMP).

- For each plate, the algorithm tests whether the data is better described by an additive, multiplicative, or no-bias model.

- It uses statistical tests (like Mann-Whitney U or Kolmogorov-Smirnov) to identify rows and columns significantly affected by bias.

- The algorithm then estimates the bias for each affected row and column and subtracts (additive) or divides (multiplicative) it from the raw data. Advanced models can also account for interactions between row and column biases [15].

- Step 3: Assay-Specific Bias Correction.

- After plate-specific effects are removed, the data is further normalized using a robust Z-score method. This step corrects for any persistent location-dependent bias that is present across all plates in the assay (e.g., a specific well that is consistently too high or too low across all plates) [14].

- The robust Z-score is calculated per well location across all plates, using the median and median absolute deviation (MAD) to minimize the influence of true hits or outliers.

- Step 4: Hit Identification. The final corrected data is used for hit selection, typically by applying a threshold based on the mean and standard deviation of the normalized data (e.g., μ - 3σ) [14].

3. Outcome: Simulation studies show that this combined approach (PMP + robust Z-score) yields a higher true positive hit detection rate and a lower total count of false positives and false negatives compared to using B-score or well correction methods alone [14].
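Step 3 (the per-well robust Z-score across plates) lends itself to a short sketch. This is not the PMP algorithm or the AssayCorrector implementation; it only illustrates the median/MAD normalization described above on hypothetical data, with plates represented as dictionaries keyed by well ID. The fixed cut-off of -3 stands in for the hit threshold and is an assumption for the example.

```python
from statistics import median

def robust_z(values):
    """(x - median) / MAD, matching the unscaled form used in this article."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        raise ValueError("MAD is zero; robust Z-score is undefined")
    return [(v - med) / mad for v in values]

def per_well_robust_z(plates):
    """Normalize each well location across all plates (assay-specific correction)."""
    normalized = [dict() for _ in plates]
    for well in plates[0]:
        scores = robust_z([p[well] for p in plates])
        for out, z in zip(normalized, scores):
            out[well] = z
    return normalized

# Hypothetical plate-corrected data: five plates, two well locations.
plates = [
    {"A01": 10.0, "A02": 20.0},
    {"A01": 11.0, "A02": 22.0},
    {"A01": 9.0, "A02": 18.0},
    {"A01": 10.0, "A02": 21.0},
    {"A01": 2.0, "A02": 19.0},  # A01 on this plate is a strong inhibitor
]
normalized = per_well_robust_z(plates)

# Illustrative hit call: robust Z at or below -3 (inhibition assay assumed).
hits = [(i, w) for i, p in enumerate(normalized) for w, z in p.items() if z <= -3]
print(hits)
```

Some implementations multiply the MAD by 1.4826 so that the score is comparable to a standard Z-score under normality; that scaling is omitted here to match the formula given in this article.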

Workflow Visualization

The following diagram illustrates the logical decision process for diagnosing and mitigating spatial bias in microtiter plate experiments.

The Scientist's Toolkit: Research Reagent Solutions

The following table details key materials and their functions in experiments designed to mitigate spatial bias.

| Item | Function in Mitigating Spatial Bias |

|---|---|

| Microtiter Plates | The physical platform for assays. The choice of well count (96, 384, 1536) defines the spatial grid where bias manifests. Using plates with low autofluorescence and uniform binding characteristics is the first step to minimizing intrinsic bias [14]. |

| Liquid Handling Robots | A primary source of multiplicative bias if imprecise. Automated, calibrated systems are essential for reproducible dispensing of samples and reagents across all wells, reducing biases from pipetting errors [14]. |

| Plate Readers | Instruments for signal detection. Their optics and detectors can be a source of additive bias (e.g., edge effects). Regular calibration and using readers with homogeneous light paths and detection are critical [16]. |

| Control Samples | Used to map and quantify bias. Including negative/positive controls, standard curves, and blank wells distributed across the plate (e.g., via block randomization) provides the necessary data to model and correct for spatial effects [1] [14]. |

| Statistical Software (R/Python) | Essential for implementing advanced correction algorithms. Tools like the AssayCorrector R package [15] allow researchers to apply the PMP and robust Z-score methods to identify and remove both additive and multiplicative spatial biases. |

| Digital PCR (dPCR) Platforms | While subject to volume variability bias, advanced modeling methods like NPVolMod can correct for it. This nonparametric method accounts for arbitrary forms of volume variability, increasing trueness and the linear dynamic range of quantification [17]. |


Economic Consequences in High-Throughput Screening Campaigns

Troubleshooting Guides

FAQ: Identifying and Classifying Spatial Bias

Q1: What are the most common types of spatial bias in HTS, and how do they impact data quality and costs?

Spatial bias systematically distorts measurements from specific well locations, directly increasing false positive/negative rates. This wastes resources on validating erroneous hits and can cause promising compounds to be overlooked [2]. The main types are:

- Additive Bias: A constant value is added or subtracted from measurements in affected wells (e.g., from consistent pipetting errors) [2].

- Multiplicative Bias: The signal in affected wells is scaled by a factor (e.g., from reagent evaporation) [2] [18].

- Plate-Specific vs. Assay-Specific Bias: Bias can affect individual plates or be consistent across all plates in an assay, requiring different correction strategies [2].

Q2: How can I quickly diagnose if my HTS data is affected by spatial bias?

Visual inspection of plate heatmaps is the first step. Look for:

- Edge Effects: Systematically higher or lower signals in the outer rows and columns [2].

- Row/Column Effects: Clear striping patterns across the plate [19].

- Gradient Effects: A continuous slope of signal intensity across the plate [19].

Statistical tests like the Mann-Whitney U test or Kolmogorov-Smirnov test can be used to confirm the significance of row and column effects [2].
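As a rough illustration of such a test, the sketch below compares edge wells with interior wells using a hand-written Mann-Whitney U test. It is a simplified version under stated assumptions: it uses the normal approximation with average ranks for ties and no tie correction in the variance, and the plate data are synthetic. In practice a tested statistics library would normally be used instead.

```python
import math

def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, no tie correction)."""
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # average rank for a run of tied values
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    rank_sum_x = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u = rank_sum_x - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the normal tail
    return u, p_value

# Synthetic 8 x 12 plate with a +10 signal shift in the outer rows and columns.
N_ROWS, N_COLS = 8, 12
plate = [[100 + (r * 7 + c * 3) % 5 for c in range(N_COLS)] for r in range(N_ROWS)]

def is_edge(r, c):
    return r in (0, N_ROWS - 1) or c in (0, N_COLS - 1)

for r in range(N_ROWS):
    for c in range(N_COLS):
        if is_edge(r, c):
            plate[r][c] += 10

edge = [plate[r][c] for r in range(N_ROWS) for c in range(N_COLS) if is_edge(r, c)]
interior = [plate[r][c] for r in range(N_ROWS) for c in range(N_COLS) if not is_edge(r, c)]
u_stat, p_value = mann_whitney_u(edge, interior)
print(u_stat, p_value)
```

The same function can be applied to one row (or column) versus the rest of the plate to test for row and column effects.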

Q3: Our HTS campaign yielded a low hit confirmation rate upon retesting. Could spatial bias be the cause?

Yes, this is a classic symptom. Spatial bias can inflate the activity of inactive compounds (increasing false positives) or suppress the signal of active ones (increasing false negatives) [2] [20]. This leads to wasted resources on retesting and following up on invalid leads. Implementing robust bias correction methods during primary data analysis is crucial for improving the confirmation rate and reducing these economic costs [2].

Experimental Protocol: A Workflow for Comprehensive Bias Correction

Objective: To detect, classify, and correct for both additive and multiplicative spatial bias in HTS data, thereby improving hit selection accuracy and reducing economic waste.

Materials:

- Raw HTS data from microtiter plates (e.g., 384-well format).

- Statistical software (e.g., R with packages like AssayCorrector [18] [15]).

Procedure:

- Data Profiling and Visualization: Generate heatmaps for each plate and for the assay overall to visually identify patterns like edge effects, gradients, or striping [2] [19].

- Bias Type Diagnosis: Apply statistical tests to determine the nature of the bias.

- Use the Mann-Whitney U test to compare the distribution of row medians versus the plate median, and column medians versus the plate median [2].

- A significant result (e.g., p < 0.05) indicates the presence of systematic row or column effects.

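- Model Selection: Based on the diagnosis, choose an appropriate correction method: an additive correction (e.g., B-score or median polish) when the bias behaves like a constant offset, or a multiplicative correction (e.g., ratio-based normalization, or a log transformation followed by an additive correction) when the bias scales with the signal.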

- Bias Correction: Apply the selected correction method to the raw data.

- The general principle for median filters is: Corrected Value = (Global Plate Median / Local Filter Median) × Raw Value [19].

- Normalization: After bias correction, normalize the data using a method like robust Z-score to identify hits based on median absolute deviation (MAD), which is less sensitive to outliers [2].

- Hit Identification: Apply a statistically defined threshold (e.g., μ - 3σ for each plate) to the corrected and normalized data to select candidate hits [2].

This integrated protocol, which corrects for both plate-specific and assay-specific biases, has been shown to yield the highest true positive rate and the lowest false positive/negative count compared to methods that do not correct for bias or only use B-score [2].
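The median-filter principle from the Bias Correction step can be sketched as follows. The filter shape here, a square window of neighbouring wells clipped at the plate border, is an assumption made for illustration; the filter geometries used in [19] are not described in this article, and the function name is hypothetical.

```python
from statistics import median

def median_filter_correct(plate, radius=1):
    """Corrected = (global plate median / local window median) * raw value."""
    n_rows, n_cols = len(plate), len(plate[0])
    global_med = median(v for row in plate for v in row)
    corrected = []
    for r in range(n_rows):
        out_row = []
        for c in range(n_cols):
            # Square neighbourhood around (r, c), clipped at the plate border.
            window = [plate[i][j]
                      for i in range(max(0, r - radius), min(n_rows, r + radius + 1))
                      for j in range(max(0, c - radius), min(n_cols, c + radius + 1))]
            out_row.append(global_med / median(window) * plate[r][c])
        corrected.append(out_row)
    return corrected

# Synthetic 4 x 6 plate with a left-to-right gradient (100, 110, ..., 150).
gradient = [[100.0 + 10.0 * c for c in range(6)] for _ in range(4)]
flattened = median_filter_correct(gradient)

# A plate with no spatial pattern is left unchanged.
uniform = [[50.0] * 6 for _ in range(4)]
unchanged = median_filter_correct(uniform)
print(flattened[1], unchanged[1])
```

Because the local reference is a median, a single strong hit inside a window barely shifts it, so isolated actives are largely preserved while smooth gradients are flattened. Border wells see a truncated window and are corrected less exactly in this simple version.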

The following workflow diagram illustrates the logical sequence of this comprehensive bias mitigation strategy:

Data Presentation: Comparing Bias Correction Methods

The economic impact of spatial bias is directly tied to the effectiveness of the correction method used. The table below summarizes the performance of different methods in a simulation study, measured by their ability to correctly identify true hits (True Positive Rate) and minimize incorrect identifications (Total Error Count) [2].

Table 1: Performance Comparison of HTS Spatial Bias Correction Methods

| Correction Method | Key Principle | True Positive Rate (Example) | Total False Positives & Negatives (Example) | Best for Bias Type |

|---|---|---|---|---|

| No Correction | Uses raw, uncorrected data | Lowest | Highest | N/A |

| B-score | Uses median polish to remove row/column effects [21] | Low | High | Additive, Plate-specific |

| Well Correction | Corrects systematic error from specific well locations [2] | Medium | Medium | Assay-specific |

| PMP with Robust Z-score | Corrects for both additive and multiplicative biases, followed by robust normalization [2] | Highest | Lowest | Additive, Multiplicative, Plate & Assay-specific |

The Scientist's Toolkit: Key Research Reagent Solutions

Implementing effective bias correction requires both computational tools and practical experimental strategies. The following table lists key solutions to integrate into your HTS campaign.

Table 2: Essential Tools and Reagents for Mitigating Spatial Bias

| Tool / Reagent | Function / Description | Role in Bias Mitigation |

|---|---|---|

| R package AssayCorrector | A statistical program for detecting and removing both additive and multiplicative spatial biases [18] [15]. | Provides a direct implementation of advanced correction protocols for data analysis. |

| Automated Liquid Handler (e.g., I.DOT) | Non-contact dispenser with integrated droplet verification technology [20]. | Reduces variability and human error at the source, a major cause of spatial bias. |

| Bayesian HTS Package (BHTSpack) | An R package using Bayesian nonparametric modeling to identify hits from multiple plates simultaneously [21]. | Shares statistical strength across plates, providing more robust activity estimates and better FDR control. |

| Constraint Programming AI | A method for designing optimized microplate layouts using artificial intelligence [22]. | Reduces initial bias by strategically randomizing samples and controls, limiting batch effects. |

| Robust Z-Score Normalization | A normalization method using median and Median Absolute Deviation (MAD) instead of mean and standard deviation. | Reduces the influence of outlier compounds (hits) when setting the baseline activity level for a plate [2]. |


Detection and Correction Methods: Practical Approaches for Reliable Data

Spatial bias in microtiter plate-based assays represents a significant challenge in biochemical and drug development research. Positional effects, where variability in raw signal measurements is not uniform across all regions of the plate, can disproportionately affect assay results and compromise data reliability [1]. The edge effect—a well-documented phenomenon where outer wells experience increased evaporation during culturing—leads to variations in cell growth and concentration of media components that can harm cells [23]. This technical guide explores block randomization schemes as a systematic approach to mitigate these spatial biases while maintaining experimental throughput.

Frequently Asked Questions (FAQs)

1. What is positional bias in microtiter plate experiments? Positional bias refers to systematic variability in measurement signals across different regions of a microtiter plate. This includes the edge effect, where outer wells exhibit different experimental conditions due to increased evaporation, potentially leading to concentrated media components and variations in cell growth [23]. These biases can significantly impact data reproducibility and robustness if not properly addressed.

2. How does block randomization differ from complete randomization? Unlike complete randomization, which places treatments randomly across the entire plate without constraints, block randomization coordinates placement of specific curve regions into pre-defined blocks on the plate based on key experimental findings and assumptions about the distribution of assay bias and variability [1]. This approach maintains the benefits of randomization while systematically controlling for spatial biases.

3. What are the limitations of commonly used mitigation strategies? Common strategies like excluding outer wells, using humidified secondary containers, or decreasing incubation time often introduce complexity while only partially mitigating positional effects and significantly reducing assay throughput [1] [23]. While these approaches provide some benefit, they fail to address the fundamental spatial distribution of treatments across the plate.

4. When should I consider implementing a block randomization scheme? Block randomization is particularly valuable when:

- Your assay demonstrates significant positional bias or edge effects

- Experimental throughput is a concern and well exclusion is not feasible

- You require high precision in relative potency estimates

- Automated liquid handling systems are available for implementation

5. How does block randomization improve assay performance? Research demonstrates that implementing a block-randomized plate layout reduced mean bias of relative potency estimates from 6.3% to 1.1% in a sandwich ELISA used for vaccine release. Additionally, imprecision in relative potency estimates decreased from 10.2% to 4.5% CV [1].

Troubleshooting Guides

Problem: Inconsistent Results Across Plate Regions

Symptoms:

- Systematic variation between outer and inner well measurements

- Inconsistent replicate data depending on plate position

- Poor reproducibility between experimental runs

Solution: Implement a block randomization scheme with the following workflow:

Implementation Steps:

- Analyze historical data to identify patterns of spatial bias

- Divide plate into blocks based on bias distribution patterns

- Assign treatment types to balance across bias zones

- Randomize within blocks to maintain statistical validity

- Implement using automation to minimize pipetting errors

Validation Metrics:

- Compare relative potency estimates across plate regions

- Calculate coefficient of variation between replicates

- Assess bias reduction through control samples distributed across plate

Problem: Decreased Throughput from Well Exclusion Methods

Symptoms:

- Reduced experimental capacity from avoiding outer wells

- Insufficient replication due to well availability constraints

- Compromised statistical power from limited sample size

Solution: Implement balanced block randomization that utilizes all wells while controlling for positional effects:

Block Design Strategy:

- Create blocks that contain both edge and interior positions

- Balance treatments across positional bias zones

- Maintain randomization within constraints to prevent confounding

Problem: Complex Implementation Logistics

Symptoms:

- Difficulty in manual pipetting according to randomization scheme

- Increased potential for human error in treatment placement

- Time-consuming experimental setup

Solution: Utilize automated liquid handling systems programmed with your block randomization scheme:

Automation Requirements:

- Pre-programmed plate maps defining block structure

- Liquid handler compatible with randomization protocols

- Integration with experimental design software

Research Reagent Solutions

Table: Essential Materials for Block Randomization Experiments

| Item | Function | Selection Considerations |

|---|---|---|

| Microplates | Platform for assays | Choose color based on detection method: transparent for absorbance, black for fluorescence (reduces background), white for luminescence (enhances signal) [3] [5] |

| Hydrophobic Plates | Reduce meniscus formation | Critical for absorbance measurements; avoid cell culture plates with hydrophilic treatments [5] |

| Automated Liquid Handler | Implement complex randomization | Enables precise dispensing according to block randomization schemes [23] |

| Plate Sealing Materials | Minimize evaporation | Particularly important for edge wells to reduce edge effects [23] |

| Humidified Chambers | Control evaporation | Secondary containers to maintain humidity during incubation [23] |

Performance Data

Table: Block Randomization Efficacy in ELISA Assay

| Parameter | Standard Layout | Block Randomization | Improvement |

|---|---|---|---|

| Mean Bias of Relative Potency | 6.3% | 1.1% | 82.5% reduction |

| Imprecision (% CV) | 10.2% | 4.5% | 55.9% reduction |

| Positional Effect Impact | Significant | Minimal | Enhanced data reliability |

Advanced Implementation Protocols

Protocol 1: Block Randomization Scheme Development

Objective: Create a block randomization scheme tailored to your specific assay system.

Materials:

- Historical assay data demonstrating positional effects

- Microplate documentation

- Statistical software or programming environment

Procedure:

- Characterize Positional Bias: Analyze control data across multiple plates to identify patterns of spatial variability [1]

- Define Block Structure: Partition plate into blocks based on bias patterns while maintaining practical implementation

- Assign Treatment Categories: Balance critical treatments across different bias zones

- Generate Randomization Schedule: Create randomized treatment placement within each block

- Validate Through Simulation: Test scheme with historical data to confirm bias reduction

Protocol 2: Multi-Center Reproducibility Enhancement

Objective: Implement block randomization across multiple experimental sites.

Materials:

- Standardized microplate type across sites [3]

- Centralized randomization schedule

- Interactive Response Technology (IRT) or Interactive Web Response Systems (IWRS) [24]

Procedure:

- Establish Central Randomization: Generate master randomization schedule

- Distribute to Sites: Provide site-specific allocation sequences

- Implement Consistent Protocols: Standardize plate handling across all locations

- Monitor Balance: Regularly check treatment distribution across positions

- Analyze with Stratification: Include site as a stratification factor in final analysis

Block randomization schemes represent a sophisticated methodological approach to mitigating spatial bias in microtiter plate-based research. By systematically controlling for positional effects while maintaining randomization benefits, researchers can significantly enhance data quality, reproducibility, and reliability. Implementation requires careful planning and typically benefits from automation, but the resulting improvement in assay performance justifies this investment. As the field moves toward increasingly sensitive assays and higher throughput requirements, such rigorous experimental designs become essential for generating scientifically valid and reproducible results.

Troubleshooting Guides

G1: How do I detect and quantify spatial bias in my microtiter plate data?

Issue: Unexplained systematic errors or patterns in assay results that correlate with well position rather than biological reality.

Solution: Implement variogram analysis to objectively quantify spatial structure and dependence within your plate data [25] [26].

Experimental Protocol:

- Data Collection: Run your standard assay across a full microtiter plate, ensuring all wells contain the same sample type and concentration to isolate positional effects [1].

- Calculate Semivariance: For each pair of wells, compute half the squared difference in measured values: γ(h) = ½ × [z(xᵢ) - z(xᵢ + h)]², where z(xᵢ) is the value at well position xᵢ, and h is the distance (lag) between the two wells [25].

- Bin Distance Pairs: Group well pairs into lag distance bins (e.g., 0-2 mm, 2-4 mm, etc.) and calculate average semivariance for each bin [27].

- Plot Experimental Variogram: Create a scatterplot of average semivariance (γ(h)) versus lag distance (h) [25].

- Model Fitting: Fit a theoretical model (spherical, exponential, or Gaussian) to the experimental variogram. The model parameters (nugget, sill, range) quantify different aspects of spatial structure [25].

Interpretation:

- Nugget Effect (y-intercept): Represents micro-scale variation or measurement error [25].

- Sill (plateau): Total population variance when spatial correlation ceases [25].

- Range (x-value at sill): Distance over which spatial dependence exists [25].

Table 1: Variogram Parameters and Their Interpretation for Microtiter Plate Analysis

| Parameter | Definition | Indicates | Optimal Pattern |

|---|---|---|---|

| Nugget | Micro-scale variance at zero distance | Measurement error or well-to-well variability | Low value relative to sill |

| Sill | Maximum semivariance | Total variability in the absence of spatial correlation | Stable plateau in variogram |

| Range | Distance where sill is reached | Scale of spatial influence | Should align with expected effect range |

| Nugget:Sill Ratio | Proportion of unstructured to structured variance | Strength of spatial autocorrelation | <0.25 indicates strong spatial structure |
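The first three protocol steps (pairwise semivariance, lag binning, averaging) can be sketched without any geostatistics package. The example assumes a 96-well plate with the standard 9 mm well-to-well pitch and a bin width of one pitch; the function name and the synthetic plates are illustrative, and model fitting (nugget, sill, range) is left to dedicated tools such as gstat.

```python
import math
from collections import defaultdict

def empirical_variogram(plate, pitch_mm=9.0, bin_width_mm=9.0):
    """Return {bin_index: (lag_start_mm, mean_semivariance, n_pairs)}."""
    wells = [(r, c, v) for r, row in enumerate(plate) for c, v in enumerate(row)]
    sq_diff_sum = defaultdict(float)
    pair_count = defaultdict(int)
    for a in range(len(wells)):
        r1, c1, v1 = wells[a]
        for b in range(a + 1, len(wells)):
            r2, c2, v2 = wells[b]
            lag = pitch_mm * math.hypot(r1 - r2, c1 - c2)  # centre-to-centre distance
            k = int(lag // bin_width_mm)
            sq_diff_sum[k] += (v1 - v2) ** 2
            pair_count[k] += 1
    # Semivariance per bin is half the mean squared difference of its pairs.
    return {k: (k * bin_width_mm, sq_diff_sum[k] / (2 * pair_count[k]), pair_count[k])
            for k in sorted(pair_count)}

# Synthetic plates: a column-wise gradient and a perfectly uniform plate.
gradient = [[float(c) for c in range(12)] for _ in range(8)]
uniform = [[1.0] * 12 for _ in range(8)]

vg_gradient = empirical_variogram(gradient)
vg_uniform = empirical_variogram(uniform)
for k, (lag_start, gamma, n_pairs) in vg_gradient.items():
    print(f"lag >= {lag_start:5.1f} mm  gamma = {gamma:7.3f}  pairs = {n_pairs}")
```

On the gradient plate the semivariance keeps rising with lag instead of levelling off at a sill, which is the typical signature of a plate-wide trend; the uniform plate gives zero semivariance at every lag.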

G2: How can I distinguish true spatial bias from random noise?

Issue: Uncertainty whether observed patterns represent significant spatial effects or random variation.

Solution: Combine Moran's I statistical testing with variogram analysis to validate spatial autocorrelation significance [25] [27].

Experimental Protocol:

- Global Moran's I Calculation: Compute using the formula: I = (n/W) × Σᵢ Σⱼ wᵢⱼ(xᵢ - x̄)(xⱼ - x̄) / Σᵢ (xᵢ - x̄)², where n is the number of observations (wells), x̄ is the mean of all values, wᵢⱼ is the spatial weight between wells i and j, and W is the sum of all spatial weights [27].

- Hypothesis Testing: Test H₀: No spatial autocorrelation versus H₁: Significant spatial autocorrelation.

- Z-score Calculation: Determine statistical significance by converting I to a z-score; |z| > 1.96 indicates significance at the 5% level (two-sided) [27].

- Local Indicators of Spatial Association (LISA): Decompose Global Moran's I into local components to identify specific well locations contributing to spatial clustering [27].

Interpretation:

- Positive Moran's I (0 to +1): Clustering of similar values (indicating positional bias) [27].

- Negative Moran's I (-1 to 0): Dispersion of similar values [27].

- Values near zero: Random spatial distribution [27].

Table 2: Statistical Tests for Spatial Bias Detection

| Test | Application | Threshold for Significance | Advantages | Limitations |

|---|---|---|---|---|

| Global Moran's I | Detects overall spatial clustering | \|Z-score\| > 1.96 (p < 0.05) | Global assessment, standardized metric | May miss local patterns |

| Local Moran's I (LISA) | Identifies local hotspots/coldspots | \|Z-score\| > 1.96 (p < 0.05) | Pinpoints specific biased regions | Multiple testing corrections needed |

| Variogram Analysis | Quantifies spatial dependence structure | Visual model fit and parameters | Models spatial scale of effects | Requires sufficient data points |

| Getis-Ord Gi* | Detects local clusters of high/low values | \|Z-score\| > 1.96 (p < 0.05) | Specifically identifies hotspot wells | Sensitive to weight matrix choice |
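A minimal sketch of the Global Moran's I formula is shown below. It assumes binary "rook" weights (wᵢⱼ = 1 for wells that share a side, 0 otherwise), which is one common choice rather than the only one; the significance test and the local (LISA) decomposition are not included and would normally come from a package such as spdep or PySAL.

```python
def morans_i(plate):
    """Global Moran's I on a rectangular plate with rook-adjacency weights."""
    n_rows, n_cols = len(plate), len(plate[0])
    n = n_rows * n_cols
    mean = sum(v for row in plate for v in row) / n
    dev = [[v - mean for v in row] for row in plate]
    cross_sum = 0.0   # sum of w_ij * (x_i - mean) * (x_j - mean)
    weight_sum = 0    # W, the sum of all weights
    for r in range(n_rows):
        for c in range(n_cols):
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < n_rows and 0 <= cc < n_cols:
                    cross_sum += dev[r][c] * dev[rr][cc]
                    weight_sum += 1
    variance_sum = sum(d * d for row in dev for d in row)
    return (n / weight_sum) * cross_sum / variance_sum

# Synthetic plates: a smooth column gradient and an alternating checkerboard.
gradient = [[float(c) for c in range(12)] for _ in range(8)]
checkerboard = [[1.0 if (r + c) % 2 == 0 else -1.0 for c in range(12)] for r in range(8)]

i_gradient = morans_i(gradient)
i_checkerboard = morans_i(checkerboard)
print(round(i_gradient, 3), round(i_checkerboard, 3))
```

The gradient plate gives a strongly positive value (neighbouring wells are similar), while the checkerboard gives -1 (every neighbour differs), matching the interpretation ranges listed above.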

G3: What experimental design effectively mitigates confirmed spatial bias?

Issue: Confirmed spatial bias is affecting assay accuracy and precision.

Solution: Implement block randomization schemes to distribute positional effects systematically [1] [22].

Experimental Protocol:

- Plate Zoning: Divide the microtiter plate into logical blocks based on variogram range analysis [1].

- Treatment Assignment: Randomly assign treatments and controls within each block rather than across the entire plate [1].

- Replication Strategy: Ensure each treatment appears in multiple spatial blocks to decorrelate biological signal from positional effects [1].

- Validation Measurement: Run a control plate with uniform samples to verify bias reduction using the same variogram and Moran's I analyses [1].

Performance Metrics:

- In a sandwich ELISA assay, this approach demonstrated reduction in mean bias of relative potency estimates from 6.3% to 1.1% [1].

- Imprecision in relative potency estimates decreased from 10.2% to 4.5% CV [1].

Frequently Asked Questions

FAQ 1: What is the minimum sample size needed for reliable variogram analysis in microtiter plates?

For stable variogram estimation, a minimum of 50-100 data points is recommended, so a single 96-well plate provides sufficient data. For higher-density plates (384-well, 1536-well), ensure adequate representation across all plate regions. The critical factor is having enough well pairs at each lag distance to compute stable semivariance estimates [25].

FAQ 2: How does microplate color selection interact with spatial bias detection?

Microplate color primarily affects optical measurements but doesn't directly cause spatial bias. However, inappropriate color selection can exacerbate detectable spatial patterns:

- Transparent plates: Optimal for absorbance assays but susceptible to meniscus effects that create positional variability [5].

- Black plates: Reduce background noise in fluorescence assays, improving signal-to-blank ratios [5].

- White plates: Enhance weak signals in luminescence assays through reflection [5].

Always control for plate color effects when investigating spatial bias.

FAQ 3: Can spatial statistics be applied to cell-based assays in microplates?

Yes, but with special considerations:

- Account for edge effects in cell culture plates where evaporation can create spatial gradients [3].

- Use well-scanning settings to correct for heterogeneous cell distribution within wells [5].

- Consider focal height adjustments for adherent cells at the well bottom [5].

- Monitor media components like Fetal Bovine Serum and phenol red that can cause autofluorescence with spatial patterns [5].

FAQ 4: What software tools are available for implementing these spatial analyses?

Multiple platforms support these techniques:

- R: Comprehensive packages (gstat, spdep) for variograms, Moran's I, and spatial analysis [25] [27].

- Python: Libraries (scikit-learn, PySAL) with spatial statistics capabilities [22].

- Specialized tools: PLAID for designing bias-resistant microplate layouts [22].

- GIS software: QGIS and ArcGIS for advanced spatial analysis [25].


Research Reagent Solutions

Table 3: Essential Materials for Spatial Bias Analysis in Microplate Assays

| Material/Reagent | Function in Spatial Analysis | Key Considerations |

|---|---|---|

| Standard Reference Material | Uniform sample for positional effect mapping | Use same concentration across all wells to isolate spatial effects |

| Hydrophobic Microplates | Reduce meniscus-induced variability | Minimizes path length variations in absorbance measurements [5] |

| Optically Optimized Plates | Control for autofluorescence spatial patterns | Black for fluorescence (reduce background), white for luminescence (enhance signal) [5] |

| Spatial Analysis Software | Implement variogram and autocorrelation calculations | R (gstat, spdep), Python (PySAL), or specialized tools like PLAID [22] |

| Barcode-labeled Plates | Track plate orientation and positioning | Ensures consistent orientation across experiments and readers [3] |

| Automated Liquid Handlers | Ensure consistent sample distribution | Reduces volume-based spatial artifacts [3] |

High-throughput screening (HTS), an indispensable tool in modern drug discovery and functional genomics, generates vast datasets by testing thousands of chemical compounds or microbial strains. However, these datasets inherently contain systematic and random errors that can lead to both false positive and false negative results [28]. A significant source of this error is spatial bias within microtiter plates, often manifesting as edge effects (where outer wells behave differently from inner wells) or stack effects [28] [29]. Normalization techniques are essential statistical corrections applied to HTS data to minimize these plate-to-plate and within-plate variations, ensuring that true biological signals are accurately identified.

This guide focuses on three non-control normalization methods—B-score, Z-score, and Robust Z-score—which operate on the principle that the majority of samples on a screening plate are inactive and represent a neutral baseline [28]. The following sections provide a detailed technical breakdown, troubleshooting advice, and protocols to help you effectively implement these methods in your research.

Methodologies at a Glance

The table below summarizes the core principles, key advantages, and limitations of the three normalization techniques.

Table 1: Comparison of B-score, Z-score, and Robust Z-score Normalization Methods

| Method | Core Principle | Key Advantages | Primary Limitations |

|---|---|---|---|

| B-score | Fits a two-way median polish model to account for row effects ( R_{ip} ) and column effects ( C_{jp} ) on a per-plate basis. Calculates residuals: ( r_{ijp} = y_{ijp} - (\mu_p + R_{ip} + C_{jp}) ) [28] [30]. | Effectively corrects for strong spatial biases (systematic row/column effects) [28] [30]. Robust to outliers due to use of medians [28]. | Implementation is more complex, requiring statistical software like R [28]. Can be overly aggressive and remove biological signal if spatial effects are mild [21]. |

| Z-score | Standardizes data based on the mean ( \mu_z ) and standard deviation ( \sigma_z ) of all compound values on a plate: ( Z = \frac{z - \mu_z}{\sigma_z} ) [28] [21]. | Simple and intuitive calculation, easy to implement in a spreadsheet [28]. Does not rely on control wells [28]. | Highly sensitive to outliers and the number of active compounds on a plate, which can inflate the standard deviation and mask hits [28] [21]. Assumes normally distributed data [21]. |

| Robust Z-score | A variation of the Z-score that uses median and Median Absolute Deviation (MAD) instead of mean and standard deviation: ( Z_{\text{robust}} = \frac{x - \text{median}(x)}{\text{MAD}(x)} ) [21]. | Resistant to the influence of outliers and a large number of hits on a plate [21]. More reliable for hit identification in screens with high hit rates. | Less commonly available as a built-in function in some software packages, potentially requiring manual calculation. |

The following workflow diagram illustrates the general decision-making process for selecting and applying these normalization methods.

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful screening and normalization depend on the quality of the underlying assay and materials.

Table 2: Key Research Reagent Solutions for HTS Assay Development

| Item | Function | Considerations for Mitigating Spatial Bias |

|---|---|---|

| Microplates (96-, 384-, 1536-well) | Platform for conducting miniaturized assays. | Material (e.g., polystyrene, polypropylene) and surface treatment can affect evaporation and cell binding. Use plates with low evaporation lids [29]. |

| Positive/Negative Controls | Reference points for defining high and low assay signals. | Strategic placement throughout the plate (not just edges) helps correct for spatial gradients. Format of library plates may limit control placement [28] [29]. |

| Cell Viability Assay Kits | Measure cellular metabolic activity or cytotoxicity (e.g., CellTiter-Glo, MTT). | Cell-based assays are more variable. Validate using Z' factor to ensure robust performance despite spatial effects [31]. |

| Enzyme Activity Assay Reagents | Measure target enzyme inhibition or activation (e.g., kinase assays). | Biochemical assays are generally less variable. Use to establish a high-quality baseline for normalization [31]. |

| Humidity-Controlled Incubator | Provides stable environment for cell-based assays. | Critical for reducing edge effects caused by evaporation in outer wells [28] [29]. |

Frequently Asked Questions (FAQs) and Troubleshooting

What defines an acceptable Z' factor for my assay, and how does it relate to normalization?

A Z' factor greater than 0.5 indicates an excellent assay suitable for HTS. A Z' between 0 and 0.5 is marginal but may be acceptable, while a value less than 0 indicates an unusable assay [31].

- Relationship to Normalization: The Z' factor evaluates assay quality based on control wells before screening. A high Z' factor means your assay has a wide dynamic range and low variability, creating a favorable foundation for any subsequent normalization method. Normalization techniques like B-score and Z-score are then applied to the raw sample data from the screening run to correct for spatial and plate-to-plate biases [31].
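As a worked illustration of the thresholds above, the sketch below computes Z' from control-well readings using the standard definition, Z' = 1 − 3(σ_pos + σ_neg) / |μ_pos − μ_neg|. The function name `z_prime` and the example readings are hypothetical; the sample standard deviation is used here, which is an assumption about how the controls are summarized.

```python
from statistics import mean, stdev

def z_prime(pos_controls, neg_controls):
    """Z' factor from positive- and negative-control well readings."""
    separation = abs(mean(pos_controls) - mean(neg_controls))
    spread = stdev(pos_controls) + stdev(neg_controls)
    return 1.0 - 3.0 * spread / separation

# Hypothetical control readings from one plate
pos = [100, 102, 98, 101, 99]
neg = [10, 12, 8, 11, 9]
print(round(z_prime(pos, neg), 3))
```

A wide gap between control means with tight replicates pushes Z' toward 1, while overlapping control distributions drive it below 0.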

My B-score normalization removed all signal from my plate. What went wrong?

This can happen if the B-score's median polish algorithm mistakes a strong, consistent biological signal for a systematic spatial effect.

- Solution: Verify if your screen has a very high hit rate or if active compounds are clustered in specific rows/columns. The B-score is best for correcting technical artifacts (e.g., dispenser tip error) and assumes most compounds are inactive [28] [21]. For screens with many active features, consider methods like Control-Plate Regression (CPR), which uses dedicated control plates to estimate systematic error without relying on sample data [32].

After Z-score normalization, my positive control is no longer a hit. Is this normal?

Yes, this is a known limitation of the Z-score method. The Z-score calculates the mean and standard deviation from all wells on the plate, including your positive controls and other potential hits.

- Solution: If your positive controls are true strong activators/inhibitors, they are outliers that will inflate the plate's standard deviation (σ_z). This pulls the Z-scores of all compounds toward zero, potentially masking true hits [28]. Use the Robust Z-score (using median and MAD) or the Interquartile Mean (IQM) normalization method, as these are resistant to outliers and will preserve the signal of your controls [28].

How can I proactively design my microplate layout to minimize spatial bias?

Intelligent plate design is a powerful first step in reducing the burden on normalization.

- Best Practice: Where possible, randomize the placement of samples and controls across the plate. This prevents biological signals from being confounded with positional effects. For dose-response experiments, use specialized software or constraint programming models to design layouts that reduce bias and limit the impact of batch effects before normalization is applied [22].
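The simplest form of this practice, full randomization of samples and controls over a plate, can be sketched as follows. This is not the constraint-programming approach cited above; the function name `randomized_layout`, the `"empty"` filler label, and the default 8 x 12 (96-well) geometry are assumptions for illustration.

```python
import random

def randomized_layout(samples, controls, n_rows=8, n_cols=12, seed=0):
    """Assign samples and controls to wells in random order.

    Returns a dict mapping well names ("A1" ... "H12") to contents.
    A fixed seed keeps the layout reproducible for record keeping.
    """
    wells = [f"{chr(ord('A') + r)}{c + 1}"
             for r in range(n_rows) for c in range(n_cols)]
    contents = list(samples) + list(controls)
    if len(contents) > len(wells):
        raise ValueError("more samples and controls than wells")
    # Pad unused positions so every well has an explicit label
    contents += ["empty"] * (len(wells) - len(contents))
    rng = random.Random(seed)
    rng.shuffle(contents)
    return dict(zip(wells, contents))
```

Because controls end up scattered across interior and edge wells, a positional gradient affects them in the same way it affects samples, rather than being confounded with a single row or column.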

Experimental Protocols

Protocol 1: Implementing B-score Normalization in R

The B-score correction is a robust method for addressing spatial bias on a per-plate basis.

Methodology:

- Data Input: Begin with a configured cellHTS object containing your raw intensity data (xraw) [30].

- Model Fitting: For each plate, a two-way median polish is fitted to the matrix of measured values. This model estimates:

  - ( \mu_p ): The overall plate median.

  - ( R_{ip} ): The systematic offset for row ( i ).

  - ( C_{jp} ): The systematic offset for column ( j ) [30].

- Residual Calculation: The residual ( r_{ijp} ) for each well is calculated as: ( r_{ijp} = y_{ijp} - (\mu_p + R_{ip} + C_{jp}) ). This residual represents the value after removing the estimated row and column effects [30].

- Scaling (Optional): To standardize for plate-to-plate variability, the residuals can be divided by the Median Absolute Deviation (MAD) of the residuals on the plate, yielding the final B-score: ( \text{B-score}_{ijp} = r_{ijp} / \text{MAD}_p ) [30].
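The model-fitting, residual, and scaling steps above can be sketched in plain Python for a single plate. This is an illustrative implementation of Tukey-style median polish, not the cellHTS code path: the function names, the fixed iteration count, and the synthetic plate produced by `make_demo_plate` are all assumptions. The MAD is used unscaled, as in the formula above.

```python
import random
from statistics import median

def median_polish(plate, n_iter=10):
    """Two-way median polish: returns (overall, row_eff, col_eff, residuals)."""
    resid = [list(row) for row in plate]
    n_rows, n_cols = len(resid), len(resid[0])
    overall, row_eff, col_eff = 0.0, [0.0] * n_rows, [0.0] * n_cols
    for _ in range(n_iter):
        # Sweep row medians out of the residuals into the row effects
        for i in range(n_rows):
            m = median(resid[i])
            row_eff[i] += m
            resid[i] = [v - m for v in resid[i]]
        m = median(col_eff)
        overall += m
        col_eff = [c - m for c in col_eff]
        # Sweep column medians out of the residuals into the column effects
        for j in range(n_cols):
            m = median(resid[i][j] for i in range(n_rows))
            col_eff[j] += m
            for i in range(n_rows):
                resid[i][j] -= m
        m = median(row_eff)
        overall += m
        row_eff = [r - m for r in row_eff]
    return overall, row_eff, col_eff, resid

def b_scores(plate, n_iter=10):
    """Residuals from median polish divided by their plate-wide MAD."""
    _, _, _, resid = median_polish(plate, n_iter)
    flat = [v for row in resid for v in row]
    center = median(flat)
    mad = median(abs(v - center) for v in flat)
    if mad == 0:
        raise ValueError("MAD of residuals is zero; B-score is undefined")
    return [[v / mad for v in row] for row in resid]

def make_demo_plate(seed=0):
    """Synthetic 8 x 12 plate: row/column gradient, noise, one strong inhibitor."""
    rng = random.Random(seed)
    plate = [[100 + 2 * i + 3 * j + rng.gauss(0, 1) for j in range(12)]
             for i in range(8)]
    plate[3][5] -= 50  # hypothetical hit in well D6
    return plate
```

On the synthetic plate, the row and column gradient is absorbed into the row and column effects, so the well with the injected drop in signal stands out as the most negative B-score.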

R Code Example:

Protocol 2: Calculating Z-score and Robust Z-score

These methods provide a simpler, plate-based standardization, with the Robust Z-score offering greater protection against outliers.

Methodology for Z-score:

- For a given plate, calculate the mean ( \mu_z ) and standard deviation ( \sigma_z ) of all sample well values [28] [21].

- For each well value ( z ), apply the formula: ( Z = \frac{z - \mu_z}{\sigma_z} ) [28] [21].

Methodology for Robust Z-score:

- For a given plate, calculate the median and Median Absolute Deviation (MAD) of all sample well values. The MAD is calculated as ( \text{MAD} = \text{median}( | x_i - \text{median}(x) | ) ) [21].

- For each well value ( x ), apply the formula: ( Z_{\text{robust}} = \frac{x - \text{median}(x)}{\text{MAD}(x)} ) [21].
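Both calculations can be sketched in a few lines of Python. The function names are hypothetical, the population standard deviation is used to match the spreadsheet function STDEV.P, and the MAD is left unscaled as in the formula above (some implementations multiply it by 1.4826 so that it estimates the standard deviation for normally distributed data).

```python
from statistics import mean, median, pstdev

def z_scores(values):
    """Classical Z-score using the plate mean and population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def robust_z_scores(values):
    """Robust Z-score using the plate median and unscaled MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    return [(v - med) / mad for v in values]

# Hypothetical 96-well plate: 90 inactive wells near 100 and 6 strong inhibitors at 20
values = [98, 99, 100, 101, 102] * 18 + [20] * 6
print(round(z_scores(values)[-1], 2), robust_z_scores(values)[-1])
```

In this example the six strong inhibitors inflate the standard deviation, so their classical Z-score is far smaller in magnitude than their robust Z-score, which is the masking effect described for plates with many hits.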

Spreadsheet/Excel Example:

| | A | B | C |

|---|---|---|---|

| 1 | Well | Raw Value | Formula |

| 2 | A1 | 105 | =(B2-AVERAGE($B$2:$B$97))/STDEV.P($B$2:$B$97) |

| 3 | A2 | 98 | ... (copy down) |

| ... | ... | ... | ... |

| 97 | H12 | 110 | ... |

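For Robust Z-score, replace AVERAGE with MEDIAN and STDEV.P with the MAD. Excel has no built-in MAD function, so compute it separately, for example with =MEDIAN(ABS($B$2:$B$97-MEDIAN($B$2:$B$97))), entered as an array formula in Excel versions without dynamic arrays.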

Frequently Asked Questions (FAQs)

Q1: What is spatial bias in microtiter plate-based assays, and why is it a problem? Spatial bias is a systematic error where measurements in specific well locations on a microtiter plate are consistently over- or underestimated due to factors like reagent evaporation, liquid handling errors, or cell decay. This bias often manifests as row or column effects, particularly on plate edges, and can significantly increase false positive and false negative rates during hit identification, jeopardizing the reliability and cost-efficiency of drug discovery campaigns [14].

Q2: How do I know if my data is affected by additive or multiplicative bias? Statistical testing is required to determine the bias type. A common method involves applying both the additive and multiplicative Partial Mean Polish (PMP) correction algorithms and then using statistical tests like the Mann-Whitney U test or the Kolmogorov-Smirnov two-sample test to see which model better normalizes the data. The model that results in a higher p-value (e.g., > 0.05) is typically selected as the better fit for that specific plate [14].

Q3: What is the difference between assay-specific and plate-specific bias?

- Assay-specific bias occurs when a consistent bias pattern appears across all plates within a given assay.

- Plate-specific bias is unique to an individual plate within an assay. Both types of bias can coexist, and it is critical to correct for both to ensure high-quality hit selection [14].

Q4: Are there experimental designs that can help mitigate spatial bias? Yes, alongside computational correction, specialized plate layouts can reduce bias. Block randomization is one such scheme, where specific curve regions are coordinated into pre-defined blocks on the plate, which has been shown to reduce mean bias in relative potency estimates from 6.3% to 1.1% [1]. More recently, methods using artificial intelligence and constraint programming have been developed to design optimal layouts that proactively reduce the impact of spatial bias [22].

Q5: What software is available to implement these corrections? The AssayCorrector program, implemented in R and available on the Comprehensive R Archive Network (CRAN), includes the proposed additive and multiplicative PMP algorithms for spatial bias correction [15].

Troubleshooting Guides

Issue 1: High False Positive/Negative Rates After Hit Selection

Problem: The hit selection process, using a threshold such as ( \mu_p - 3\sigma_p ) (the plate mean minus three standard deviations), identifies an unexpected number of active compounds, which may be driven by spatial bias rather than true biological activity.

Solution: Apply a robust correction procedure that accounts for both additive and multiplicative biases.

Investigation & Resolution Steps:

- Visual Inspection: Create a heatmap of the raw measurements from each plate. Look for clear spatial patterns, such as gradient effects from the center to the edges or systematic variations in specific rows or columns.

- Statistical Testing for Bias Type:

- For each plate, apply both the additive and multiplicative PMP correction algorithms.

- Follow this with a statistical test (e.g., Mann-Whitney U test) to compare the residuals from both models.

- Select the correction model (additive or multiplicative) that provides a better fit (higher p-value) for each individual plate [14].

- Apply Assay-Specific Correction: After correcting for plate-specific effects, apply an assay-level correction, such as robust Z-score normalization, to address systematic bias affecting specific well locations across all plates in the assay [14].

- Re-evaluate Hits: Perform hit selection on the fully corrected data and compare the results with the initial list.
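The assay-specific correction step above can be read as a robust Z-score computed per well location across all plates of the assay. The sketch below follows that reading, which is an interpretation rather than the implementation in [14]; the function name `well_wise_robust_z` and the choice to return 0.0 when a well's MAD is zero are assumptions.

```python
from statistics import median

def well_wise_robust_z(plates):
    """Robust Z-score per well position across plates.

    plates: list of plates of identical shape, each a list of rows,
    assumed to have been corrected for plate-specific bias already.
    """
    n_rows, n_cols = len(plates[0]), len(plates[0][0])
    corrected = [[[0.0] * n_cols for _ in range(n_rows)] for _ in plates]
    for i in range(n_rows):
        for j in range(n_cols):
            # All readings taken at the same well location across the assay
            vals = [plate[i][j] for plate in plates]
            med = median(vals)
            mad = median(abs(v - med) for v in vals)
            for k, v in enumerate(vals):
                # Assumption: a constant well position carries no signal
                corrected[k][i][j] = (v - med) / mad if mad > 0 else 0.0
    return corrected
```

A well location that reads consistently high or low on every plate is centred by its own median, so a persistent positional offset no longer pushes compounds in that location past the hit threshold.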