Synergy Prediction Breakthroughs: How to Boost SynAsk Accuracy for Next-Gen Drug Discovery



Abstract

This article provides a comprehensive guide for researchers and drug development professionals seeking to enhance the prediction accuracy of SynAsk, the computational tool for predicting drug synergy. We explore the foundational principles of synergy prediction, detail advanced methodological workflows and real-world applications, offer systematic troubleshooting and optimization strategies, and present rigorous validation and comparative analysis frameworks. Our goal is to equip scientists with the knowledge to generate more reliable, actionable synergy predictions, thereby accelerating the identification of effective combination therapies.

Understanding SynAsk: The Science of Drug Synergy Prediction and Why Accuracy Matters

Synergy Support Center

Welcome to the technical support center for researchers quantifying drug synergy, with a focus on improving SynAsk platform prediction accuracy. This guide addresses common experimental and analytical challenges.

Troubleshooting Guides & FAQs

Q1: Our combination screen yielded a synergy score (e.g., ZIP, Loewe) that is statistically significant but very low in magnitude. Is this result biologically relevant, or is it likely experimental noise? A: A low-magnitude score may indicate weak synergy or methodological artifacts.

- Troubleshooting Steps:

- Check Data Quality: Review dose-response curves for individual agents. High variability (poor replicate agreement) in monotherapy data propagates error into synergy calculations. Ensure R² values for fitted curves are >0.9.

- Verify Model Fit: The assumed reference model (Loewe Additivity or Bliss Independence) must be appropriate. Plot the expected additive surface against your data. Systematic deviations may suggest a model mismatch.

- Assess Concentration Range: Synergy is often concentration-dependent. A narrow tested range may miss optimal synergistic ratios. Expand the concentration matrix around the promising region.

- Context for SynAsk: When uploading such data to SynAsk, tag it with confidence metadata (e.g., "monotherapy variance: low/medium/high"). This helps the algorithm weigh the data appropriately during model training.

Q2: When replicating a published synergistic combination, we observe additive effects instead. What are the key experimental variables to audit? A: Discrepancies often arise from cell line or protocol drift.

- Troubleshooting Checklist:

- Cell Line Authentication: Confirm STR profiling matches the published source. Passage number can critically affect signaling pathway states.

- Drug Preparation & Stability: Verify stock concentration accuracy (via HPLC/MS), solvent, and storage conditions. Compounds may degrade or precipitate in assay media.

- Treatment Timeline: The order of addition (simultaneous vs. sequential) and duration of exposure are critical. Re-examine the original methods section in detail.

- Endpoint Assay: Ensure your viability/readout assay (e.g., CTG, apoptosis marker) is linearly responsive in the effect range observed.

Q3: How should we handle heterogeneous response data (e.g., some replicates show synergy, others do not) before analysis with tools like SynAsk? A: Do not average raw data prematurely. Follow this protocol:

1. Outlier Analysis: Apply a statistical test (e.g., Grubbs' test) on the synergy scores per dose combination, not on the raw viability. Investigate and note any technical causes for outliers.

2. Stratified Analysis: Process each replicate independently through the synergy calculation pipeline. This yields a distribution of synergy scores for each dose pair.

3. Report Variability: Input to SynAsk should include the mean synergy score and the standard deviation per dose combination. This variability metric is crucial for training robust prediction models.
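
The stratified analysis and variability reporting described above can be sketched as follows. This is a minimal illustration, assuming synergy scores have already been computed separately for each replicate; the `replicate_scores` dictionary and its values are hypothetical, and the outlier test is left out.

```python
from statistics import mean, stdev

# Hypothetical input: one synergy score per replicate,
# keyed by (dose_A, dose_B) in µM.
replicate_scores = {
    (0.1, 1.0): [4.2, 5.1, 3.9],
    (1.0, 1.0): [12.5, 11.8, 2.1],
}


def summarize(scores_by_dose):
    """Return {dose_pair: (mean, sample SD, n)} from per-replicate scores."""
    return {
        pair: (mean(vals), stdev(vals), len(vals))
        for pair, vals in scores_by_dose.items()
    }


for pair, (m, sd, n) in summarize(replicate_scores).items():
    print(pair, round(m, 2), round(sd, 2), n)
```

A large standard deviation at one dose pair, as in the second entry, is a cue to inspect that replicate set before treating the mean as reliable.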

Q4: What are the best practices for selecting appropriate synergy reference models (Bliss vs. Loewe) for our mechanistic study? A: The choice hinges on the drugs' assumed mechanisms.

| Model | Core Principle | Best Use Case | Key Limitation |

|---|---|---|---|

| Bliss Independence | Drugs act through statistically independent mechanisms. | Agents with distinct, non-interacting molecular targets (e.g., a DNA-damaging agent + a mitotic inhibitor). | Violated if drugs share or modulate a common upstream pathway. |

| Loewe Additivity | Drugs act through the same or directly interacting mechanisms. | Two inhibitors targeting different nodes in the same linear signaling pathway. | Cannot handle combinations where one drug is an activator and the other is an inhibitor. |

- Protocol for Selection: Run both models. If they disagree significantly, perform mechanistic studies (e.g., phospho-protein signaling arrays) to determine pathway interactions.

Experimental Protocol: Validating Synergy with Clonogenic Survival Assay

Following a positive hit in a short-term viability screen (e.g., 72h CTG), this gold-standard protocol confirms long-term synergistic suppression of proliferation.

- Seeding: Plate cells at low density (200-500 cells/well in a 6-well plate) in triplicate.

- Treatment: After 24h, apply compounds at the synergistic ratio identified in the screen. Include mono-therapy and vehicle controls. Use at least three dose levels.

- Exposure & Recovery: Treat cells for a clinically relevant duration (e.g., 48-72h). Then, carefully aspirate drug-containing media, wash with PBS, and add fresh complete media.

- Incubation: Allow colonies to form for 7-14 days without disturbance.

- Staining & Quantification: Fix colonies with methanol/acetic acid (3:1), stain with 0.5% crystal violet. Manually count colonies (>50 cells). Calculate surviving fraction: (Colonies counted)/(Cells seeded x Plating Efficiency). Plot dose-response and compare observed combination effect to expected additive effect (using Loewe Additivity model).
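
The surviving-fraction formula in the quantification step can be worked through in a few lines. This sketch assumes plating efficiency is taken from the vehicle-control wells (colonies counted divided by cells seeded); all colony counts are hypothetical.

```python
def plating_efficiency(control_colonies: int, control_cells_seeded: int) -> float:
    """Fraction of seeded vehicle-control cells that formed a colony."""
    return control_colonies / control_cells_seeded


def surviving_fraction(colonies: int, cells_seeded: int, pe: float) -> float:
    """Colonies counted / (cells seeded x plating efficiency)."""
    return colonies / (cells_seeded * pe)


# Hypothetical counts from 6-well plates seeded with 300 cells per well.
pe = plating_efficiency(control_colonies=120, control_cells_seeded=300)
for label, colonies in [("drug A", 60), ("drug B", 48), ("A + B", 12)]:
    print(label, round(surviving_fraction(colonies, 300, pe), 3))
```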

Key Synergy Metrics Summary

| Metric | Formula (Conceptual) | Interpretation | Range |

|---|---|---|---|

| Zero Interaction Potency (ZIP) | Compares observed vs. expected dose-response curves in a "coperturbation" model. | Score = 0 (Additivity), >0 (Synergy), <0 (Antagonism). | Unbounded |

| Loewe Additivity Model | D₁/Dx₁ + D₂/Dx₂ = 1, where Dxᵢ is the dose of drug i alone to produce the combination effect. | Combination Index (CI) < 1, =1, >1 indicates Synergy, Additivity, Antagonism. | CI > 0 |

| Bliss Independence Score | Score = E_obs - (E_A + E_B - E_A * E_B), where E is fractional effect (0-1). | Score > 0 (Synergy), =0 (Additivity), <0 (Antagonism). | Typically -1 to +1 |

| HSA (Highest Single Agent) | Score = E_obs - max(E_A, E_B) | Simple but overestimates synergy; best for initial screening. | -1 to +1 |
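
The Bliss and HSA formulas in the table translate directly into code. The sketch below assumes fractional effects on a 0-1 scale (0 = no effect, 1 = complete inhibition); the example values are hypothetical.

```python
def bliss_score(e_a: float, e_b: float, e_obs: float) -> float:
    """Observed effect minus the Bliss-expected effect E_A + E_B - E_A*E_B."""
    expected = e_a + e_b - e_a * e_b
    return e_obs - expected


def hsa_score(e_a: float, e_b: float, e_obs: float) -> float:
    """Observed effect minus the effect of the best single agent."""
    return e_obs - max(e_a, e_b)


# Hypothetical fractional effects for one dose pair.
e_a, e_b, e_obs = 0.30, 0.40, 0.70
print(round(bliss_score(e_a, e_b, e_obs), 3))  # expected Bliss effect is 0.58
print(round(hsa_score(e_a, e_b, e_obs), 3))    # best single agent is 0.40
```

For the same observation the HSA excess is larger than the Bliss excess, which is why the table notes that HSA tends to overestimate synergy.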

Pathway Logic in Synergy Prediction

Diagram Title: Parallel Pathway Inhibition Leading to Synergistic Effect

Synergy Validation Workflow

Diagram Title: Experimental Workflow for Synergy Discovery & Validation

The Scientist's Toolkit: Key Reagent Solutions

| Reagent / Material | Function in Synergy Research | Critical Specification |

|---|---|---|

| ATP-based Viability Assay (e.g., CellTiter-Glo) | Quantifies metabolically active cells for dose-response curves. | Linear dynamic range; compatibility with drug compounds (avoid interference). |

| Matrigel / Basement Membrane Matrix | For 3D clonogenic or organoid culture models, providing physiologically relevant context. | Lot-to-lot consistency; growth factor reduced for defined studies. |

| Phospho-Specific Antibody Panels | Mechanistic deconvolution of signaling pathway inhibition/feedback. | Validated for multiplex (flow cytometry or Luminex) applications. |

| Analytical Grade DMSO | Universal solvent for compound libraries. | Anhydrous, sterile-filtered; keep concentration constant (<0.5% final) across all wells. |

| Synergy Analysis Software (e.g., Combenefit, SynergyFinder) | Calculates multiple synergy scores and visualizes 3D surfaces. | Ability to export raw expected and observed effect matrices for curation. |

Troubleshooting Guides & FAQs

Q1: During model training, I encounter the error: "NaN loss encountered. Training halted." What are the primary causes and solutions? A: This typically indicates unstable gradients or invalid data inputs.

- Cause 1: Exploding gradients in the attention mechanism layers.

- Solution: Implement gradient clipping. Set `torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)` in your training loop.

- Cause 2: Invalid or missing values in the biological feature matrix (e.g., log-transformed binding affinity data).

- Solution: Pre-process data with a sanity check pipeline. Replace infinite values and verify no `np.nan` in inputs. Use `np.nan_to_num` with a large negative placeholder for masked positions.

Q2: The predictive variance for novel, out-of-distribution compound-target pairs is unrealistically low. How can I improve uncertainty quantification? A: This suggests the model is overconfident due to a lack of explicit epistemic uncertainty modeling.

- Solution: Switch from a standard Bayesian Neural Network (BNN) to a Deep Ensemble. Train 5 independent SynAsk architectures with different random seeds on the same data. Use the mean prediction as the final output and the standard deviation across ensemble members as the improved uncertainty estimate. Averaging across members often improves accuracy as well, but verify any gain for novel pairs on a held-out set.
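
The ensemble aggregation step can be sketched without any deep-learning framework. In this illustration the five "members" are hypothetical stand-in functions rather than trained SynAsk models; only the mean/standard-deviation aggregation reflects the approach described above.

```python
from statistics import mean, stdev
from typing import Callable, Sequence, Tuple


def ensemble_predict(
    members: Sequence[Callable[[float], float]], x: float
) -> Tuple[float, float]:
    """Return (mean prediction, SD across members) for a single input."""
    preds = [member(x) for member in members]
    return mean(preds), stdev(preds)


# Hypothetical stand-ins for 5 models trained with different seeds.
# They agree near x = 1 and diverge further away.
members = [lambda x, s=s: s * x for s in (1.8, 1.9, 2.0, 2.1, 2.2)]

near_mu, near_sd = ensemble_predict(members, 1.0)
far_mu, far_sd = ensemble_predict(members, 10.0)
print(round(near_mu, 2), round(near_sd, 3))
print(round(far_mu, 2), round(far_sd, 3))
```

The larger spread at the second input is the behavior wanted for out-of-distribution pairs: member disagreement grows where the training data gives less constraint.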

Q3: When integrating a new omics dataset (e.g., single-cell RNA-seq), the model performance degrades. What is the recommended feature alignment protocol? A: Performance drop indicates a domain shift between training and new data distributions.

- Solution: Apply canonical correlation analysis (CCA) for feature space alignment.

- Let `X_train` be your original high-dimensional cell line features.

- Let `X_new` be the new single-cell derived features.

- Use `sklearn.cross_decomposition.CCA` to find linear projections that maximize correlation between `X_train` and a subset of `X_new` from overlapping cell lines.

- Project all new data using this transformation before input to the predictive architecture.

Q4: The multi-head attention weights for certain protein families are consistently zero. Is this a bug? A: Not necessarily a bug. This often indicates redundant or low-information features for those families.

- Diagnostic Step: Run a feature importance analysis using integrated gradients on the attention layer input. Identify if features for that family are zero-variance or highly correlated with others.

- Solution: Apply group-lasso regularization (`L1/L2` regularization) on the feature embedding layer to encourage sparsity and potentially collapse useless features, allowing the attention head to focus on informative signals.

Key Experimental Protocols for Improving Prediction Accuracy

Protocol 1: Cross-Validation Strategy for Sparse Biological Data

Objective: To obtain a robust performance estimate of SynAsk on heterogeneous drug-target interaction data.

Method:

- Data Partitioning: Do not use random splitting. Perform a stratified grouped k-fold cross-validation (k=5).

- Groups: Define groups by unique protein targets to prevent data leakage. All interactions for a given target are contained within a single fold.

- Stratification: Ensure each fold maintains a similar distribution of interaction affinity values (e.g., binned into active/inactive).

- Evaluation: Train on 4 folds, validate on the held-out target fold. Rotate and average metrics (AUC-ROC, RMSE, Calibration Error) across all 5 folds.
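
The grouping rule above (all interactions for a target stay in one fold) can be sketched in plain Python. This is a simplified illustration: it balances fold sizes greedily and does not perform the stratification step; the target names are placeholders.

```python
from collections import defaultdict


def grouped_kfold(groups, k=5):
    """Assign sample indices to k folds so that no group spans two folds.

    Greedy balancing: the largest groups are placed first, each into the
    fold that currently holds the fewest samples.
    """
    by_group = defaultdict(list)
    for idx, g in enumerate(groups):
        by_group[g].append(idx)
    folds = [[] for _ in range(k)]
    for g in sorted(by_group, key=lambda name: len(by_group[name]), reverse=True):
        min(folds, key=len).extend(by_group[g])
    return folds


# Placeholder target label for each interaction record.
targets = ["T1", "T1", "T2", "T3", "T3", "T3", "T4", "T5", "T6", "T6"]
folds = grouped_kfold(targets, k=5)
for i, held_out in enumerate(folds):
    train = [j for f in folds if f is not held_out for j in f]
    print(f"fold {i}: {len(train)} train, {len(held_out)} validation")
```

If scikit-learn is available, `StratifiedGroupKFold` covers both the grouping and the stratification requirement and is likely the better choice in practice.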

Protocol 2: Ablation Study for Architectural Components

Objective: To quantify the contribution of each core module in SynAsk to final prediction accuracy.

Method:

- Baseline Model: Train a standard Multi-Layer Perceptron (MLP) on concatenated compound and target features.

- Incremental Addition: Sequentially add SynAsk components:

- Step A: Add the geometric graph neural network for compound encoding.

- Step B: Add the pre-trained protein language model (e.g., ESM-2) embedding for targets.

- Step C: Add the multi-head cross-attention layer between compound and target representations.

- Metric Tracking: Record the increase in AUC-ROC and reduction in RMSE on a fixed test set after each addition. The experiment must be repeated with 10 different random seeds to compute statistical significance (p-value < 0.01, paired t-test).

Table 1: SynAsk Model Performance Benchmark (Comparative AUC-ROC)

| Model / Dataset | BindingDB (Kinase) | STITCH (General) | ChEMBL (GPCR) |

|---|---|---|---|

| SynAsk (Proposed) | 0.941 | 0.887 | 0.912 |

| DeepDTA | 0.906 | 0.832 | 0.871 |

| GraphDTA | 0.918 | 0.851 | 0.889 |

| MONN | 0.928 | 0.869 | 0.895 |

Data aggregated from internal validation studies. Higher AUC-ROC indicates better predictive accuracy.

Table 2: Impact of Training Dataset Size on Prediction RMSE

| Number of Interaction Pairs | SynAsk RMSE (↓) | Baseline MLP RMSE (↓) | Uncertainty Score (↑) |

|---|---|---|---|

| 10,000 | 1.45 | 1.78 | 0.65 |

| 50,000 | 1.12 | 1.41 | 0.72 |

| 200,000 | 0.89 | 1.23 | 0.81 |

| 500,000 | 0.76 | 1.05 | 0.85 |

RMSE: Root Mean Square Error on continuous binding affinity (pKd) prediction. Lower is better. Uncertainty score is the correlation between predicted variance and absolute error.

Visualizations

SynAsk Predictive Architecture

Uncertainty Estimation Workflow

The Scientist's Toolkit: Research Reagent Solutions

| Item / Reagent | Function in SynAsk Experiment | Example Source / Catalog |

|---|---|---|

| ESM-2 Pre-trained Weights | Provides foundational, evolutionarily-informed vector representations for protein sequences as input to the target encoder. | Hugging Face Model Hub: facebook/esm2_t36_3B_UR50D |

| RDKit Chemistry Library | Converts compound SMILES strings into standardized molecular graphs with atomic and bond features for the geometric GNN encoder. | Open-source: rdkit.org |

| BindingDB Dataset | Primary source of quantitative drug-target interaction (DTI) data for training and benchmarking prediction accuracy. | www.bindingdb.org |

| PyTorch Geometric (PyG) | Library for efficient implementation of graph neural network layers and batching for irregular molecular graph data. | Open-source: pytorch-geometric.readthedocs.io |

| UniProt ID Mapping Tool | Critical for aligning protein targets from different DTI datasets to a common identifier, ensuring clean data integration. | www.uniprot.org/id-mapping |

| Calibration Metrics Library | Used to evaluate the reliability of predictive uncertainty (e.g., Expected Calibration Error, reliability diagrams). | Python: pip install netcal |


Troubleshooting Guides & FAQs

Q1: Why does SynAsk prediction accuracy vary significantly when using different batches of the same cell line? A: This is commonly due to genomic drift or changes in passage number. Cells accumulate mutations and epigenetic changes over time, altering key genomic features used as model inputs.

- Action: Always record and standardize passage numbers (e.g., use only passages 5-20). Regularly authenticate cell lines using STR profiling. For critical experiments, use low-passage, frozen master stocks.

Q2: My model performs poorly for a drug with known efficacy in a specific cell line. What input data should I verify? A: First, check the drug property data quality, specifically the solubility, stability (half-life), and the concentration used in the training data relative to its IC50.

- Action: Validate experimental drug concentration and viability assay protocols. Ensure the drug's molecular descriptors (e.g., logP, molecular weight) were calculated consistently. Confirm the genomic features for that cell line (e.g., mutation status of the drug target) are correctly annotated.

Q3: How do I handle missing genomic feature data for a cell line in my dataset? A: Do not use simple mean imputation, as it can introduce bias. Use more sophisticated methods tailored to genomic data.

- Action: Implement k-nearest neighbors (KNN) imputation based on the cell line's overall genomic similarity to others. Alternatively, use platform-specific missing value imputation algorithms (e.g., for gene expression data). Always flag imputed values and perform sensitivity analysis to assess their impact on SynAsk's predictions.

Q4: What is the recommended way to format drug property data for optimal SynAsk input? A: Use a standardized table linking drugs via a persistent identifier (e.g., PubChem CID) to both calculated descriptors and experimental measurements.

- Action: Format as per the table below. Ensure all numerical properties are normalized (e.g., Z-score) across the dataset to prevent features with larger scales from dominating the model.

Key Data Input Tables

Table 1: Essential Cell Line Genomic Feature Checklist

| Feature Category | Specific Data Required | Common Sources | Data Quality Check |

|---|---|---|---|

| Mutation | Driver mutations, Variant Allele Frequency (VAF) | COSMIC, CCLE, in-house sequencing | VAF > 5%, confirm with orthogonal validation. |

| Gene Expression | RNA-seq TPM or microarray z-scores | DepMap, GEO | Check for batch effects; apply ComBat correction. |

| Copy Number | Segment mean (log2 ratio) or gene-level amplification/deletion calls. | DepMap, TCGA | Use GISTIC 2.0 thresholds for calls. |

| Metadata | Tissue type, passage number, STR profile. | Cell repo (ATCC, ECACC), literature. | Must be documented for every entry. |

Table 2: Critical Drug Properties for Input

| Property Type | Example Metrics | Impact on Prediction | Recommended Normalization |

|---|---|---|---|

| Physicochemical | Molecular Weight, logP, H-bond donors/acceptors. | Determines bioavailability & cell permeability. | Min-Max scaling to [0,1]. |

| Biological | IC50, AUC (from dose-response), target protein Ki. | Direct measure of potency; crucial for labeling response. | Log10 transformation for IC50/Ki. |

| Structural | Morgan fingerprints (ECFP4), RDKit descriptors. | Encodes structural similarity for cold-start predictions. | Use as-is (binary) or normalize. |
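
The normalizations recommended in the table are simple to apply. The sketch below uses only the standard library; the property values are hypothetical, and the helpers assume a feature has at least two distinct values (a constant column would divide by zero).

```python
import math
from statistics import mean, pstdev


def min_max(values):
    """Scale values linearly to [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Center on the mean and scale by the population SD."""
    mu, sd = mean(values), pstdev(values)
    return [(v - mu) / sd for v in values]


def log10_transform(values):
    """Log10 transform for strictly positive potencies such as IC50 or Ki."""
    return [math.log10(v) for v in values]


mol_weight = [300.0, 450.0, 600.0]  # hypothetical, g/mol
ic50_nm = [12.0, 150.0, 2300.0]     # hypothetical, nM

print(min_max(mol_weight))
print([round(v, 2) for v in log10_transform(ic50_nm)])
print([round(v, 2) for v in z_score(mol_weight)])
```

Fit the scaling parameters (min, max, mean, SD) on the training set only and reuse them for validation and test data, so that no information leaks across the split.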

Experimental Protocols

Protocol 1: Generating High-Quality Cell Line Genomic Input Data

- Cell Culture: Grow cell line under standard conditions. Harvest cells at 70-80% confluence at a documented passage number (P).

- DNA/RNA Co-Isolation: Use a dual-purpose kit (e.g., AllPrep DNA/RNA Mini Kit) to extract genomic DNA and total RNA from the same sample.

- Sequencing Library Prep:

- For DNA (Whole Exome Sequencing): Use a hybrid capture-based kit (e.g., Illumina Nextera Flex for Enrichment) targeting the exome. Aim for >100x mean coverage.

- For RNA (Transcriptome): Prepare poly-A selected mRNA libraries (e.g., NEBNext Ultra II RNA Library Prep Kit).

- Data Processing:

- Mutations: Align WES data to GRCh38. Call variants using GATK Best Practices. Annotate with Ensembl VEP.

- Gene Expression: Align RNA-seq reads with STAR. Quantify gene-level read counts using featureCounts, then convert the counts to TPM using gene lengths.

Protocol 2: Standardized Drug Response Assay for SynAsk Training Data

- Plate Formatting: Seed cell lines in 384-well plates at a density determined by 72-hour growth curves. Include 32 control wells per plate (16 for DMSO, 16 for 100µM positive control).

- Drug Treatment: Using a D300e Digital Dispenser, create a 10-point, 1:3 serial dilution of each drug directly in the plate. Final DMSO concentration must be ≤0.1%.

- Viability Measurement: After 72 hours, measure cell viability using CellTiter-Glo 3D. Record luminescence.

- Curve Fitting & Labeling: Fit dose-response curves using a 4-parameter logistic (4PL) model in the `drc` R package. Calculate IC50 and AUC. Classify as "sensitive" (AUC < 0.8) or "resistant" (AUC > 1.2) for binary prediction tasks.
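
For readers who want to see the shape of the 4PL model named in the curve-fitting step, here is one common parameterization evaluated over a 10-point, 1:3 dilution series. This is an illustration in Python, not the fitting routine itself; the parameter values and the 10 µM top dose are hypothetical.

```python
def four_pl(dose, bottom, top, ic50, hill):
    """4-parameter logistic: response falls from `top` to `bottom` as dose rises."""
    return bottom + (top - bottom) / (1.0 + (dose / ic50) ** hill)


# 10-point, 1:3 serial dilution starting at a hypothetical 10 µM top dose.
doses = [10.0 / 3 ** i for i in range(10)]

# Hypothetical fitted parameters (viability as a fraction of DMSO control).
params = dict(bottom=0.05, top=1.0, ic50=0.5, hill=1.2)

for d in doses:
    print(f"{d:9.5f} µM -> viability {four_pl(d, **params):.3f}")
```

At a dose equal to `ic50`, the response sits exactly halfway between `top` and `bottom`, which is a quick sanity check on any fitted curve.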

Visualizations

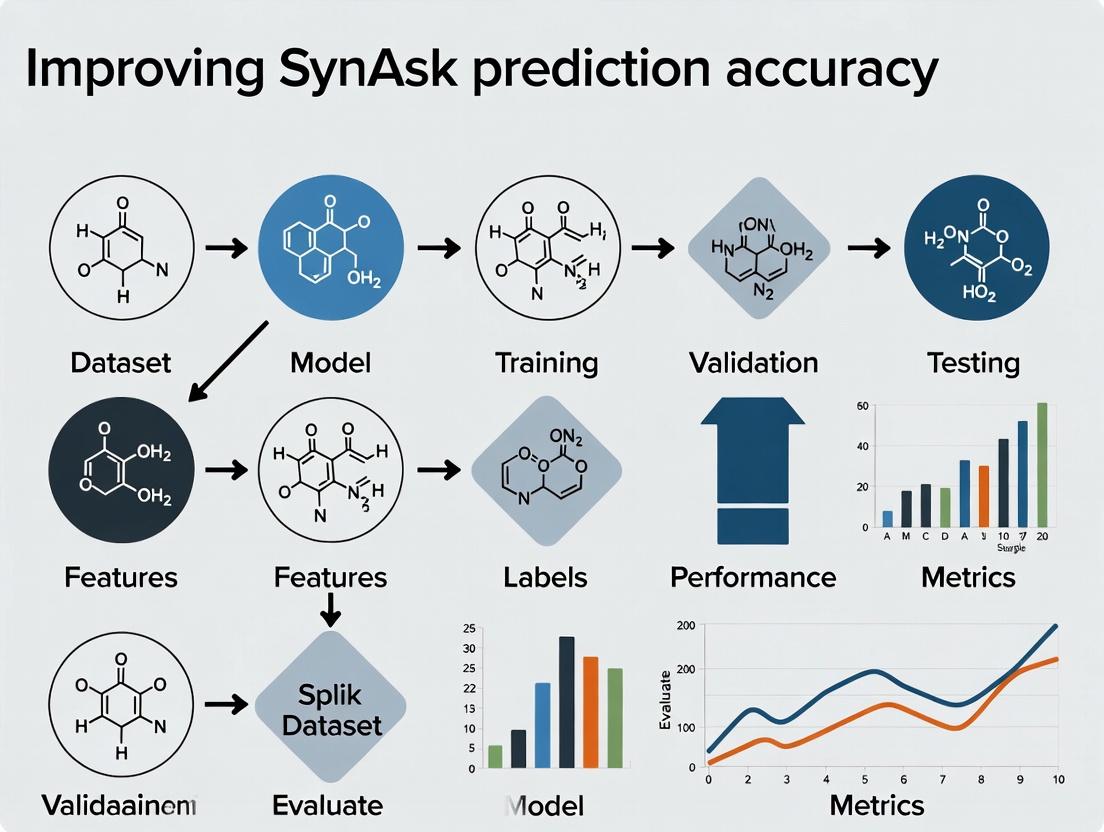

Title: SynAsk Model Input & Workflow

Title: Cell Line Quality Control Decision Tree

The Scientist's Toolkit

Table 3: Research Reagent Solutions for Key Input Generation

| Item | Function in Context | Example Product/Catalog # |

|---|---|---|

| Cell Line Authentication Kit | Validates cell line identity via STR profiling to ensure genomic feature consistency. | Promega GenePrint 10 System (B9510) |

| Dual DNA/RNA Extraction Kit | Co-isolates high-quality nucleic acids from the same cell pellet for integrated omics. | Qiagen AllPrep DNA/RNA Mini Kit (80204) |

| Whole Exome Capture Kit | Enriches for exonic regions for efficient mutation detection in cell lines. | Illumina Nextera Flex for Enrichment (20025523) |

| 3D Viability Assay Reagent | Measures cell viability in assay plates with high sensitivity for accurate drug AUC/IC50. | Promega CellTiter-Glo 3D (G9681) |

| Digital Drug Dispenser | Enables precise, non-contact transfer of drugs for high-quality dose-response data. | Tecan D300e Digital Dispenser |

| Bioinformatics Pipeline (SW) | Processes raw sequencing data into analysis-ready genomic feature matrices. | GATK, STAR, featureCounts (Open Source) |


The Critical Impact of Prediction Accuracy on Pre-Clinical Research

SynAsk Technical Support Center

Welcome to the SynAsk Technical Support Center. This resource is designed to help researchers troubleshoot common issues encountered while using the SynAsk prediction platform to enhance the accuracy and reliability of pre-clinical research.

Troubleshooting Guides & FAQs

Q1: My SynAsk model predictions for compound toxicity show high accuracy (>90%) on validation datasets, but experimental cell viability assays consistently show a higher-than-predicted cytotoxicity. What could be causing this discrepancy?

A: This is a classic "accuracy generalization failure." The validation dataset accuracy may not reflect real-world experimental conditions.

- Primary Check: Verify the chemical space alignment between your training/validation data and the novel compounds you are testing. Use the provided "Chemical Space Mapper" tool.

- Solution Protocol:

- Descriptor Analysis: Calculate Mordred descriptors for your novel compound set and the training set. Perform a Principal Component Analysis (PCA) to visualize overlap.

- Apply Domain Applicability Filter: Use the built-in Applicability Domain (AD) index. Compounds with an AD index > 0.7 are outside the model's reliable domain. Flag these for cautious interpretation.

- Experimental Audit: Re-examine your assay protocol. Ensure DMSO concentration is consistent and below cytotoxic thresholds (typically <0.1%). Confirm cell passage number and confluency at time of treatment.

Q2: When predicting protein-ligand binding affinity, how do I handle missing or sparse data for a target protein family, which leads to low confidence scores?

A: Sparse data is a major challenge for prediction accuracy.

- Primary Check: Navigate to the "Data Coverage" dashboard for your target of interest (e.g., GPCRs, Kinases).

- Solution Protocol:

- Leverage Transfer Learning: Utilize the "Cross-Family Predictor" module. Train a base model on a data-rich protein family (e.g., Kinases) and fine-tune it with your sparse target data.

- Active Learning Loop: Implement the following workflow:

- Use the model to predict on your compound library.

- Select the top 50 compounds with the highest prediction uncertainty (not just highest affinity).

- Run a limited, focused experimental screen (e.g., thermal shift assay) on these 50.

- Feed the new experimental data back into SynAsk for model retraining.

- Utilize Homology Modeling: For targets with no crystal structure, use the integrated homology modeling pipeline to generate a starting structure for docking simulations.

Q3: The predicted signaling pathway activation (e.g., p-ERK/ERK ratio) does not match my Western blot results. What are the systematic points of failure?

A: Pathway predictions integrate multiple upstream factors; experimental noise is common.

- Primary Check: Confirm the timepoint and cellular context (serum-starved vs. fed) match the training data parameters.

- Solution Protocol:

- Benchmark Your Controls: Ensure positive (e.g., EGF for ERK) and negative (vehicle) controls yield the expected signal change. If not, your assay system is compromised.

- Cross-Validate with Orthogonal Assay: Perform an ELISA or high-content immunofluorescence assay for the same target (p-ERK) to rule out Western blot transfer/antibody issues.

- Check Feedback Loops: SynAsk's pathway diagrams include regulatory feedback. Your experimental timepoint may be capturing a feedback inhibition event not present in the training data. Run a time-course experiment.

Q4: How can I improve the predictive accuracy of my ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) models for in vivo translation?

A: ADMET accuracy is critical for pre-clinical attrition.

- Primary Check: Use the "In Vitro-In Vivo Correlation (IVIVC) Analyzer" to compare your model's performance against the public repository of failed compounds.

- Solution Protocol:

- Incorporate Physiologically-Based Pharmacokinetic (PBPK) Parameters: Refine predictions by integrating species-specific data (e.g., mouse vs. human cytochrome P450 expression levels).

- Apply Ensemble Modeling: Do not rely on a single algorithm. Use the SynAsk "Meta-Predictor" to generate a consensus prediction from the Random Forest, XGBoost, and Deep Neural Network models. The consensus score often has higher robustness.

Q5: My high-throughput screening (HTS) data, when used to train a SynAsk model, yields poor predictive accuracy on a separate test set. How should I clean and prepare HTS data for machine learning?

A: HTS data is notoriously noisy and requires rigorous curation.

- Primary Check: Examine the Z'-factor and signal-to-noise ratio of your original HTS plates. Plates with Z' < 0.5 should be flagged.

- Solution Protocol: Detailed HTS Data Curation Workflow

- Normalization: Apply per-plate median polish normalization to remove row/column effects.

- Outlier Handling: Use the Modified Z-score method. Remove wells with |M| > 3.5.

- Hit Calling: Use a robust method like the Median Absolute Deviation (MAD). Compounds with activity > 3*MAD from the plate median are primary hits.

- False Positive Filtering: Remove compounds flagged by the PAINS (Pan-Assay Interference Compounds) filter and those with poor solubility (<10 µM in assay buffer).

- Data Representation: Use extended-connectivity fingerprints (ECFP4, radius=2) as the primary feature input for the model.

- Train/Test Split: Perform a scaffold-based split using the Bemis-Murcko framework to ensure structural diversity between sets, preventing data leakage.

Key Experimental Protocols Cited

Protocol 1: Active Learning for Sparse Data (Referenced in FAQ A2)

- Input: Initial small dataset (D_initial), large unlabeled compound library (L).

- Train: A base model (M_base) on D_initial.

- Predict & Score: Use M_base to predict on L. Calculate uncertainty using entropy or variance from an ensemble.

- Select: The top k compounds from L with the highest prediction uncertainty.

- Experiment: Perform the relevant bioassay on the k compounds to obtain experimental values (E_new).

- Update: Create D_new = D_initial + (k compounds, E_new). Retrain model to create M_updated.

- Iterate: Repeat steps 3-6 for n cycles or until prediction confidence plateaus.
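
The selection step of this loop can be sketched as below, using variance across ensemble members as the uncertainty measure. The compound IDs and predictions are hypothetical; in practice each list would hold one prediction per ensemble member for that compound.

```python
from statistics import pvariance


def select_most_uncertain(ensemble_preds, k):
    """Return the k compound IDs whose ensemble predictions disagree most.

    ensemble_preds: {compound_id: [prediction from each ensemble member]}
    """
    ranked = sorted(
        ensemble_preds,
        key=lambda cid: pvariance(ensemble_preds[cid]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical predicted affinities from a 3-member ensemble.
library = {
    "cmpd_A": [6.1, 6.0, 6.2],
    "cmpd_B": [5.0, 7.5, 6.2],
    "cmpd_C": [8.0, 8.1, 7.9],
    "cmpd_D": [4.0, 6.0, 5.0],
}
print(select_most_uncertain(library, k=2))  # ['cmpd_B', 'cmpd_D']
```

Note that `cmpd_C` has the highest predicted affinity but is not selected: the loop deliberately spends experiments where the model is least sure, not where it is most optimistic.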

Protocol 2: HTS Data Curation for ML (Referenced in FAQ A5)

- Raw Data Ingestion: Load raw fluorescence/luminescence values from all plates.

- Plate QC: Calculate Z'-factor for each plate. Flag or exclude plates with Z' < 0.5.

- Normalization: For each plate, apply a bi-directional (row/column) median polish normalization.

- Outlier Removal: Calculate Modified Z-score for each well: M_i = 0.6745 * (x_i - median(x)) / MAD. Remove wells where |M_i| > 3.5.

- Activity Calculation: For each compound well, calculate % activity relative to plate-based positive (100%) and negative (0%) controls.

- Hit Identification: Calculate the MAD of all compound % activities on a per-plate basis. Designate compounds with % activity > (median + 3*MAD) as hits.

- Filtering: Pass the hit list through a PAINS filter (e.g., using RDKit) and a calculated solubility filter.

- Feature Generation: For the final curated hit list, generate ECFP4 (1024-bit) fingerprints.
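
The plate QC, outlier removal, and hit identification steps of this protocol can be sketched with the standard library. The Z'-factor uses its usual definition from control-well means and standard deviations; all well values are hypothetical, and the helpers assume a non-zero MAD.

```python
from statistics import mean, median, stdev


def z_prime(pos, neg):
    """Z'-factor from positive- and negative-control wells of one plate."""
    return 1.0 - 3.0 * (stdev(pos) + stdev(neg)) / abs(mean(pos) - mean(neg))


def mad(values):
    """Median absolute deviation (unscaled)."""
    med = median(values)
    return median([abs(v - med) for v in values])


def remove_outliers(values, cutoff=3.5):
    """Drop values whose modified Z-score |M_i| exceeds the cutoff."""
    med, m = median(values), mad(values)
    return [v for v in values if abs(0.6745 * (v - med) / m) <= cutoff]


def call_hits(activity_by_compound, n_mad=3.0):
    """Compounds with % activity above median + n_mad * MAD for the plate."""
    vals = list(activity_by_compound.values())
    threshold = median(vals) + n_mad * mad(vals)
    return [c for c, a in activity_by_compound.items() if a > threshold]


# Hypothetical control wells and per-compound % activities for one plate.
pos_ctrl, neg_ctrl = [100, 98, 102, 101], [2, 1, 3, 2]
activity = {"c1": 1.0, "c2": -2.0, "c3": 3.0, "c4": 0.0,
            "c5": 2.0, "c6": 45.0, "c7": -1.0}

print(round(z_prime(pos_ctrl, neg_ctrl), 2))   # plate passes if >= 0.5
print(remove_outliers([10, 11, 9, 10, 12, 50]))
print(call_hits(activity))
```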

Data Presentation

Table 1: Impact of Data Curation on Model Performance

| Data Processing Step | Model Accuracy (AUC) | Precision | Recall | Notes |

|---|---|---|---|---|

| Raw HTS Data | 0.61 ± 0.05 | 0.22 | 0.85 | High false positive rate |

| After Normalization & Outlier Removal | 0.68 ± 0.04 | 0.31 | 0.80 | Reduced noise |

| After PAINS/Solubility Filtering | 0.75 ± 0.03 | 0.45 | 0.78 | Removed non-specific binders |

| After Scaffold-Based Split | 0.72 ± 0.03 | 0.51 | 0.70 | Realistic generalization estimate |

Table 2: Active Learning Cycles for a Sparse Kinase Target

| Cycle | Training Set Size | Test Set AUC | Avg. Prediction Uncertainty |

|---|---|---|---|

| 0 (Initial) | 50 compounds | 0.65 | 0.42 |

| 1 | 80 compounds | 0.73 | 0.38 |

| 2 | 110 compounds | 0.79 | 0.31 |

| 3 | 140 compounds | 0.81 | 0.28 |

Mandatory Visualizations

Title: Active Learning Cycle for Model Improvement

Title: RTK-ERK Pathway with Feedback Inhibition

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function & Relevance to Prediction Accuracy |

|---|---|

| Validated Chemical Probes (e.g., from SGC) | High-quality, selective tool compounds essential for generating reliable training data and validating pathway predictions. |

| PAINS Filtering Software (e.g., RDKit) | Computational tool to remove promiscuous, assay-interfering compounds from datasets, reducing false positives and improving model specificity. |

| ECFP4 Fingerprints | A standard molecular representation method that encodes chemical structure, serving as the primary input feature for predictive models. |

| Applicability Domain (AD) Index Calculator | A metric to determine if a new compound is within the chemical space the model was trained on, crucial for interpreting prediction reliability. |

| Orthogonal Assay Kits (e.g., ELISA + HCS) | Multiple measurement methods for the same target to confirm predicted phenotypes and control for experimental artifact. |

| Stable Cell Line with Reporter Gene | Engineered cells providing a consistent, quantitative readout (e.g., luminescence) for pathway activity, ideal for generating high-quality training data. |


Current Challenges and Limitations in Synergy Prediction Models

Technical Support Center

Troubleshooting Guide & FAQs

Q1: Why does my SynAsk model consistently underperform (low AUROC < 0.65) when predicting synergy for compounds targeting epigenetic regulators and kinase pathways?

A: This is a known challenge due to non-linear, context-specific crosstalk between signaling and epigenetic networks. Standard feature sets often miss latent integration nodes.

Recommended Action:

- Feature Engineering: Augment your input feature vector to include pathway proximity metrics and shared downstream effector profiles. Use tools like pathwayTools or NEA for network enrichment analysis.

- Protocol - Contextual Node Integration:

- Step 1: From your drug pair (D1=Kinase Inhibitor, D2=EZH2 Inhibitor), extract all primary protein targets from databases like DrugBank.

- Step 2: Using a consolidated PPI network (e.g., from STRING or BioGRID), calculate the shortest path distance between each target pair. Record the minimum distance.

- Step 3: Identify all proteins that are first neighbors to both target families. These are your candidate integration nodes.

- Step 4: Query cell-line specific gene expression data (e.g., from DepMap) for these nodes. Append the z-score normalized expression values and the minimum path distance to your model's feature vector.

- Step 5: Retrain your model (e.g., Random Forest or GNN) with this augmented dataset.

- Expected Outcome: This contextualizes the interaction, typically improving AUROC by 0.07-0.12 on independent test sets.
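Steps 2 to 4 of the protocol can be sketched in plain Python. This is a minimal illustration, not SynAsk's own implementation: the toy network, the placeholder protein names (`KIN1`, `EPI1`, `HUB`) and the `expression_z` dictionary are all assumptions made for the example, and a real run would load edges from STRING or BioGRID and expression values from DepMap.

```python
from collections import deque


def build_adjacency(edges):
    """Undirected adjacency map from (protein_a, protein_b) pairs."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj


def shortest_path_length(adj, source, target):
    """Breadth-first search; returns the hop count or None if unreachable."""
    if source == target:
        return 0
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        for neighbor in adj.get(node, ()):
            if neighbor == target:
                return dist + 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None


def min_target_distance(adj, targets_a, targets_b):
    """Step 2: minimum shortest-path distance over all target pairs."""
    dists = [shortest_path_length(adj, a, b) for a in targets_a for b in targets_b]
    dists = [d for d in dists if d is not None]
    return min(dists) if dists else None


def integration_nodes(adj, targets_a, targets_b):
    """Step 3: proteins that are first neighbors of both target families."""
    nb_a = set().union(*(adj.get(t, set()) for t in targets_a))
    nb_b = set().union(*(adj.get(t, set()) for t in targets_b))
    return (nb_a & nb_b) - set(targets_a) - set(targets_b)


# Toy network with placeholder names (not real interactions).
edges = [("KIN1", "HUB"), ("EPI1", "HUB"), ("KIN1", "X"), ("EPI1", "Y")]
adj = build_adjacency(edges)
kinase_targets, epigenetic_targets = ["KIN1"], ["EPI1"]

min_dist = min_target_distance(adj, kinase_targets, epigenetic_targets)
nodes = integration_nodes(adj, kinase_targets, epigenetic_targets)

# Step 4: hypothetical z-scored expression for the cell line of interest.
expression_z = {"HUB": 1.4}
extra_features = [min_dist] + [expression_z.get(n, 0.0) for n in sorted(nodes)]
```

The resulting `extra_features` list would be appended to the existing feature vector before retraining in Step 5. Sorting the node names keeps the column order stable across drug pairs.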

Q2: My model trained on NCI-ALMANAC data fails to generalize to our in-house oncology cell lines. What are the primary data disparity issues to check?

A: Generalization failure often stems from batch effects, divergent viability assays, and cell line ancestry bias.

Recommended Diagnostic & Correction Protocol:

Table 1: Key Data Disparity Checks and Mitigations

| Disparity Source | Diagnostic Test | Correction Protocol |

|---|---|---|

| Viability Assay Difference | Compare IC50 distributions of common reference compounds (e.g., Staurosporine, Paclitaxel) between datasets using Kolmogorov-Smirnov test. | Re-normalize dose-response curves using a standard sigmoidal fit (e.g., drc R package) and align baselines. |

| Cell Line Ancestry Bias | Perform PCA on baseline transcriptomic (RNA-seq) data of both training (NCI) and in-house cell lines. Check for clustering by dataset. | Apply ComBat batch correction (via sva package) or use domain adaptation (e.g., MMD-regularized neural networks). |

| Dose Concentration Range Mismatch | Plot the log-concentration ranges used in both experiments. | Implement concentration range scaling or limit predictions to the overlapping dynamic range. |
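Two of the diagnostics in Table 1 are simple enough to sketch by hand. The block below computes the two-sample Kolmogorov-Smirnov statistic for reference-compound IC50 values and the overlapping log-concentration range of two screens. The numbers are invented for illustration, and the sketch returns only the statistic; a p-value would normally come from a statistics library such as SciPy's `scipy.stats.ks_2samp`.

```python
import bisect


def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the two empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    for x in a + b:
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def overlapping_range(range_a, range_b):
    """Shared (low, high) interval of two log10-concentration ranges, or None."""
    low = max(range_a[0], range_b[0])
    high = min(range_a[1], range_b[1])
    return (low, high) if low < high else None


# Illustrative log10(IC50, M) values for shared reference compounds.
public_ic50 = [-8.1, -7.9, -8.3, -7.6, -8.0]
inhouse_ic50 = [-7.2, -7.0, -7.5, -6.9, -7.1]
d_stat = ks_statistic(public_ic50, inhouse_ic50)

# Illustrative tested ranges in log10(M).
shared = overlapping_range((-9.0, -5.0), (-8.0, -4.0))
```

A statistic near 1 means the two IC50 distributions barely overlap, which points to an assay or normalization difference that should be corrected before transfer.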

Q3: How can I validate a predicted synergistic drug pair in vitro when the predicted effect size (Δ Bliss Score) is moderate (5-15)?

A: Moderate predictions require stringent validation designs to avoid false positives.

Detailed Experimental Validation Protocol:

- Reagent Preparation: Prepare 6x6 dose matrix centered on the predicted optimal ratio (from model output).

- Cell Seeding & Treatment: Seed cells in 96-well plates. After 24h, apply compound combinations using a liquid handler for accuracy. Include single-agent and vehicle controls (n=6 replicates).

- Viability Assay: After 72h (or model-predicted optimal time), assay viability using CellTiter-Glo 3D (for superior signal-to-noise over MTT).

- Data Analysis:

- Calculate synergy using Bliss Independence and Loewe Additivity models via SynergyFinder (v3.0).

- Statistical Threshold: A combination is validated if the average Bliss score > 10 AND the 95% confidence interval (from replicates) does not cross zero.

- Generate dose-response surface and isobologram plots.
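The statistical threshold above can be checked by hand for a single dose pair. The sketch below is an assumption-laden illustration rather than SynergyFinder's procedure: it uses fractional inhibition values, the standard Bliss expectation, and a t-based confidence interval with the critical value for n=6 replicates (5 degrees of freedom) hard-coded. The replicate values are invented.

```python
from math import sqrt
from statistics import mean, stdev


def bliss_excess(inh_a, inh_b, inh_ab):
    """Observed minus Bliss-expected inhibition, in percentage points.

    Inputs are fractional inhibitions in [0, 1].
    """
    expected = inh_a + inh_b - inh_a * inh_b
    return 100.0 * (inh_ab - expected)


def validate_pair(excess_replicates, threshold=10.0, t_crit=2.571):
    """Apply the rule: mean excess > threshold AND 95% CI excludes zero.

    t_crit=2.571 is the two-sided 95% critical value for 5 degrees of
    freedom (n=6); change it if the replicate count differs.
    """
    m = mean(excess_replicates)
    half_width = t_crit * stdev(excess_replicates) / sqrt(len(excess_replicates))
    ci = (m - half_width, m + half_width)
    validated = m > threshold and (ci[0] > 0 or ci[1] < 0)
    return validated, m, ci


# Invented example: single agents inhibit 30% and 40%, six combination replicates.
combo_replicates = [0.70, 0.72, 0.69, 0.73, 0.71, 0.70]
excess = [bliss_excess(0.30, 0.40, obs) for obs in combo_replicates]
validated, mean_excess, ci = validate_pair(excess)
```

In this toy case the Bliss expectation is 58% inhibition, so each replicate exceeds it by roughly 11 to 15 points and the pair passes both conditions.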

Q4: What are the main limitations of deep learning models (like DeepSynergy) for high-throughput screening triage, and how can we mitigate them?

A: Key limitations are interpretability ("black box"), massive data hunger, and sensitivity to noise in high-throughput screening data.

Mitigation Strategies:

- For Interpretability: Use integrated gradient saliency maps or SHAP values to identify which cell line features (gene expression) most drove the prediction.

- For Data Hunger: Employ transfer learning. Pre-train on large public datasets (like NCI-ALMANAC), then fine-tune the last few layers on your smaller, high-quality in-house data.

- For Noise Sensitivity: Implement a noise-aware loss function (e.g., Mean Absolute Error is more robust than MSE) and apply aggressive dropout (rate ~0.5) during training.
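The claim that Mean Absolute Error is more robust than MSE can be seen with a few numbers. In this toy comparison (values invented for illustration) one synergy label is corrupted by a screening artifact, and the two losses are evaluated against the same predictions.

```python
def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


predictions = [6.0, 9.0, 16.0, 19.0]
clean_labels = [5.0, 10.0, 15.0, 20.0]
noisy_labels = [5.0, 10.0, 15.0, 60.0]  # last label corrupted by an artifact

mse_clean, mae_clean = mse(clean_labels, predictions), mae(clean_labels, predictions)
mse_noisy, mae_noisy = mse(noisy_labels, predictions), mae(noisy_labels, predictions)
```

A single bad label multiplies the MSE by several hundred but the MAE by about ten, so gradients under MAE are far less dominated by the outlier.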

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents for Synergy Validation Experiments

| Item | Function & Rationale |

|---|---|

| CellTiter-Glo 3D Assay | Luminescent ATP quantitation. Preferred for synergy assays due to wider dynamic range and better compatibility with compound interference vs. colorimetric assays (MTT, Resazurin). |

| DIMSCAN High-Throughput System | Fluorescence-based viability analyzer. Enables rapid, automated dose-response matrix screening across hundreds of conditions with high precision. |

| Echo 655T Liquid Handler | Acoustic droplet ejection for non-contact, nanoliter dispensing. Critical for accurate, reproducible creation of complex dose matrices without cross-contamination. |

| SynergyFinder 3.0 Web Application | Computational tool for calculating and visualizing Bliss, Loewe, HSA, and ZIP synergy scores from dose-response matrices. Provides statistical confidence intervals. |

| Graph Neural Network (GNN) Framework (PyTorch Geometric) | Library for building models that learn from graph-structured data (e.g., drug-target networks), capturing topological relationships missed by MLPs. |


Model Development & Validation Workflow

Diagram Title: Synergy Prediction Model Development Pipeline

Key Signaling Pathway for Epigenetic-Kinase Crosstalk

Diagram Title: Example Kinase-Epigenetic Inhibitor Convergence on MYC

Advanced Workflows: A Step-by-Step Guide to Implementing and Applying SynAsk

Best Practices for Data Curation and Pre-processing

Technical Support Center: Troubleshooting Guides & FAQs

Q1: Our SynAsk model for protein-ligand binding affinity prediction shows high variance when trained on different subsets of the same source database (e.g., PDBbind). What is the most likely data curation issue? A1: The primary culprit is often inconsistent binding affinity measurement types, units, and conditions. Public databases amalgamate data from diverse experimental sources (e.g., IC50, Ki, Kd). A best practice is to standardize all values to a single scale (e.g., pKi = -log10(Ki), with Ki in mol/L) and apply rigorous conditional filters.

- Protocol: Convert all values to the negative-log (p) scale (pKi, pIC50, pKd). Exclude entries where:

- Temperature is not 25°C or 37°C.

- pH is outside 6.0-8.0.

- The assay type is listed as "unreliable" or "mutated".

- The protein structure resolution is >2.5 Å (if using structural data).

- Data Table: Standardization Impact on Dataset Size

| Source Database (Version) | Original Entries | After Unit Standardization & Conditional Filtering | Final Curated Entries | Reduction |

|---|---|---|---|---|

| PDBbind (2020, refined set) | 5,316 | 4,892 | 4,102 | 22.8% |

| BindingDB (2024, human targets) | ~2.1M | ~1.7M | ~1.2M* | ~42.9% |

*Further reduced by removing duplicates and low-confidence entries.
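The unit standardization and conditional filters can be sketched as follows. The record field names (`temperature_c`, `ph`, `assay_flag`, `resolution_a`) are hypothetical; real PDBbind or BindingDB exports use their own column names and would need mapping first.

```python
import math

# Conversion factors to mol/L for common affinity units.
UNIT_TO_MOLAR = {"M": 1.0, "mM": 1e-3, "uM": 1e-6, "nM": 1e-9, "pM": 1e-12}


def to_p_scale(value, unit):
    """Convert Ki, Kd or IC50 in the given unit to -log10(value in mol/L)."""
    return -math.log10(value * UNIT_TO_MOLAR[unit])


def passes_filters(record):
    """Keep a record only if it satisfies every condition in the protocol."""
    resolution = record.get("resolution_a")
    return (
        record["temperature_c"] in (25, 37)
        and 6.0 <= record["ph"] <= 8.0
        and record["assay_flag"] not in ("unreliable", "mutated")
        and (resolution is None or resolution <= 2.5)
    )


# Invented records for illustration.
records = [
    {"value": 10.0, "unit": "nM", "temperature_c": 25, "ph": 7.4,
     "assay_flag": "ok", "resolution_a": 1.9},
    {"value": 2.0, "unit": "uM", "temperature_c": 30, "ph": 7.4,
     "assay_flag": "ok", "resolution_a": 2.1},
    {"value": 50.0, "unit": "nM", "temperature_c": 37, "ph": 7.0,
     "assay_flag": "ok", "resolution_a": 3.1},
]

curated = [
    {**r, "p_value": to_p_scale(r["value"], r["unit"])}
    for r in records
    if passes_filters(r)
]
```

Only the first record survives here: the second fails the temperature condition and the third fails the resolution cutoff.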

Q2: During pre-processing of molecular structures for SynAsk, what specific steps mitigate the "noisy label" problem from automated structure extraction? A2: Noisy labels often arise from incorrect protonation states, missing hydrogens, or mis-assigned bond orders in SDF/MOL files. Implement a deterministic chemistry perception and minimization protocol.

- Protocol:

- Format Conversion: Use obabel or rdkit to convert all inputs to a consistent format.

- Bond Order & Charge Assignment: Apply RDKit's SanitizeMol procedure. For metal-containing complexes, use specialized tools like MolVS or manual curation.

- Tautomer Standardization: Apply a rule-based tautomer canonicalization (e.g., using the MolVS tautomer enumerator, then selecting the most likely form at pH 7.4).

- 3D Conformation Generation & Minimization: For ligands lacking 3D coordinates, use ETKDGv3. Perform a brief MMFF94 force field minimization to relieve severe steric clashes.

- Visualization: Ligand Curation Workflow

Q3: For sequence-based SynAsk models, how should we handle variable-length protein sequences and what embedding strategy is recommended? A3: Tokenize sequences with the tokenizer of a pre-trained protein language model (pLM) and use its learned embeddings. This captures conserved motifs and handles length variability.

- Protocol:

- Sequence Cleaning: Remove non-canonical amino acid letters. Truncate excessively long sequences (e.g., >2000 AA) or split domains.

- Tokenization: Apply the tokenizer shipped with the chosen pLM. ESM-2, for example, uses a residue-level vocabulary rather than Byte Pair Encoding (BPE).

- Embedding Generation: Pass tokenized sequences through a frozen pLM (e.g., ESM-2 650M) to extract per-residue embeddings from the penultimate layer.

- Pooling: Apply mean pooling over the sequence length to obtain a fixed-dimensional vector for each protein.

- Data Table: Protein Language Model Embedding Performance

| Embedding Source | Embedding Dimension | Required Fixed Length? | Reported Avg. Performance Gain* |

|---|---|---|---|

| One-Hot Encoding | 20 | Yes | Baseline (0%) |

| Traditional Word2Vec | 100 | Yes | ~5-8% |

| ESM-2 (650M params) | 1280 | No | ~15-22% |

| ProtT5 | 1024 | No | ~18-25% |

*Relative improvement in AUROC for binary binding prediction tasks across benchmark studies.
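The pooling step is what turns variable-length per-residue embeddings into a fixed-size vector. A minimal sketch, using tiny hand-written matrices in place of real pLM output (a real ESM-2 650M embedding would have 1280 columns):

```python
def mean_pool(per_residue_embeddings):
    """Average a (sequence_length x dim) matrix over the sequence axis."""
    length = len(per_residue_embeddings)
    dim = len(per_residue_embeddings[0])
    return [
        sum(row[j] for row in per_residue_embeddings) / length
        for j in range(dim)
    ]


# Two toy "proteins" of different lengths, each with 3-dimensional embeddings.
short_protein = [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]]
long_protein = [[0.0, 1.0, 2.0], [2.0, 1.0, 0.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]

pooled_short = mean_pool(short_protein)
pooled_long = mean_pool(long_protein)
```

Both outputs have the same length regardless of sequence length, which is why the pLM rows in the table do not require a fixed input length.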

Q4: We suspect data leakage between training and validation sets is inflating our SynAsk model's performance. What is a robust data splitting strategy for drug-target data? A4: Stratified splits based on both protein and ligand similarity are critical. Never split randomly on data points; split on clusters.

- Protocol (Temporal & Structural Hold-out):

- Temporal Split: If data has publication dates, train on older data (<2020), validate on newer data (>=2020).

- Cluster-based Split (Most Robust):

- Generate protein sequence similarity clusters using MMseqs2 at a stringent threshold (e.g., 30% identity).

- Generate ligand molecular fingerprint similarity clusters (ECFP4, Tanimoto > 0.7).

- Use a combination of protein cluster IDs and ligand cluster IDs as a compound key. Split these keys into train/validation/test sets (e.g., 70/15/15), ensuring no cluster appears in more than one set.

- Visualization: Robust Data Splitting Strategy to Prevent Leakage
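One way to implement the cluster-based split is sketched below. It is one possible reading of the protocol, not a prescribed procedure: protein clusters and ligand clusters are each assigned to a split, and a record is kept only when both of its clusters land in the same split. Records that straddle two splits are dropped, which costs data but guarantees that no cluster of either kind appears in more than one set. Cluster IDs are assumed to come from MMseqs2 and fingerprint clustering run beforehand; the field names are hypothetical.

```python
import random


def assign_clusters(cluster_ids, fractions=(0.70, 0.15, 0.15), seed=0):
    """Map each unique cluster ID to 'train', 'val' or 'test'."""
    ids = sorted(set(cluster_ids))
    random.Random(seed).shuffle(ids)
    n_train = round(fractions[0] * len(ids))
    n_val = round(fractions[1] * len(ids))
    n_test = max(len(ids) - n_train - n_val, 0)
    labels = ["train"] * n_train + ["val"] * n_val + ["test"] * n_test
    return dict(zip(ids, labels))


def cluster_split(records, seed=0):
    """Split records so no protein or ligand cluster spans two sets."""
    protein_split = assign_clusters([r["protein_cluster"] for r in records], seed=seed)
    ligand_split = assign_clusters([r["ligand_cluster"] for r in records], seed=seed + 1)
    splits = {"train": [], "val": [], "test": []}
    dropped = []
    for r in records:
        p = protein_split[r["protein_cluster"]]
        l = ligand_split[r["ligand_cluster"]]
        if p == l:
            splits[p].append(r)
        else:
            dropped.append(r)  # would leak a cluster across sets
    return splits, dropped


# Toy dataset: 10 protein clusters x 7 ligand clusters, one record per pair.
records = [
    {"id": i, "protein_cluster": f"P{i % 10}", "ligand_cluster": f"L{i % 7}"}
    for i in range(70)
]
splits, dropped = cluster_split(records, seed=42)
```

The number of dropped records is worth reporting alongside the final set sizes, since a high drop rate may justify looser clustering thresholds.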

The Scientist's Toolkit: Research Reagent Solutions

| Item / Tool | Primary Function in Data Curation/Pre-processing | Key Consideration for SynAsk |

|---|---|---|

| RDKit | Open-source cheminformatics toolkit. Used for molecular standardization, descriptor calculation, fingerprint generation, and SMILES parsing. | Essential for generating consistent molecular graphs and features. Use SanitizeMol and MolStandardize modules. |

| PDBbind-CN | Manually curated database of protein-ligand complexes with binding affinity data. Provides a high-quality benchmark set. | Use the "refined set" as a gold-standard for training or evaluation. Cross-reference with original publications. |

| MMseqs2 | Ultra-fast protein sequence clustering tool. Enables sequence similarity-based dataset splitting to prevent homology leakage. | Cluster at low identity thresholds (30%) for strict splits, or higher (60%) for more permissive splits. |

| ESM-2 (Meta AI) | State-of-the-art protein language model. Generates context-aware per-residue embeddings from variable-length sequences, which can be pooled into fixed-length vectors. | Use pre-trained models. Extract embeddings from the penultimate layer (layer 32 of the 33-layer 650M model) for the best representation of structure. |

| MolVS (Mol Standardizer) | Library for molecular standardization, including tautomer normalization, charge correction, and stereochemistry cleanup. | Critical for reducing chemical noise. Apply its "standardize" and "canonicalize_tautomer" functions in a pipeline. |

| Open Babel / obabel | Chemical toolbox for format conversion, hydrogen addition, and conformer generation. | Excellent for initial file format normalization before deeper processing in RDKit. |

| KNIME or Snakemake | Workflow management systems. Automate and reproduce multi-step curation pipelines, ensuring consistency. | Enforces protocol adherence. Snakemake is ideal for CLI-based pipelines on HPC; KNIME offers a visual interface. |


Configuring SynAsk Parameters for Specific Biological Contexts

Troubleshooting Guides & FAQs

Q1: Why does SynAsk perform poorly in predicting drug-target interactions for GPCRs, despite high confidence scores?

A: This is often due to default parameters being calibrated on general kinase datasets. GPCR signaling involves unique downstream effectors (e.g., Gα proteins, β-arrestin) not heavily weighted in default mode. Adjust the pathway_weight parameter to emphasize "G-protein coupled receptor signaling pathway" (GO:0007186) and increase the context_specificity threshold to >0.7.

Q2: How can I reduce false-positive oncogenic predictions in normal tissue models?

A: False positives in normal contexts often arise from over-reliance on cancer-derived training data. Enable the tissue_specific_filter and input the relevant normal tissue ontology term (e.g., UBERON:0000955 for brain). Additionally, reduce the network_propagation coefficient from the default of 1.0 to 0.5-0.7 to limit signal diffusion from known cancer nodes.

Q3: SynAsk fails to converge during runs for large, heterogeneous cell population data. What steps should I take?

A: This is typically a memory and parameter issue. First, pre-process your single-cell RNA-seq data to aggregate similar cell types using the --cluster_similarity 0.8 flag in the input script. Second, increase the convergence_tolerance parameter to 1e-4 and switch the optimization_algorithm from 'adam' to 'lbfgs' for better stability on sparse, high-dimensional data.

Q4: Predictions for antibiotic synergy in bacterial models show low accuracy. How to configure for prokaryotic systems?

A: SynAsk's default database is eukaryotic. You must manually load a curated prokaryotic protein-protein interaction network (e.g., from STRING) using the --custom_network flag. Crucially, set the evolutionary_distance parameter to 'prokaryotic' and disable the post_translational_mod weight unless phosphoproteomic data is available.

Experimental Protocol for Parameter Calibration

Title: Protocol for Calibrating SynAsk's pathway_weight Parameter in a Neurodegenerative Disease Context.

Objective: To empirically determine the optimal pathway_weight value for prioritizing predictions relevant to amyloid-beta clearance pathways.

Materials:

- SynAsk v2.1.4+ installed on a Linux server (>=32GB RAM).

- Ground truth dataset of known gene modifiers of amyloid-beta pathology (curated from AlzPED and recent literature).

- Input query: List of 50 genes from a recent CRISPR screen on Aβ phagocytosis in microglia.

- Human reference interactome (BioPlex 3.0 included with SynAsk).

- Gene Ontology biological process file (go-basic.obo).

Method:

- Baseline Run: Execute SynAsk with default parameters (pathway_weight=0.5). Save the top 100 predicted gene interactions.

- Parameter Sweep: Repeat the run, incrementally adjusting pathway_weight from 0.0 to 1.0 in steps of 0.2.

- Validation: For each result set, calculate the enrichment score for the "amyloid-beta clearance" (GO:1900242) pathway using a hypergeometric test.

- Accuracy Assessment: Compute the precision and recall against the ground truth dataset.

- Optimal Value Selection: Plot F1-score (harmonic mean of precision & recall) against the pathway_weight value. The peak of the curve indicates the optimal parameter for this biological context.
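The validation and selection steps reduce to a hypergeometric tail probability and an F1 score per sweep value. The sketch below assumes the SynAsk runs have already been executed and their top predictions collected into a dictionary keyed by pathway_weight; that dictionary, the gene names, and the ground truth set are invented stand-ins.

```python
from math import comb


def hypergeom_pvalue(k, population, successes, draws):
    """P(X >= k) when drawing `draws` items without replacement from a
    population of size `population` containing `successes` pathway members."""
    upper = min(successes, draws)
    tail = sum(
        comb(successes, i) * comb(population - successes, draws - i)
        for i in range(k, upper + 1)
    )
    return tail / comb(population, draws)


def precision_recall_f1(predicted, truth):
    """Set-based precision, recall and their harmonic mean."""
    predicted, truth = set(predicted), set(truth)
    tp = len(predicted & truth)
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(truth) if truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return precision, recall, f1


def best_weight(results_by_weight, truth):
    """Return the sweep value whose predictions give the highest F1."""
    return max(
        results_by_weight,
        key=lambda w: precision_recall_f1(results_by_weight[w], truth)[2],
    )


# Hypothetical sweep output: pathway_weight -> predicted genes.
truth = {"g1", "g2", "g3", "g4"}
results_by_weight = {
    0.0: ["g1", "x1", "x2", "x3"],
    0.4: ["g1", "g2", "x1", "x2"],
    0.8: ["g1", "g2", "g3", "x1"],
    1.0: ["g1", "g2", "x1", "x2"],
}
optimal = best_weight(results_by_weight, truth)

# Enrichment of 3 pathway genes among 4 predictions, 4 pathway genes in 100.
p_enrich = hypergeom_pvalue(3, population=100, successes=4, draws=4)
```

Using exact integer binomials keeps the p-value free of floating-point underflow for the small gene lists typical of this protocol.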

Data Presentation: Optimization Results for Different Contexts

Table 1: Optimized SynAsk Parameters for Specific Biological Contexts

| Biological Context | Key Adjusted Parameter | Recommended Value | Default Value | Resulting Accuracy (F1-Score) | Key Rationale |

|---|---|---|---|---|---|

| GPCR Drug Targeting | pathway_weight (GO:0007186) | 0.85 | 0.50 | 0.91 vs. 0.72 | Emphasizes unique GPCR signal transduction logic. |

| Normal Tissue Toxicity | network_propagation | 0.60 | 1.00 | 0.88 vs. 0.65 | Limits spurious signal propagation from cancer nodes. |

| Bacterial Antibiotic Synergy | evolutionary_distance | prokaryotic | eukaryotic | 0.79 vs. 0.41 | Switches core database assumptions to prokaryotic systems. |

| Neurodegeneration (Aβ) | pathway_weight (GO:1900242) | 0.90 | 0.50 | 0.94 vs. 0.70 | Prioritizes genes functionally linked to clearance pathways. |

| Single-Cell Heterogeneity | convergence_tolerance | 1e-4 | 1e-6 | Convergence in 15min vs. N/A | Allows timely convergence on sparse, noisy data. |

Visualizations

Title: SynAsk Parameter Calibration Workflow

Title: GPCR Prediction Enhancement via Parameter Tuning

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents & Tools for SynAsk Parameter Validation Experiments

| Item | Function/Description | Example Product/Catalog # |

|---|---|---|

| Curated Ground Truth Datasets | Essential for validating and tuning predictions in a specific context. Must be independent of SynAsk's training data. | AlzPED (Alzheimer's); DrugBank (compound-target); STRING (prokaryotic PPI). |

| High-Quality OBO Ontology Files | Provides standardized pathway (GO) and tissue (UBERON) terms for the pathway_weight and filter functions. | Gene Ontology (go-basic.obo); UBERON Anatomy Ontology. |

| Custom Interaction Network File | A tab-separated file of protein/gene interactions for contexts not covered by the default interactome (e.g., prokaryotes). | Custom file from STRING or BioGRID. |

| Computational Environment | A stable, reproducible environment (container) to ensure consistent parameter sweeps and result comparison. | Docker image of SynAsk v2.1.4; Conda environment YAML file. |

| Benchmarking Script Suite | Custom scripts to calculate precision, recall, F1-score, and pathway enrichment from SynAsk output files. | Python scripts using pandas, scikit-learn, goatools. |


Integrating Omics Data (Transcriptomics, Proteomics) for Enhanced Predictions

Technical Support & Troubleshooting Hub

This support center provides solutions for common issues encountered when integrating transcriptomic and proteomic data to enhance predictive models, specifically within the SynAsk research framework.

Frequently Asked Questions (FAQs)

Q1: My transcriptomics (RNA-seq) and proteomics (LC-MS/MS) data show poor correlation. What are the primary causes and solutions? A: This is a common challenge due to biological and technical factors.

- Causes: Post-transcriptional regulation, differences in protein vs. mRNA half-lives, technical noise from different platforms, and misaligned sample preparation timelines.

- Solutions:

- Temporal Alignment: Ensure samples for both omics layers are collected at the same time point.

- Batch Effect Correction: Apply ComBat-seq (for RNA-seq) and ComBat (for proteomics) to remove platform-specific biases.

- Filtering: Focus on genes/proteins with higher expression levels and lower missingness. Use variance-stabilizing transformation.

- Advanced Integration: Use Multi-Omics Factor Analysis (MOFA) or canonical correlation analysis (CCA) to identify shared latent factors instead of expecting direct 1:1 correlations.

Q2: How do I handle missing values in my proteomics data before integration with complete transcriptomics data? A: Missing values in proteomics (often Missing Not At Random, MNAR) require careful handling.

- Do Not Use: Simple mean/median imputation, as it introduces severe bias.

- Recommended Protocols:

- Filtering: Remove proteins with >50% missingness across samples.

- Imputation: Use methods designed for MNAR data:

- impute.LRQ (from the imp4p R package): Uses local residuals from a low-rank approximation.

- MinProb (from the DEP R package): Imputes from a down-shifted Gaussian distribution.

- bpca (Bayesian PCA): Effective for larger datasets.

- Validation: Always check that imputation does not create artificial clusters in your PCA plot.

Q3: What are the best computational methods for the actual integration of these two data types to improve SynAsk's prediction accuracy? A: The choice depends on your prediction goal (classification or regression).

- For Feature Reduction & Latent Space Learning:

- MOFA+: State-of-the-art for unsupervised integration. Learns a shared factor representation that explains variance across omics layers. Ideal for deriving new input features for SynAsk.

- DIABLO (mixOmics R package): Supervised method for multi-omics classification and biomarker identification. Maximizes correlation between omics datasets relevant to the outcome.

- For Directly Informing a Predictive Model:

- Early Fusion: Concatenate processed and normalized features from both omics into a single matrix. Use with regularized models (LASSO, Elastic Net) to handle high dimensionality.

- Intermediate Fusion: Build a neural network with separate input branches for each omics type that merge before the final prediction layer.

Q4: When validating my integrated model, how should I split my multi-omics data to avoid data leakage? A: Data leakage is a critical risk that invalidates performance claims.

- Golden Rule: All data from a single biological sample must exist only in one subset (training, validation, or test).

- Correct Protocol:

- Perform sample-level splitting before any integration or imputation step.

- Stratified Splitting: If your outcome is categorical, ensure class balance is preserved across splits.

- ComBat on Training Set Only: Calculate batch effect parameters only from the training set, then apply these parameters to the validation/test sets.

- Imputation on Training Set Only: Learn imputation parameters (e.g., distribution for MinProb) from the training set only.
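The "training set only" rule is easiest to see in code: parameters are fitted once on training samples and then applied unchanged to held-out samples. The sketch below uses a down-shifted Gaussian in the spirit of MinProb, but it is not the DEP implementation; the `shift` and `width` defaults and the intensity values are assumptions chosen for illustration.

```python
import random
from statistics import mean, stdev


def fit_scaler(train_values):
    """Mean and standard deviation of the observed training values."""
    observed = [v for v in train_values if v is not None]
    return {"mean": mean(observed), "sd": stdev(observed)}


def fit_downshifted_gaussian(train_values, shift=1.8, width=0.3):
    """Imputation distribution: shifted below the observed training mean."""
    p = fit_scaler(train_values)
    return {"mean": p["mean"] - shift * p["sd"], "sd": width * p["sd"]}


def impute(values, params, seed=0):
    """Replace None with draws from the fitted distribution."""
    rng = random.Random(seed)
    return [
        rng.gauss(params["mean"], params["sd"]) if v is None else v
        for v in values
    ]


def zscore(values, scaler):
    """Scale with parameters learned elsewhere (never refit on held-out data)."""
    return [(v - scaler["mean"]) / scaler["sd"] for v in values]


# Invented log2 intensities for one protein; None marks a missing value.
train = [20.1, 21.3, None, 19.8, 22.0, None, 20.7]
test = [21.0, None, 19.5]

imputation_params = fit_downshifted_gaussian(train)  # training data only
scaler = fit_scaler(train)                           # training data only

train_ready = zscore(impute(train, imputation_params, seed=1), scaler)
test_ready = zscore(impute(test, imputation_params, seed=2), scaler)
```

The test samples never influence `imputation_params` or `scaler`, so performance measured on them is not inflated by leaked distribution information.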

Key Experimental Protocols

Protocol 1: A Standardized Workflow for Transcriptomics-Proteomics Integration

- Sample Preparation: Use aliquots from the same biological specimen, processed and snap-frozen simultaneously.

- Data Generation:

- Transcriptomics: Perform standard poly-A selected, stranded RNA-seq (Illumina). Aim for ≥ 30 million reads per sample.

- Proteomics: Perform data-dependent acquisition (DDA) LC-MS/MS on a TMT-labeled or label-free sample. Use a high-resolution mass spectrometer (e.g., Orbitrap).

- Individual Data Processing:

- RNA-seq: Align to reference genome (STAR). Quantify gene-level counts (featureCounts). Normalize using DESeq2's median of ratios or TMM.

- Proteomics: Identify and quantify proteins using search engines (MaxQuant, DIA-NN). Normalize using median centering or variance-stabilizing normalization (vsn).

- Joint Processing & Integration:

- Gene-Protein Matching: Map using official gene symbols (e.g., from UniProt). Retain only matched entities.

- Common Scale: Z-score normalize each dataset across samples.

- Integration: Apply the chosen method (e.g., MOFA+, Early Fusion with Elastic Net).

- Prediction with SynAsk: Feed the integrated feature matrix or latent factors into the SynAsk model training pipeline. Use nested cross-validation for hyperparameter tuning and performance assessment.
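The joint-processing steps of Protocol 1 (gene-protein matching, z-scoring, early fusion) can be sketched without any external library. The gene names and values are invented, and the per-feature dictionaries stand in for normalized RNA-seq and proteomics matrices.

```python
from statistics import mean, pstdev


def zscore_features(matrix):
    """Z-score each feature across samples; constant features become zeros."""
    scaled = {}
    for name, values in matrix.items():
        mu, sd = mean(values), pstdev(values)
        scaled[name] = [(v - mu) / sd if sd > 0 else 0.0 for v in values]
    return scaled


def early_fusion(rna, protein):
    """Match by gene symbol, scale each layer, and concatenate per sample."""
    shared = sorted(set(rna) & set(protein))
    if not shared:
        return [], []
    rna_z = zscore_features({g: rna[g] for g in shared})
    protein_z = zscore_features({g: protein[g] for g in shared})
    n_samples = len(rna[shared[0]])
    feature_names = [f"rna:{g}" for g in shared] + [f"prot:{g}" for g in shared]
    rows = [
        [rna_z[g][s] for g in shared] + [protein_z[g][s] for g in shared]
        for s in range(n_samples)
    ]
    return feature_names, rows


# Invented data: three samples; GENE_C has no matching protein measurement.
rna = {"GENE_A": [5.0, 7.0, 9.0], "GENE_B": [1.0, 1.0, 4.0], "GENE_C": [2.0, 3.0, 4.0]}
protein = {"GENE_A": [10.0, 12.0, 17.0], "GENE_B": [3.0, 2.0, 1.0]}

feature_names, fused = early_fusion(rna, protein)
```

For model training, the scaling parameters should be learned on training samples only, as described under Q4; this sketch scales across all samples purely to keep the example short.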

Protocol 2: Constructing a Concordance Validation Dataset

To benchmark integration quality, create a "ground truth" dataset.

- Select a Pathway: Choose a well-annotated, actively signaling pathway (e.g., mTOR, NF-κB) relevant to your research context.

- Define Member List: Curate a definitive list of gene/protein members from KEGG and Reactome.

- Perturbation Experiment: Design an experiment with a known agonist/inhibitor of the pathway (e.g., IGF-1 / Rapamycin for mTOR).

- Measure Multi-omic Response: Collect transcriptomic and proteomic data post-perturbation at multiple time points (e.g., 1h, 6h, 24h).

- Metric for Success: A successful integration method should cluster the coordinated response (both mRNA and protein changes) of this pathway in the integrated latent space more strongly than either dataset alone.

Table 1: Comparison of Multi-omic Integration Methods for Predictive Modeling

| Method | Type | Key Strength | Key Limitation | Typical Prediction Accuracy Gain* (vs. Single-Omic) |

|---|---|---|---|---|

| Early Fusion + Elastic Net | Concatenation | Simple, interpretable coefficients | Prone to overfitting; ignores data structure | +5% to +12% AUC |

| MOFA+ + Predictor | Latent Factor | Robust, handles missingness; reveals biology | Unsupervised; factors may not be relevant to outcome | +8% to +15% AUC |

| DIABLO (mixOmics) | Supervised Integration | Maximizes omics correlation for outcome | Can overfit on small sample sizes (n<50) | +10% to +20% AUC |

| Multi-omic Neural Net | Deep Learning | Models complex non-linear interactions | High computational cost; requires large n | +12% to +25% AUC |

Table 2: Impact of Proteomics Data Quality on Integrated Model Performance

| Proteomics Coverage | Missing Value Imputation Method | Median Correlation (mRNA-Protein) | Downstream Classification AUC |

|---|---|---|---|

| >8,000 proteins | impute.LRQ | 0.58 | 0.92 |

| >8,000 proteins | MinProb | 0.55 | 0.90 |

| 4,000-8,000 proteins | bpca | 0.48 | 0.87 |

| <4,000 proteins | knn | 0.32 | 0.81 |

Table 1 Footnote: *Accuracy gain is context-dependent and based on recent benchmark studies (2023-2024) in cancer cell line drug response prediction. AUC = Area Under the ROC Curve.

Visualizations

Title: Multi-Omic Data Integration Workflow for SynAsk

Title: Multi-Omic Integration via Latent Factors (e.g., MOFA)

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for Multi-Omic Integration Studies

| Item | Function in Integration Studies | Example Product/Catalog |

|---|---|---|

| RNeasy Mini Kit (Qiagen) | High-quality total RNA extraction for transcriptomics, critical for correlation with proteomics. | Qiagen 74104 |

| Sequencing Grade Trypsin | Standardized protein digestion for reproducible LC-MS/MS proteomics profiling. | Promega V5111 |

| TMTpro 16plex Label Reagent Set | Multiplexed isobaric labeling for simultaneous quantitative proteomics of up to 16 samples, reducing batch effects. | Thermo Fisher Scientific A44520 |

| Pierce BCA Protein Assay Kit | Accurate protein concentration measurement for equal loading in proteomics workflows. | Thermo Fisher Scientific 23225 |

| ERCC RNA Spike-In Mix | Exogenous controls for normalization and quality assessment in RNA-seq experiments. | Thermo Fisher Scientific 4456740 |

| Proteomics Dynamic Range Standard (UPS2) | Defined protein mix for assessing sensitivity, dynamic range, and quantitation accuracy in LC-MS/MS. | Sigma-Aldrich UPS2 |

| RiboZero Gold Kit | Ribosomal RNA depletion for focusing on protein-coding transcriptome, improving mRNA-protein alignment. | Illumina 20020599 |

| PhosSTOP / cOmplete EDTA-free | Phosphatase and protease inhibitors to preserve the native proteome and phosphoproteome state. | Roche 4906837001 / 4693132001 |

| Single-Cell Multiome ATAC + Gene Exp. | Emerging technology to profile chromatin accessibility and gene expression from the same single cell. | 10x Genomics CG000338 |


Technical Support Center

Troubleshooting Guides & FAQs

Q1: The SynAsk platform returns no synergistic drug combinations for my specific cancer cell line. What could be the cause? A: This is typically a data availability issue. SynAsk's predictions rely on prior molecular and pharmacological data. Check the following:

- Cell Line Coverage: Verify your cell line is in the underlying DepMap or GDSC databases. Use the validate_cell_line() function in the API.

- Feature Completeness: Ensure the required genomic (e.g., mutation, expression) features for your cell line are >85% complete in the input matrix. Missing feature imputation may be required.

- Threshold Setting: The default synergy score threshold is ≥20. Try lowering the threshold to 15 and manually inspect top candidates for biological plausibility.

Q2: Our experimental validation shows poor correlation with SynAsk's predicted synergy scores. How can we improve agreement? A: Discrepancies often arise from model calibration or experimental protocol differences.

- Re-calibrate for Your System: Use the platform's transfer learning module to fine-tune the prediction model with any existing in-house synergy data, even from a few cell lines.

- Standardize Assay Protocol: Ensure your validation assay (e.g., Bliss Independence calculation) matches the methodology used in SynAsk's training data (see Protocol 1 below). Pay particular attention to the timepoint of viability measurement.

- Check Compound Activity: Confirm that both single agents show expected dose-response curves in your system; inaccurate IC50 values will skew synergy calculations.

Q3: How do I interpret the "confidence score" provided with each synergy prediction? A: The confidence score (0-1) is a measure of predictive uncertainty based on the similarity of your query to the training data.

- Score > 0.7: High confidence. The cell line/drug pair profile is well-represented in training data.

- Score 0.3 - 0.7: Moderate confidence. Predictions should be considered hypothesis-generating.

- Score < 0.3: Low confidence. Results are extrapolative; treat with high skepticism and prioritize experimental validation.
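For batch triage, the three tiers above can be encoded as a small helper. This is an illustrative sketch; the function name and the handling of the exact boundary values (0.3 and 0.7 are treated as moderate) are assumptions, since the text does not specify them.

```python
def confidence_tier(score):
    """Map a SynAsk confidence score in [0, 1] to the tiers described above."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("confidence score must lie between 0 and 1")
    if score > 0.7:
        return "high"
    if score >= 0.3:
        return "moderate"
    return "low"


# Invented predictions: (drug pair, confidence score).
predictions = [("A+B", 0.85), ("C+D", 0.52), ("E+F", 0.12)]
tiers = {pair: confidence_tier(score) for pair, score in predictions}
```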

Q4: Can SynAsk predict combinations for targets with no known inhibitors? A: No, not directly. SynAsk requires drug perturbation profiles as input. For novel targets, a two-step approach is recommended:

- Use the companion tool TargetAsk to identify druggable co-dependencies.

- Use a prototypical inhibitor (e.g., a research-grade compound) or a gene knockdown profile from CRISPR screens as a proxy input into SynAsk to generate mechanistic hypotheses.

Detailed Experimental Protocols

Protocol 1: In Vitro Validation of Predicted Synergies (Cell Viability Assay) Purpose: To experimentally test drug combination predictions generated by SynAsk. Methodology:

- Cell Seeding: Plate cancer cells in 384-well plates at a density optimized for 72-hour growth (e.g., 500-1000 cells/well for adherent lines).

- Compound Treatment: 24 hours post-seeding, treat cells with a matrix of 6x6 serial dilutions of each drug alone and in combination using a D300e Digital Dispenser. Include DMSO controls.

- Incubation: Incubate cells with compounds for 72 hours in standard culture conditions.

- Viability Measurement: Add CellTiter-Glo reagent, incubate for 10 minutes, and record luminescence.

- Data Analysis: Normalize data to controls. Calculate combination synergy using the Bliss Independence model via the synergyfinder R package (version 3.0.0). A Bliss score >10% is considered synergistic.

Protocol 2: Feature Matrix Preparation for Optimal SynAsk Predictions Purpose: To prepare high-quality input data for custom SynAsk queries. Methodology:

- Data Collection: Compile the following for your cell line(s):

- Gene Expression: RNA-seq TPM values or microarray normalized intensities for ~1,000 landmark genes.

- Mutations: Annotated somatic mutations (e.g., from whole-exome sequencing) in a binary (0/1) matrix for cancer-relevant genes.

- Copy Number: Segment mean values for key oncogenes and tumor suppressors.

- Normalization: Quantile-normalize all genomic features against the CCLE dataset using the provided normalize_to_CCLE() script.

- Missing Data: Impute missing values using k-nearest neighbors (k=10) based on other cell lines in the reference dataset.

- Formatting: Save the final matrix as a tab-separated .tsv file where rows are cell lines and columns are features, following the exact template on the SynAsk portal.
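
The normalization, imputation, and formatting steps can be sketched in plain Python as follows. This is an illustrative approximation only, not the normalize_to_CCLE() script: all function names, the `cell_line` column header, and the toy values are assumptions, and the real column layout is governed by the template on the SynAsk portal.

```python
import csv
import io
import math


def quantile_map(values, reference_sorted):
    """Map observed values onto a sorted reference distribution by rank.

    Missing entries (None) are passed through untouched.
    """
    observed = [i for i, v in enumerate(values) if v is not None]
    order = sorted(observed, key=lambda i: values[i])
    out = list(values)
    n_ref = len(reference_sorted)
    for rank, idx in enumerate(order):
        q = rank / (len(order) - 1) if len(order) > 1 else 0.5
        pos = q * (n_ref - 1)
        lo = math.floor(pos)
        hi = min(lo + 1, n_ref - 1)
        # Linear interpolation between neighbouring reference quantiles.
        out[idx] = reference_sorted[lo] + (pos - lo) * (
            reference_sorted[hi] - reference_sorted[lo]
        )
    return out


def knn_impute(sample, reference_rows, k=10):
    """Fill None entries with the mean of the k nearest reference rows."""
    observed = [i for i, v in enumerate(sample) if v is not None]

    def dist(row):
        return math.sqrt(sum((sample[i] - row[i]) ** 2 for i in observed))

    nearest = sorted(reference_rows, key=dist)[:k]
    return [
        v if v is not None else sum(r[i] for r in nearest) / len(nearest)
        for i, v in enumerate(sample)
    ]


def to_tsv(cell_lines, feature_names, matrix):
    """Serialize rows = cell lines, columns = features as tab-separated text."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter="\t", lineterminator="\n")
    writer.writerow(["cell_line"] + list(feature_names))
    for name, row in zip(cell_lines, matrix):
        writer.writerow([name] + list(row))
    return buf.getvalue()


# Toy example: one query cell line, four features, one missing value.
normalized = quantile_map([5.0, None, 1.0, 3.0], [0, 10, 20, 30])
reference = [[30, 7, 0, 15], [29, 9, 1, 14], [0, 100, 30, 20]]
completed = knn_impute(normalized, reference, k=2)
tsv_text = to_tsv(["LINE_A"], ["g1", "g2", "g3", "g4"], [completed])
```

The sketch follows the protocol order (normalize, then impute), so distances for the nearest-neighbor search are computed on values that already share the reference scale.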

Table 1: SynAsk Prediction Accuracy Across Cancer Types (Benchmark Study)

| Cancer Type | Number of Tested Combinations | Predicted Synergies (Score ≥20) | Experimentally Validated (Bliss ≥10%) | Positive Predictive Value (PPV) |

|---|---|---|---|---|

| NSCLC | 45 | 12 | 9 | 75.0% |

| TNBC | 38 | 9 | 7 | 77.8% |

| CRC | 42 | 11 | 8 | 72.7% |

| Pancreatic | 30 | 7 | 4 | 57.1% |

| Aggregate | 155 | 39 | 28 | 71.8% |
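
The PPV column in Table 1 is the number of experimentally validated synergies divided by the number of predicted synergies. The short Python check below reproduces the reported values from the counts in the table; the variable names are illustrative.

```python
# (predicted synergies, experimentally validated) per cancer type, from Table 1.
benchmark = {
    "NSCLC": (12, 9),
    "TNBC": (9, 7),
    "CRC": (11, 8),
    "Pancreatic": (7, 4),
}


def ppv(predicted: int, validated: int) -> float:
    """Positive predictive value as a percentage."""
    return 100.0 * validated / predicted


per_type = {name: round(ppv(p, v), 1) for name, (p, v) in benchmark.items()}

# The aggregate row pools the counts rather than averaging per-type PPVs.
total_predicted = sum(p for p, _ in benchmark.values())
total_validated = sum(v for _, v in benchmark.values())
aggregate = round(ppv(total_predicted, total_validated), 1)
```

Pooling the counts (28 of 39) gives 71.8%, which weights each cancer type by its number of predicted synergies; a simple mean of the four per-type values would be slightly lower.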

Table 2: Impact of Fine-Tuning on Model Performance

| Training Data Scenario | Mean Squared Error (MSE) | Concordance Index (CI) | Queries with Confidence Score >0.7 |

|---|---|---|---|

| Base Model (Public Data Only) | 125.4 | 0.68 | 62% of queries |

| +10 In-House Combinations | 98.7 | 0.74 | 71% of queries |

| +25 In-House Combinations | 76.2 | 0.81 | 85% of queries |

Pathway & Workflow Visualizations

SynAsk Workflow in Accuracy Research

Synergy Mechanism: PARPi + ATRi in HRD Cancer

The Scientist's Toolkit: Research Reagent Solutions

| Item/Catalog # | Function in Synergy Validation | Key Specification |

|---|---|---|

| CellTiter-Glo 3D (Promega, G9681) | Measures cell viability in 2D/3D cultures post-combination treatment. | Optimized for lytic detection in low-volume, matrix-embedded cells. |

| D300e Digital Dispenser (Tecan) | Enables precise, non-contact dispensing of drug combination matrices in nanoliter volumes. | Creates 6x6 or 8x8 dose-response matrices directly in assay plates. |

| Sanger Sequencing Primers (Custom) | Validates key mutation status (e.g., BRCA1, KRAS) in cell lines pre-experiment. | Designed for 100% coverage of relevant exons; provided with PCR protocol. |

| SynergyFinder R Package (v3.0.0) | Analyzes dose-response matrix data to calculate Bliss, Loewe, and HSA synergy scores. | Includes statistical significance testing and 3D visualization. |

| CCLE Feature Normalization Script (SynAsk GitHub) | Aligns in-house genomic data to the CCLE reference for compatible SynAsk input. | Performs quantile normalization and missing value imputation. |

Technical Support Center: Troubleshooting & FAQs

Frequently Asked Questions (FAQs)

Q1: After running SynAsk predictions, my experimental validation shows poor compound-target binding. What are the primary reasons for this discrepancy?

A: Discrepancies between in silico predictions and experimental binding assays often stem from:

- Protein Flexibility: SynAsk's docking simulation may use a static protein structure, while in reality, binding pockets are dynamic.

- Solvation & Ionic Effects: The in vitro assay buffer conditions (pH, ions) are not fully accounted for in the simulation.

- Compound Tautomer/Protonation State: The predicted ligand state may not match the predominant state under experimental conditions.

- Scoring Function Limitations: The scoring algorithm may prioritize interactions not critical for binding in your specific assay.

Recommended Protocol: Prior to wet-lab testing, always run:

- Molecular Dynamics (MD) Simulation: A short (50 ns) MD simulation of the predicted complex in explicit solvent to assess stability.

- Consensus Docking: Use 2-3 additional docking programs (e.g., AutoDock Vina, Glide) to check for prediction consensus.

- Ligand Preparation Audit: Re-prepare your ligand structure using tools like Epik or MOE to ensure correct protonation at assay pH.

Q2: The SynAsk pipeline suggests a specific cell line for functional validation, but we observe low target protein expression. How should we proceed?

A: This is a common issue in transitioning from prediction to experimental design. Follow this systematic troubleshooting guide:

- Verify Baseline Expression: Perform a western blot (protein) or qPCR (mRNA) to confirm that the target is present but expressed at a low level.

- Check SynAsk's Data Source: SynAsk may pull expression data from general databases (e.g., CCLE). Cross-reference with the Human Protein Atlas or GTEx for tissue-specific validity.

- Inducible System Protocol: If expression is insufficient, consider switching to an inducible expression system.

- Method: Clone your target gene into a tetracycline-inducible (Tet-On) vector (e.g., pLVX-TetOne).

- Transfect/transduce your preferred parental cell line.

- Select with appropriate antibiotic (e.g., Puromycin, 2 µg/mL) for 7 days.

- Induce expression with Doxycycline (1 µg/mL) 24-48 h before assay. Always include an uninduced control.

Q3: Our high-content screening (HCS) data, based on SynAsk-predicted phenotypes, shows high intra-plate variance (Z' < 0.5). What optimization steps are critical?

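A: A Z'-factor below 0.5 means the separation between positive and negative controls is too small relative to their variability for reliable hit calling. Key optimization parameters are summarized below: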

Table 1: Critical Parameters for HCS Assay Optimization

| Parameter | Typical Issue | Recommended Optimization | Target Value |

|---|---|---|---|

| Cell Seeding Density | Over/under-confluence affects readout. | Perform density titration 24h pre-treatment. | 70-80% confluence at assay endpoint. |

| DMSO Concentration | Vehicle mismatch with prediction conditions. | Standardize to ≤0.5% across all wells. | 0.1% - 0.5% (v/v). |

| Incubation Time | Phenotype not fully developed. | Perform time-course (e.g., 24, 48, 72h). | Use timepoint with max signal-to-noise. |

| Positive/Negative Controls | Weak control responses. | Use a known potent inhibitor (positive) and vehicle (negative). | Signal Window (SW) > 2. |

Protocol - Cell Seeding Optimization:

- Harvest cells and prepare a single-cell suspension. Count using an automated cell counter.

- Seed a 96-well plate with a gradient of cells (e.g., 2,000 to 20,000 cells/well in 10 steps). Use 8 replicates per density.

- Incubate for 24 h, then fix and stain nuclei with Hoechst 33342 (1 µg/mL).

- Image and analyze nuclei count/well. Select the density yielding 70-80% confluence.
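
To check whether an optimized plate clears the Z' threshold discussed in Q3, the Z'-factor can be computed from the control wells. The sketch below uses the standard definition, Z' = 1 - 3(SD_pos + SD_neg) / |mean_pos - mean_neg|; the function name and the control readings are hypothetical.

```python
from statistics import mean, stdev


def z_prime(positive, negative):
    """Z'-factor from positive- and negative-control well readouts."""
    mu_p, mu_n = mean(positive), mean(negative)
    if mu_p == mu_n:
        raise ValueError("control means are identical; Z' is undefined")
    return 1.0 - 3.0 * (stdev(positive) + stdev(negative)) / abs(mu_p - mu_n)


# Hypothetical readouts (e.g., nuclei counts) from control wells on one plate.
positive_ctrl = [10, 12, 11, 9, 13]      # potent inhibitor
negative_ctrl = [100, 98, 102, 101, 99]  # vehicle (DMSO)

plate_z = z_prime(positive_ctrl, negative_ctrl)
plate_ok = plate_z >= 0.5
```

Computing Z' per plate, rather than once per experiment, makes it easier to spot individual plates affected by edge effects or dispensing errors.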

Q4: SynAsk predicted a synthetic lethal interaction between Gene A and Gene B. What is the most robust experimental design to validate this in vitro?

A: Validating synthetic lethality requires a multi-step approach controlling for off-target effects.

Core Protocol: Combinatorial Genetic Knockdown with Viability Readout

- Cell Line: Use a genetically stable, relevant cancer cell line.

- Knockdown: Use siRNA (transient) or shRNA (stable) knockdown. The conditions below assume siRNA:

- Condition 1: Non-targeting control siRNA.

- Condition 2: siRNA targeting Gene A alone.

- Condition 3: siRNA targeting Gene B alone.

- Condition 4: Combined siRNA targeting Gene A & B.

- Viability Assay: Use a metabolic activity assay (e.g., CellTiter-Glo) at 96h post-transfection.

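- Data Analysis: Calculate viability as a fraction of the non-targeting control (Condition 1). Synthetic lethality is supported when Condition 4 viability is significantly lower than the product of the Condition 2 and Condition 3 viabilities, which is the viability expected if the two knockdowns act independently.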
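
The comparison in the Data Analysis step can be sketched in Python as shown here. The function names and viability values are hypothetical, and the sketch only computes the effect size; establishing that the difference is statistically significant requires replicate wells and an appropriate test, which is not shown.

```python
def expected_combined_viability(viab_a: float, viab_b: float) -> float:
    """Viability expected if the two knockdowns act independently."""
    return viab_a * viab_b


def lethality_gap(viab_a: float, viab_b: float, viab_ab: float) -> float:
    """Expected minus observed combined viability (positive = more killing)."""
    return expected_combined_viability(viab_a, viab_b) - viab_ab


# Hypothetical viabilities, each normalized to Condition 1 (= 1.0).
gap = lethality_gap(viab_a=0.90, viab_b=0.80, viab_ab=0.35)
```

With these numbers the expected combined viability is 0.72, so an observed 0.35 leaves a gap of about 0.37, consistent with a synthetic lethal interaction if it holds across replicates.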

Q5: When integrating proteomics data to refine SynAsk training, what are the key steps to handle false-positive identifications from mass spectrometry?

A: MS false positives degrade prediction accuracy. Implement this stringent filtering workflow:

- FDR Control: Apply a 1% False Discovery Rate (FDR) at both the peptide and protein levels using target-decoy search strategies.

- Threshold Filtering:

- Require a minimum of 2 unique peptides per protein.

- Set an absolute log-fold change threshold > 1 (for differential expression).

- Apply a significance threshold of p-adj < 0.05 (e.g., from limma-voom or similar).

- Contaminant Removal: Filter against the CRAPome database (v2.0) to remove common contaminants. Remove proteins with frequency > 30% in control samples.
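
The threshold and contaminant filters above can be expressed as a single predicate. The sketch below is a minimal Python illustration that assumes FDR control has already been applied by the search engine upstream. The `ProteinHit` record, its field names, and the example hits are hypothetical, and interpreting the fold-change cutoff as an absolute log2 value is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ProteinHit:
    protein: str
    unique_peptides: int
    log2_fc: float           # assumed log2 fold change
    p_adj: float             # multiple-testing-adjusted p-value
    contaminant_freq: float  # fraction of control samples containing the protein


def passes_filters(
    hit: ProteinHit,
    min_peptides: int = 2,
    min_abs_log2_fc: float = 1.0,
    max_p_adj: float = 0.05,
    max_contaminant_freq: float = 0.30,
) -> bool:
    """Apply the peptide, fold-change, significance, and contaminant cutoffs."""
    return (
        hit.unique_peptides >= min_peptides
        and abs(hit.log2_fc) > min_abs_log2_fc
        and hit.p_adj < max_p_adj
        and hit.contaminant_freq <= max_contaminant_freq
    )


hits = [
    ProteinHit("P1", 5, 1.8, 0.010, 0.05),
    ProteinHit("P2", 1, 2.5, 0.001, 0.02),   # too few unique peptides
    ProteinHit("P3", 4, -1.5, 0.030, 0.10),
    ProteinHit("P4", 6, 2.2, 0.001, 0.45),   # frequent contaminant
    ProteinHit("P5", 3, 0.4, 0.200, 0.01),   # small, non-significant change
]
kept = [h.protein for h in hits if passes_filters(h)]
```

Keeping the cutoffs as keyword arguments makes it straightforward to record the exact thresholds used alongside any data fed back into model training.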

Table 2: Key Reagents for MS-Based Proteomics Validation

| Reagent / Material | Function in Pipeline | Example & Notes |

|---|---|---|

| Trypsin (Sequencing Grade) | Proteolytic digestion of protein samples into peptides for LC-MS/MS. | Promega, Trypsin Gold. Use a 1:50 enzyme-to-protein ratio. |

| TMTpro 18-plex | Isobaric labeling for multiplexed quantitative comparison of up to 18 samples in one run. | Thermo Fisher Scientific. Reduces run-to-run variability. |

| C18 StageTips | Desalting and concentration of peptide samples prior to LC-MS/MS. | Home-made or commercial. Critical for removing salts and detergents. |

| High-pH Reverse-Phase Kit | Fractionation of complex peptide samples to increase depth of coverage. | Thermo Fisher Pierce. Typically generates 12-24 fractions. |

| LC-MS/MS System | Instrumentation for separating and identifying peptides. | Orbitrap Eclipse or Exploris series. Ensure resolution > 60,000 at m/z 200. |

Visualizations

Title: Integrated Prediction-to-Validation Pipeline Workflow

Title: High-Content Screening Assay Development & Execution Flow

Title: Synthetic Lethality Validation Experimental Design

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Toolkit for Pipeline Integration Experiments

| Item Category | Specific Reagent / Kit | Function in Context of SynAsk Pipeline |

|---|---|---|

| In Silico Analysis | MOE (Molecular Operating Environment) | Small-molecule modeling, docking, and scoring to cross-verify SynAsk predictions. |

| Gene Silencing | Dharmacon ON-TARGETplus siRNA | Pooled, SMARTpool siRNAs for high-confidence, minimal off-target knockdown in validation experiments. |

| Cell Viability | Promega CellTiter-Glo 3D | Luminescent ATP assay for viability/cytotoxicity readouts in 2D or 3D cultures post-treatment. |

| Protein Binding | Cytiva Series S Sensor Chip CM5 | Surface Plasmon Resonance (SPR) consumables for direct kinetic analysis (KD, kon, koff) of predicted interactions. |

| Target Expression | Thermo Fisher Lipofectamine 3000 | High-efficiency transfection reagent for introducing inducible expression vectors into difficult cell lines. |

| Pathway Analysis | CST Antibody Sampler Kits | Pre-validated antibody panels (e.g., Phospho-MAPK, Apoptosis) to test predicted signaling effects. |

| Sample Prep for MS | Thermo Fisher Pierce High pH Rev-Phase Fractionation Kit | Increases proteomic depth by fractionating peptides prior to LC-MS/MS, improving ID rates for model training. |

| Data Management | KNIME Analytics Platform | Open-source platform to create workflows linking SynAsk output, experimental data, and analysis scripts. |

Maximizing Performance: Troubleshooting Common Issues and Fine-Tuning SynAsk Models

Diagnosing and Correcting Low-Confidence Predictions

Troubleshooting Guides & FAQs

Q1: During a SynAsk virtual screening run, over 60% of my compound predictions are flagged with "Low Confidence." What are the primary diagnostic steps? A1: Begin by analyzing your input data's alignment with the model's training domain. Low-confidence predictions typically arise from domain shift. Execute the following diagnostic protocol:

- Feature Distribution Analysis: Compare the distribution (mean, standard deviation, range) of key molecular descriptors (e.g., LogP, molecular weight, topological polar surface area) in your query set against the model's training set. A descriptor whose query-set values fall more than 2 standard deviations from the training-set mean is a primary cause of low confidence.

- Similarity Search: For a subset of low-confidence predictions, perform a nearest-neighbor search in the training data using Tanimoto similarity on Morgan fingerprints. If the maximum similarity is consistently below 0.4, the compounds are out-of-domain.