The AI and Robotics Revolution in Synthesis: Accelerating Drug Discovery and Nanomaterial Development

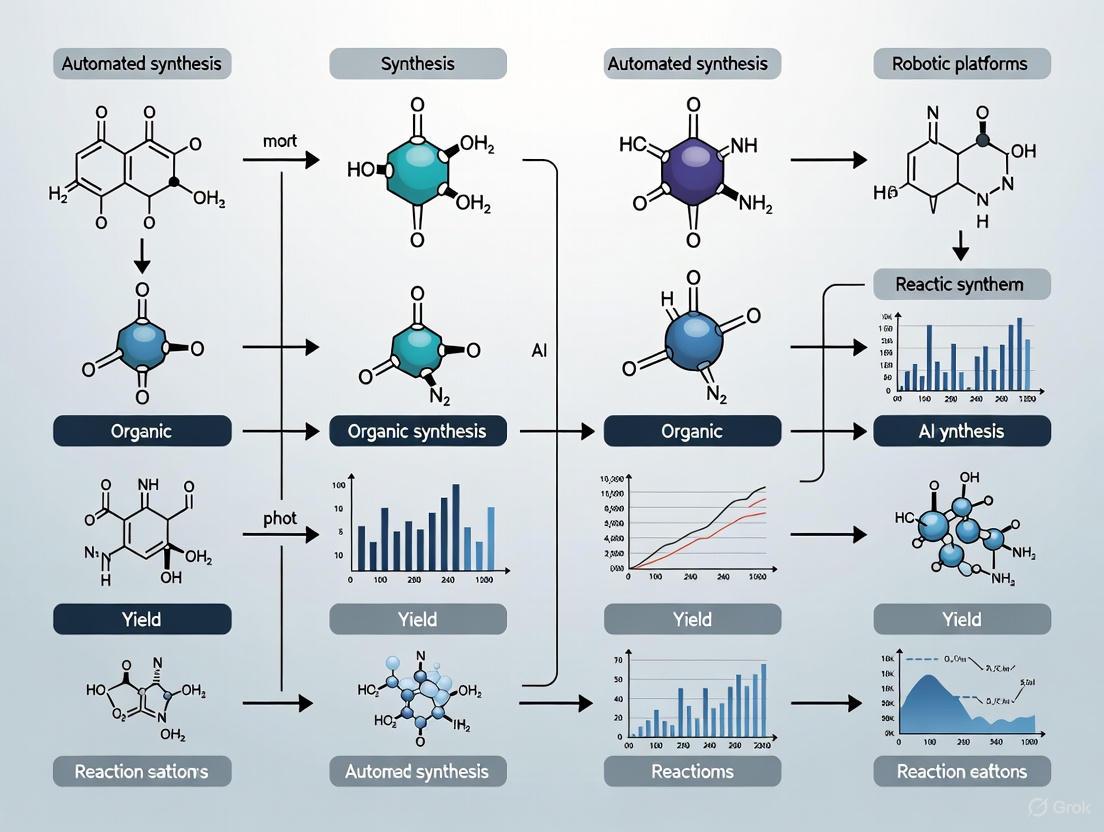


Abstract

This article explores the transformative integration of artificial intelligence (AI) and robotic platforms in chemical and nanomaterial synthesis. It details the foundational shift from traditional, labor-intensive methods to data-driven, automated workflows that are reshaping research and development in pharmaceuticals and materials science. The scope encompasses an examination of core technologies—from high-throughput experimentation (HTE) platforms and closed-loop optimization to machine learning algorithms for retrosynthetic analysis and reaction prediction. Through methodological case studies and comparative analysis of optimization algorithms, the article provides a practical guide for researchers and drug development professionals seeking to implement these technologies. It also addresses key challenges, such as hardware reliability and data scarcity, while validating the approach with documented successes from industry and academia, including accelerated compound optimization and enhanced reproducibility.

From Manual Trial-and-Error to Automated Discovery: The New Paradigm of AI-Driven Synthesis

Traditional research in chemistry and materials science has long relied on manual, trial-and-error methodologies for material synthesis and labor-intensive testing [1]. This approach is inherently limited by its dependence on human intuition and physical execution, leading to significant challenges in reproducibility, scaling, and overall efficiency. These limitations create bottlenecks in critical fields like drug discovery and materials development. The emergence of automated synthesis, powered by robotic platforms and artificial intelligence (AI), represents a paradigm shift. This document details the specific limitations of traditional synthesis and provides application notes and experimental protocols for implementing automated solutions, framing them within the broader thesis that autonomy is the next frontier in materials research [1] [2].

Quantitative Comparison: Traditional vs. Automated Synthesis

The following tables summarize the core challenges of traditional synthesis and the quantitative benefits of automation, drawing from real-world implementations.

Table 1: Core Limitations of Traditional Synthesis

| Challenge | Impact on Research and Development | Qualitative & Quantitative Consequences |
|---|---|---|
| Labor-Intensity | Relies on highly skilled chemists for repetitive tasks [3]. | High operational costs; one analysis cites annual labor costs for manual production at $560,000 [4]. Diverts expert time from high-value innovation. |
| Low Reproducibility | Prone to human error in execution and subjective data interpretation [3]. | Decreased reliability of experimental data; impedes collaboration and scale-up due to inconsistent results. |
| Scalability Challenges | Manual processes are difficult and costly to scale for high-throughput testing or production [3]. | Inefficient transition from lab-scale to industrial production; limits exploration of large chemical spaces. |

Table 2: Benefits of Automated Synthesis Supported by Quantitative Data

| Benefit | Description | Supporting Data from Case Studies |
|---|---|---|
| Increased Efficiency & Reduced Labor | Robotic systems operate continuously and handle repetitive tasks faster than humans. | An automation case study showed a system reduced labor from 8 workers/shift to 1, projecting savings of $548,000 over two years [4]. |
| Enhanced Reproducibility | Automated platforms perform precise, software-controlled liquid handling and operation sequences [3]. | Enables exhaustive analysis and increases reproducibility by removing human error [3]. |
| Improved Scalability & Quality | Enables high-throughput experimentation and seamless transition from discovery to production. | In a manufacturing example, automation reduced cycle time from over 60 seconds to under 45 seconds while increasing consistency and reducing scrap rates [5]. |

Application Note: Implementing a Modular Robotic Platform for Exploratory Synthesis

Background and Principle

A major hurdle in exploratory chemistry is the open-ended nature of product identification, which typically requires multiple, orthogonal analytical techniques. Traditional autonomous systems often rely on a single, hardwired characterization method, limiting their decision-making capability [2]. This application note details a modular workflow using mobile robots to integrate existing laboratory equipment, enabling autonomous, human-like experimentation that shares resources with human researchers without requiring extensive lab redesign [2].

Experimental Protocol

Objective: To autonomously perform synthetic chemistry, characterize products using multiple techniques, and make heuristic decisions on subsequent experimental steps.

Materials and Equipment (The Researcher's Toolkit)

| Category | Item | Function in the Protocol |
|---|---|---|
| Synthesis Module | Chemspeed ISynth synthesizer or equivalent [2]. | Automated platform for performing chemical reactions in parallel. |
| Analytical Modules | UPLC-MS (Ultrahigh-Performance Liquid Chromatography–Mass Spectrometer) [2]. | Provides data on product molecular weight and purity. |
| | Benchtop NMR (Nuclear Magnetic Resonance) Spectrometer [2]. | Provides structural information about the synthesized products. |
| Robotics & Mobility | Mobile Robotic Agents (multiple task-specific or single multipurpose) [2]. | Transport samples between synthesis and analysis modules. |
| Software & Control | Central Database & Host Computer with Control Software [2]. | Orchestrates workflow, stores data, and runs decision-making algorithms. |
| | Heuristic Decision-Maker Algorithm [2]. | Processes UPLC-MS and NMR data to assign pass/fail grades and determine next steps. |

Procedure:

- Synthesis: The Chemspeed ISynth platform executes a batch of reactions as per a pre-defined initial plan. On completion, it automatically takes aliquots of each reaction mixture and reformats them into separate vials for MS and NMR analysis [2].

- Sample Transport: Mobile robotic agents collect the prepared sample vials. The robots then navigate to the respective analytical instruments (UPLC-MS and benchtop NMR) and load the samples for analysis [2].

- Orthogonal Analysis: The UPLC-MS and NMR instruments run their standard analytical methods autonomously. The raw data from both instruments is saved to a central database [2].

- Heuristic Decision-Making: The decision-maker algorithm processes the analytical data for each reaction. Domain-expert-defined criteria are applied to both the MS and NMR data to assign a binary "pass" or "fail" grade for each technique. A reaction must pass both analyses to be considered successful [2].

- Next-Step Execution: Based on the decisions, the control software instructs the synthesis platform on the next set of experiments. This may involve scaling up successful reactions, replicating them to confirm reproducibility, or elaborating them in a divergent synthesis [2].

The following workflow diagram illustrates this cyclic, autonomous process:

Data Interpretation and Heuristic Decision-Making

The heuristic decision-maker is designed to mimic human judgment. For instance, in a supramolecular chemistry screen, pass criteria for MS data might include the presence of a peak corresponding to the target assembly's mass-to-charge ratio. For NMR, a pass could be defined by the appearance of specific diagnostic peaks or a clean, interpretable spectrum. The algorithm combines these orthogonal results to make a conservative, reliable decision on which reactions to advance, thereby navigating complex chemical spaces autonomously [2].
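The pass/fail logic described above can be sketched in a few lines of Python. This is a minimal illustration, not the published decision-maker: the `AnalysisResult` class, the `grade_ms` rule, the m/z tolerance, and the example values are all hypothetical, chosen only to show how two binary grades combine into one conservative decision.

```python
from dataclasses import dataclass


@dataclass
class AnalysisResult:
    """Binary grades for one reaction from the two orthogonal techniques."""
    reaction_id: str
    ms_pass: bool   # e.g. a peak near the expected m/z was found
    nmr_pass: bool  # e.g. the expected diagnostic peaks were present


def grade_ms(observed_mz: list[float], target_mz: float, tol: float = 0.5) -> bool:
    """Hypothetical MS rule: pass if any observed peak lies within tol of the target m/z."""
    return any(abs(mz - target_mz) <= tol for mz in observed_mz)


def select_for_next_step(results: list[AnalysisResult]) -> list[str]:
    """A reaction advances only if it passes BOTH the MS and the NMR criteria."""
    return [r.reaction_id for r in results if r.ms_pass and r.nmr_pass]


# Invented example data: three reactions, one target mass.
results = [
    AnalysisResult("rxn-1", grade_ms([412.2, 823.9], 824.0), nmr_pass=True),
    AnalysisResult("rxn-2", grade_ms([412.2], 824.0), nmr_pass=True),
    AnalysisResult("rxn-3", grade_ms([824.1], 824.0), nmr_pass=False),
]
print(select_for_next_step(results))  # ['rxn-1']
```

Requiring both grades to pass is what makes the decision conservative: `rxn-3` shows the right mass but is still rejected because its NMR grade failed.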

Application Note: An AI-Driven Closed-Loop System for Material Intelligence

Background and Principle

The concept of "Material Intelligence" (MI) is realized by fully embedding AI and robotics into the materials research lifecycle, creating a system that can autonomously plan, execute, and learn from experiments. This approach moves beyond automation to true autonomy, integrating the cycles of data-guided rational design ("reading"), automation-enabled controllable synthesis ("doing"), and autonomy-facilitated inverse design ("thinking") [1].

Experimental Protocol

Objective: To create a closed-loop system where AI directs robotic platforms to discover and optimize materials based on a target property, effectively encoding material formulas into a deployable "material code" [1].

Materials and Equipment (The Researcher's Toolkit)

| Category | Item | Function in the Protocol |
|---|---|---|
| AI/Software Layer | Computer-Aided Synthesis Planning (CASP) Tools (e.g., ChemAIRS, IBM RXN) [6] [7]. | Plans viable synthetic routes for target molecules. |
| | Predictive ML Models for reaction outcomes, selectivity, or material properties [8]. | Guides the inverse design process by predicting performance. |
| Robotic Platform | Integrated Robotic Synthesis System (e.g., Chemspeed, Chemputer) [3]. | Executes the physical synthesis as directed by the AI. |
| | In-line or On-line Analytical Instruments (e.g., HPLC, MS, NMR) [2]. | Provides real-time or rapid feedback on reaction outcomes. |
| Data Infrastructure | Centralized Data Repository with ML-Optimized Data Management. | Stores all experimental data and trains the AI models for continuous improvement. |

Procedure:

- Reading (Rational Design): The cycle begins with existing data. A target material property is defined. AI models screen existing databases and scientific literature to propose candidate molecules or materials that should exhibit the desired property [1].

- Doing (Controllable Synthesis): The proposed candidates are passed to a CASP tool, which generates feasible synthetic routes. The optimal route is selected and translated into machine-readable code. A robotic synthesis platform then executes the synthesis and subsequent purification steps autonomously [1] [3].

- Thinking (Inverse Design): The synthesized material is characterized, and its properties are measured. This new data point is fed back into the central database. Machine learning models are retrained on this expanded dataset, improving their predictive accuracy. The AI then uses these refined models to propose a new, potentially improved, set of candidate materials for the next iteration, effectively "thinking" of what to make next based on what it has learned [1].

This creates a self-improving cycle, as visualized below:

Data Interpretation and AI Learning

The power of this protocol lies in the AI's ability to learn from multimodal data. For example, if the goal is to discover a new organic photocatalyst, the AI would be trained on data linking molecular structure to photocatalytic activity. After each synthesis and performance test, the model updates its understanding of structure-property relationships. Over multiple cycles, it learns to propose molecules that are not just similar to known catalysts but are novel and optimized based on the learned design principles, dramatically accelerating the discovery process [1] [8].
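The read-do-think cycle can be illustrated with a deliberately small sketch. Everything here is an assumption made for illustration: `measure` is a toy stand-in for robotic synthesis plus characterization, the candidates are plain integers rather than molecules, and the surrogate is a nearest-neighbour lookup rather than a trained ML model. The proposal rule is purely greedy; a real system would typically also balance exploration, for example with Bayesian optimization.

```python
def measure(x: int) -> float:
    """Toy stand-in for 'synthesize and test': best property value at x = 13."""
    return -float((x - 13) ** 2)


def predict(x: int, data: dict[int, float]) -> float:
    """Nearest-neighbour surrogate: reuse the value of the closest measured candidate."""
    nearest = min(data, key=lambda known: abs(known - x))
    return data[nearest]


def run_loop(candidates, seed_points, n_cycles):
    # "Reading": start from data that already exists.
    data = {x: measure(x) for x in seed_points}
    for _ in range(n_cycles):
        untested = [x for x in candidates if x not in data]
        if not untested:
            break
        # "Thinking": propose the untested candidate with the best predicted property.
        proposal = max(untested, key=lambda x: predict(x, data))
        # "Doing": run the experiment and feed the result back into the data set,
        # which updates the surrogate for the next cycle.
        data[proposal] = measure(proposal)
    return data


history = run_loop(candidates=range(21), seed_points=[0, 10, 20], n_cycles=8)
best = max(history, key=history.get)
print(best, history[best])
```

Because the surrogate is rebuilt from `data` on every call, each new measurement immediately changes what the loop proposes next, which is the essential property of the closed loop.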

Core Concepts and Definitions

In the landscape of modern scientific research, particularly within drug discovery and materials science, three interconnected paradigms are accelerating the pace of innovation: High-Throughput Experimentation (HTE), Closed-Loop Optimization, and Self-Driving Labs (SDLs). These methodologies leverage automation, data science, and artificial intelligence to create more efficient and predictive research workflows.

High-Throughput Experimentation (HTE) is a method for scientific discovery that uses robotics, data processing software, liquid handling devices, and sensitive detectors to quickly conduct millions of chemical, genetic, or pharmacological tests [9]. In chemistry, HTE allows the execution of large arrays of hypothesis-driven, rationally designed experiments in parallel, requiring less effort per experiment compared to traditional means [10]. It is a powerful tool for reaction discovery, optimization, and for examining the scope of chemical transformations.

Closed-Loop Optimization refers to an automated, iterative process where the results of an experiment are immediately fed back into an AI-driven decision-making system. This system then designs and executes the subsequent set of experiments without human intervention [11] [12]. The core of this process is the Design-Make-Test-Analyze (DMTA) cycle, which is compressed from weeks or days to a matter of hours. The "closed loop" is achieved when the testing results directly influence the next design cycle, creating a continuous, autonomous optimization process [11].

Self-Driving Labs (SDLs) represent the most complete form of automation in research: they combine fully automated experiments with artificial intelligence that decides the next set of experiments [13]. Taken to their ultimate expression, SDLs represent a new paradigm in which the world is probed, interpreted, and explained by machines for human benefit. They integrate the physical hardware for automated execution (the "Make" and "Test" phases) with the AI "brain" that handles the "Design" and "Analyze" phases, effectively closing the loop [13] [12].

The relationship between these concepts is hierarchical and integrated. HTE provides the foundational technology for rapid, parallelized experimental execution. Closed-loop optimization is the functional process that uses HTE within an iterative, AI-guided cycle. An SDL is a physical and software manifestation that fully embodies closed-loop optimization, making the entire research process autonomous.

Applications in Automated Synthesis and AI Research

The integration of these concepts is transforming research in synthetic chemistry and nanomaterials development, enabling the rapid discovery and optimization of molecules and materials with desired properties.

Small Molecule Discovery in Medicinal Chemistry

The development of the Cyclofluidic Optimisation Platform (CyclOps) exemplifies a closed-loop system for small molecule discovery. This platform was designed to slash the cycle time between designing, making, and testing new compounds from weeks to just hours [11]. The platform seamlessly integrated:

- Flow Synthesis: Utilizing a commercial tube-based reaction platform for flexibility, allowing for the merging of reagent streams and easy adjustment of reactor volume [11].

- Automated Purification and Analysis: An HPLC system with an evaporative light scattering detector (ELSD) for quantitation was integrated to purify and quantify reaction products automatically. The product was captured via a "heart cut" from the HPLC peak [11].

- Flow-Based Biochemical Assay: A custom-built assay using capillary tubing and a nano-HPLC pump to create gradients of reagents enabled high-throughput biological testing in a flow environment [11].

In one demonstration, the platform successfully prepared and assayed 14 thrombin inhibitors in a seamless process in less than 24 hours, a significant milestone in achieving an integrated make-and-test platform [11].

Autonomous Nanomaterial Synthesis

A state-of-the-art application is an autonomous robotic platform that integrates a Generative Pre-trained Transformer (GPT) model for literature mining and an A* algorithm for closed-loop optimization of nanomaterial synthesis [12]. This platform demonstrates the SDL concept for producing nanomaterials like gold nanorods (Au NRs) and silver nanocubes (Ag NCs).

The workflow is as follows:

- Literature Mining: A GPT model, trained on hundreds of papers, retrieves and suggests synthesis methods and initial parameters based on natural language queries [12].

- Automated Execution: A commercial "Prep and Load" (PAL) system, equipped with robotic arms, agitators, a centrifuge, and a UV-vis spectrometer, executes the synthesis and characterization based on the generated scripts [12].

- Closed-Loop Optimization: The UV-vis characterization data (e.g., LSPR peak position) is fed to the A* algorithm, which plans the next set of synthesis parameters to efficiently converge toward the target material properties [12].

This platform showcased its efficiency by comprehensively optimizing synthesis parameters for multi-target Au nanorods across 735 experiments, and for Au nanospheres and Ag nanocubes in just 50 experiments [12]. The A* algorithm was shown to outperform other optimization algorithms like Optuna and Olympus in search efficiency within this discrete parameter space [12].

AI-Driven Drug Discovery Platforms

Companies like Exscientia and Onepot.AI have operationalized these concepts at the industrial level. Exscientia's platform uses AI to design small molecules that meet specific target profiles, which are then synthesized and tested in an automated fashion. The company reports design cycles that are ~70% faster and that require 10x fewer synthesized compounds than industry norms, compressing the early drug discovery timeline from a typical ~5 years to as little as two years in some cases [14]. Its "Centaur Chemist" approach combines algorithmic creativity with automated, robotics-mediated synthesis and testing, creating a closed-loop Design-Make-Test-Learn cycle [14].

Similarly, Onepot.AI uses an AI model named "Phil" to plan synthetic routes for molecules, which are then executed by a fully automated system called POT-1. The company claims it can deliver new compounds up to 10 times faster than traditional methods, with an average turnaround of 5 days [15]. The AI learns from every experimental run, whether successful or not, continuously improving its predictive capabilities and closing the loop [15].

Table 1: Performance Metrics of Automated Discovery Platforms

| Platform / Company | Application Area | Reported Efficiency | Key Metric |
|---|---|---|---|
| Cyclofluidic (CyclOps) [11] | Small Molecule Drug Discovery | 14 compounds prepared and assayed in <24 hours | Cycle time slashed from weeks to hours |
| Exscientia [14] | AI-Driven Drug Discovery | Design cycles ~70% faster | 10x fewer compounds synthesized |
| Onepot.AI [15] | Chemical Synthesis | Delivery of compounds up to 10x faster | Average 5-day turnaround |
| Autonomous Nanomaterial Platform [12] | Nanomaterial Synthesis | Multi-target optimization in 735 experiments | High reproducibility (LSPR peak deviation ≤1.1 nm) |

Experimental Protocols

Below are detailed protocols for implementing a closed-loop optimization system, drawing from the methodologies of the platforms described.

Protocol: Closed-Loop Optimization for a Small Molecule SAR

This protocol is adapted from the CyclOps platform for generating structure-activity relationship (SAR) data autonomously [11].

Objective: To autonomously synthesize and test a series of analogues for biochemical activity against a target kinase.

The Scientist's Toolkit

Table 2: Key Research Reagent Solutions for Small Molecule SAR

| Item | Function / Explanation |
|---|---|
| Reagent Stock Solutions | Pre-dispensed libraries of building blocks (e.g., aryl halides, boronic acids, amines) in DMSO or other solvents. Allows for rapid, liquid-handling-based setup of reaction arrays [10]. |
| Catalyst/Ligand Plates | Pre-prepared microtiter plates containing common catalysts and ligands. Decouples the effort of weighing solids from experimental setup, dramatically accelerating the process [10]. |
| HPLC with ELSD | High-Performance Liquid Chromatography with an Evaporative Light Scattering Detector. Used for automated purification ("heart cutting") and, crucially, for quantitation of the product without the need for a chromophore [11]. |
| Flow Biochemistry Chip/Capillary | A microfluidic device (e.g., glass chip or 75 µm ID capillary) that serves as the reactor for the biological assay. Enables rapid, continuous-flow testing with minimal reagent consumption [11]. |

Procedure:

AI-Driven Design:

- The AI (e.g., a generative model) designs a set of novel molecular structures based on the target product profile and prior SAR.

- The system enumerates the required chemical reactions and outputs a list of reagents to be drawn from the stock library.

Automated Synthesis & Purification:

- A liquid handler transfers the designated reagents from the stock library into reaction vials or a flow reactor system.

- Reaction Conditions: Reactions are conducted in a commercial tube-based flow synthesis platform. The system is configured with appropriate reactor volume (material, diameter, length) and temperature control.

- Reaction Example: A sequential Suzuki and Buchwald-Hartwig coupling can be telescoped by introducing the second set of reagents at a designated point in the flow reactor [11].

- The reaction mixture is automatically directed to an integrated HPLC-ELSD system.

- The peak corresponding to the desired product is identified, and a "heart cut" is taken to capture the pure product.

- The product stream is automatically diluted with assay buffer to a concentration suitable for biological testing.

Automated Biochemical Testing:

- The diluted compound solution is injected into the flow-based biochemical assay platform.

- The assay utilizes a nano-HPLC pump to create a precise gradient of the test compound against the target enzyme and substrates within the capillary.

- A detector (e.g., fluorescence) takes a rapidly sampled readout at a single point in the flow path, generating a rich data set for calculating IC₅₀ values [11].

Data Analysis and Loop Closure:

- The assay data (e.g., % inhibition, IC₅₀) is automatically processed and formatted.

- This data is fed back into the AI design model.

- The AI analyzes the new SAR, updates its internal model, and designs the next, more optimal set of compounds to synthesize, thus closing the loop.
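As an illustration of the data-processing step in this protocol, the sketch below estimates an IC₅₀ from a handful of inhibition readings by interpolating linearly on log₁₀(concentration) between the two points that bracket 50% inhibition. This is a simplified stand-in: the source does not specify how the platform fits its data, a full dose-response fit (for example a four-parameter logistic model) would normally be preferred, and the concentrations and inhibition values are invented for the example.

```python
import math


def estimate_ic50(concentrations_nm, inhibition_pct):
    """Estimate IC50 by log-linear interpolation around 50 % inhibition.

    Returns None if the data never cross 50 % on a rising segment.
    """
    points = sorted(zip(concentrations_nm, inhibition_pct))
    for (c_lo, y_lo), (c_hi, y_hi) in zip(points, points[1:]):
        if y_lo <= 50.0 <= y_hi and y_hi > y_lo:
            # Fraction of the way from the lower to the upper bracketing point.
            frac = (50.0 - y_lo) / (y_hi - y_lo)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_ic50
    return None


# Invented readings: concentration in nM, inhibition in percent.
ic50 = estimate_ic50([1, 10, 100, 1000], [5, 30, 70, 95])
print(f"IC50 ~ {ic50:.1f} nM")  # IC50 ~ 31.6 nM
```

Here 50% inhibition falls halfway between the 10 nM and 100 nM readings, so the estimate is 10^1.5, about 31.6 nM. A value like this, paired with the compound's structure, is what the design model would receive when the loop closes.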

Protocol: Autonomous Synthesis of Gold Nanorods via the A* Algorithm

This protocol is based on the automated nanomaterial platform, highlighting the use of a heuristic search algorithm for optimization [12].

Objective: To autonomously discover synthesis parameters that produce gold nanorods (Au NRs) with a target Longitudinal Surface Plasmon Resonance (LSPR) peak within 600-900 nm.

Procedure:

Initialization and Literature Mining:

- The researcher provides the target: "Synthesize gold nanorods with an LSPR peak at X nm."

- The integrated GPT model queries its database of scientific literature on Au nanoparticles and suggests an initial synthesis method and a starting set of parameters (e.g., concentrations of gold precursor, capping agents, and reducing agents) [12].

Automated Experimental Execution:

- The platform's control software converts the suggested method into an executable script (`.mth` file) for the PAL robotic system.

- The robotic arms prepare the reaction mixture in a vial by transferring calculated volumes of stock solutions using the liquid handling tools.

- The reaction vial is transferred to an agitator for mixing and incubation under controlled conditions (e.g., temperature, time).

- After the reaction is complete, an aliquot of the product is transferred to a UV-vis spectrophotometer for characterization.

Data Processing and Decision Making:

- The UV-vis spectrum is automatically analyzed to extract key features (LSPR peak wavelength, Full Width at Half Maximum - FWHM).

- The result (current LSPR peak) and the synthesis parameters used are sent to the A* optimization module.

- The A* algorithm, functioning as a heuristic search in a discrete parameter space, calculates the cost-to-target and plans the most efficient path through the parameter space. It then outputs a new, updated set of synthesis parameters predicted to bring the LSPR peak closer to the target [12].
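The feature-extraction step in this part of the protocol (peak wavelength and FWHM) can be sketched as follows. This is a minimal illustration under stated assumptions: the spectrum is already baseline-corrected, the longitudinal band is the global maximum of the supplied wavelength window, and the half-maximum crossings are found by linear interpolation. The function name and the example spectrum are hypothetical.

```python
def peak_and_fwhm(wavelengths_nm, absorbance):
    """Return (peak wavelength, FWHM) for a single-band, baseline-corrected spectrum."""
    i_max = max(range(len(absorbance)), key=absorbance.__getitem__)
    half = absorbance[i_max] / 2.0

    def crossing(indices):
        # Walk away from the peak until the absorbance drops below half maximum,
        # then interpolate linearly between the last two points.
        prev = i_max
        for i in indices:
            if absorbance[i] < half:
                x0, y0 = wavelengths_nm[prev], absorbance[prev]
                x1, y1 = wavelengths_nm[i], absorbance[i]
                return x0 + (half - y0) * (x1 - x0) / (y1 - y0)
            prev = i
        return None  # the band does not fall below half maximum on this side

    left = crossing(range(i_max - 1, -1, -1))
    right = crossing(range(i_max + 1, len(absorbance)))
    fwhm = None if left is None or right is None else right - left
    return wavelengths_nm[i_max], fwhm


# Invented triangular spectrum centred at 780 nm.
wl = [700, 720, 740, 760, 780, 800, 820, 840, 860]
ab = [0.0, 0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25, 0.0]
print(peak_and_fwhm(wl, ab))  # (780, 80.0)
```

In practice the wavelength window should be restricted so that other absorption bands in the spectrum are not mistaken for the longitudinal peak.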

Loop Closure:

- The new parameters are automatically fed back to the robotic system, which prepares and tests the next sample.

- This process repeats autonomously until the LSPR peak of the synthesized Au NRs meets the target specification within a pre-defined tolerance (e.g., ±5 nm). The platform demonstrated the ability to conduct this search across 735 experiments to meet multi-target objectives [12].
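To make the search step concrete, here is a small A* sketch over a discrete two-parameter grid. It is not the implementation from [12]: the grid values, the step cost (one experiment per move), the heuristic, and above all `simulate_lspr`, a toy linear response that replaces the real synthesis and UV-vis measurement, are assumptions made for illustration.

```python
import heapq

# Hypothetical discrete grid: index -> dispensed volume in µL for two stock solutions.
GOLD_PRECURSOR_UL = [20, 40, 60, 80, 100, 120]
SEED_UL = [10, 20, 30, 40]


def simulate_lspr(state):
    """Toy stand-in for 'synthesize + measure'. NOT a physical model."""
    i, j = state
    return 620.0 + 1.5 * GOLD_PRECURSOR_UL[i] - 1.0 * SEED_UL[j]


def neighbors(state):
    """Move one grid step in exactly one parameter."""
    i, j = state
    for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        ni, nj = i + di, j + dj
        if 0 <= ni < len(GOLD_PRECURSOR_UL) and 0 <= nj < len(SEED_UL):
            yield (ni, nj)


def a_star(start, target_nm, tol_nm=5.0, max_shift_per_step=30.0):
    """Return the shortest path of grid states from start to a state within tolerance."""
    def h(state):
        # Lower bound on remaining steps: distance to target / largest shift per step.
        return abs(simulate_lspr(state) - target_nm) / max_shift_per_step

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, state)
    best_g = {start: 0}
    parent = {start: None}
    while open_heap:
        _, g, state = heapq.heappop(open_heap)
        if g > best_g[state]:
            continue  # stale heap entry
        if abs(simulate_lspr(state) - target_nm) <= tol_nm:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in neighbors(state):
            if g + 1 < best_g.get(nxt, float("inf")):
                best_g[nxt] = g + 1
                parent[nxt] = state
                heapq.heappush(open_heap, (g + 1 + h(nxt), g + 1, nxt))
    return None


path = a_star(start=(0, 0), target_nm=760.0)
print(path, simulate_lspr(path[-1]))
```

In this toy model one grid step shifts the peak by at most 30 nm, so the heuristic never overestimates the remaining steps and the returned path is the shortest one. On a real platform every call to `simulate_lspr` would be an actual experiment, so results would be cached and the number of expanded states is the quantity to minimize.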

Workflow and Signaling Pathways

The following diagrams illustrate the core workflows and decision-making processes that define closed-loop optimization and self-driving labs.

Core Closed-Loop Optimization Workflow (Design-Make-Test-Analyze)

This diagram visualizes the fundamental iterative cycle that forms the backbone of autonomous research systems.

Self-Driving Lab Architecture for Nanomaterial Synthesis

This diagram details the specific architecture of an SDL, incorporating the A* algorithm and GPT model as described in the nanomaterial synthesis platform [12].

Essential Materials and Reagents

Successful implementation of these protocols relies on a core set of reagents and automated hardware.

Table 3: The Scientist's Toolkit for an Automated Synthesis Lab

| Category | Item | Function / Explanation |
|---|---|---|
| AI & Software | Generative AI / LLM (e.g., GPT) | For initial experimental design, literature mining, and route suggestion [12] [15]. |
| | Optimization Algorithm (e.g., A*, Bayesian) | The "brain" for closed-loop optimization; decides the next experiment based on results [12]. |
| Hardware & Robotics | Liquid Handling Robot / Microtiter Plates | Core of HTE; enables parallel dispensing of reagents in 96, 384, or 1536-well formats for massive experimentation [9] [10]. |
| | Integrated Robotic Platform (e.g., PAL system) | A modular system with robotic arms, agitators, centrifuges, and parking stations to perform complex, multi-step protocols [12]. |
| | Flow Chemistry Reactor | A tube or chip-based system for continuous synthesis, offering flexibility and control over reaction parameters [11]. |
| | Automated Purification (HPLC/ELSD) | Provides on-line purification and quantitation of synthesis products, a critical step before assay [11]. |
| | In-line Analyzer (e.g., UV-vis) | For real-time, automated characterization of reaction outputs, providing the data for the decision algorithm [12]. |
| Chemistry & Reagents | Reagent & Catalyst Libraries | Pre-dispensed, curated collections of starting materials, catalysts, and ligands that enable rapid assembly of experimental arrays [10]. |
| | Core Reaction Building Blocks | Key reagents for common transformations (e.g., boronic acids for Suzuki coupling, amines for amide coupling) to ensure broad synthetic scope [11] [15]. |

The integration of artificial intelligence (AI), robotic hardware, and seamless data integration is revolutionizing chemical and materials synthesis. This paradigm shift addresses the profound inefficiencies of traditional labor-intensive, trial-and-error methods, enabling accelerated discovery and development across pharmaceuticals and materials science [12] [8]. Automated platforms, often termed Self-Driving Labs (SDLs), combine machine learning with automated experimentation to create closed-loop systems that rapidly navigate complex chemical spaces [16]. This document details the core components and operational protocols for establishing a robust automated synthesis platform, providing a framework for researchers and drug development professionals to harness this transformative technology.

Core Platform Components

An effective automated synthesis platform rests on three interconnected pillars: the robotic hardware that performs physical tasks, the AI algorithms that guide decision-making, and the data infrastructure that connects them.

Robotic Hardware

The hardware component forms the physical backbone of the platform, responsible for the precise execution of synthesis and characterization tasks. Commercial, modular systems are often employed to ensure reproducibility and transferability between laboratories [12].

A representative example is the Prep and Load (PAL) system, which typically includes the following modules [12]:

- Robotic Arms: Z-axis arms for liquid handling and transferring reaction vessels between stations.

- Agitators: Modules for mixing reaction mixtures, often with multiple reaction sites.

- Centrifuge Module: For separating precipitates from solutions.

- Fast Wash Module: To clean injection needles and tools between steps to prevent cross-contamination.

- UV-vis Spectrometer Module: For in-line characterization of synthesized nanomaterials.

- Solution Modules and Tray Holders: For storing and accessing reagents and samples.

This modular design allows the platform to be reconfigured for different experimental tasks, such as vortex mixing or ultrasonication, enhancing its versatility [12]. The use of commercially available equipment helps standardize experimental procedures and ensures the reproducibility of results across different automated platforms [12].

AI and Machine Learning Algorithms

AI algorithms serve as the cognitive core of the platform, planning experiments, interpreting results, and guiding the iterative optimization process. Different algorithms are suited to distinct aspects of the discovery workflow.

Table 1: Key AI Algorithms in Automated Synthesis

| Algorithm | Primary Function | Application Example | Performance Benchmark |
|---|---|---|---|
| Generative Pre-trained Transformer (GPT) | Retrieves synthesis methods and parameters from literature; assists in experimental design [12]. | Generating practical nanoparticle synthesis procedures from academic papers [12]. | N/A |
| A* Algorithm | A heuristic search algorithm for optimal pathfinding in a discrete parameter space [12]. | Comprehensive optimization of synthesis parameters for multi-target Au nanorods [12]. | Outperformed Optuna and Olympus in search efficiency, requiring fewer iterations [12]. |
| Transformer-based Sequence-to-Sequence Model | Converts unstructured experimental procedures from text to structured, executable action sequences [17]. | Translating prose from patents or journals into a sequence of synthesis actions (e.g., Add, Stir, Wash) [17]. | Achieved a perfect (100%) action sequence match for 60.8% of sentences [17]. |
| Active Learning | An ML model iteratively selects the most informative experiments to run based on previous results [18]. | Prioritizing the most relevant studies for screening in evidence synthesis; can be applied to compound screening [18]. | Reduces the number of records requiring human screening in systematic reviews [18]. |

Data Integration and Management

Data integration forms the central nervous system of the platform, enabling the closed-loop operation. It involves the continuous flow of information from experimental planning to execution and analysis.

- Literature Mining and Knowledge Extraction: Large Language Models (LLMs) like GPT and embedding models like Ada can process vast scientific literature. They compress and parse papers, construct a searchable vector database, and retrieve specific synthesis methods and parameters based on user queries [12].

- Real-Time Data Acquisition and Analysis: Characterization data (e.g., from in-line UV-vis spectroscopy) is automatically uploaded to a specified location and fed directly into the AI decision algorithms [12]. Platforms like Berkeley Lab's "Distiller" stream data from instruments like electron microscopes to supercomputers for near-instantaneous analysis, allowing researchers to make real-time decisions [19].

- The Closed-Loop Workflow: This integrated data flow creates a tight "design-make-test-analyze" cycle. For instance, the AI plans an experiment, the robotic platform executes it and collects characterization data, the data is automatically analyzed, and the AI uses the results to plan the next, more optimal experiment [12] [14]. This loop continues until the target material property or molecule is achieved.
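The literature-retrieval idea in the first bullet above (embed documents, store the vectors, return the closest match to a query) can be illustrated with a toy sketch. The `embed` function here is a bag-of-words counter standing in for a real embedding model, the in-memory dictionary stands in for a vector database, and the three document snippets are invented; none of this reflects the actual pipeline in [12].

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: bag-of-words term counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Invented snippets standing in for parsed papers.
corpus = {
    "doc-1": "seed mediated growth of gold nanorods with ctab",
    "doc-2": "polyol synthesis of silver nanocubes",
    "doc-3": "citrate reduction for gold nanospheres",
}
index = {doc_id: embed(text) for doc_id, text in corpus.items()}  # the "vector database"


def retrieve(query, k=1):
    """Return the ids of the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda doc_id: cosine(q, index[doc_id]), reverse=True)
    return ranked[:k]


print(retrieve("gold nanorods synthesis"))  # ['doc-1']
```

A learned embedding would also match paraphrases and synonyms that share no exact words with the query, which is the main reason to use one instead of term counts.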

Experimental Protocols

Protocol: Closed-Loop Optimization of Nanomaterial Synthesis

This protocol details the procedure for using an AI-driven robotic platform to optimize the synthesis of gold nanorods (Au NRs) with a target longitudinal surface plasmon resonance (LSPR) peak, based on the work of [12].

Research Reagent Solutions

Table 2: Essential Materials for Au NR Synthesis

| Item Name | Function / Explanation |

|---|---|

| Gold Salt Precursor | (e.g., Chloroauric acid) Source of Au(III) ions for reduction to form nanostructures. |

| Reducing Agent | (e.g., Sodium borohydride) Reduces metal ions to their zerovalent atomic state. |

| Structure-Directing Agent | (e.g., Cetyltrimethylammonium bromide, CTAB) Directs crystal growth into specific shapes (e.g., rods) by binding to specific crystal facets. |

| Seed Solution | Small Au nanoparticle seeds to initiate heterogeneous growth of nanorods. |

| Deionized Water | Solvent for all aqueous-phase reactions. |

Methodology

Initialization and Script Editing:

- Use the integrated GPT model to query the literature database for established Au NR synthesis methods. The model will return key reagents and procedural steps [12].

- Manually edit or select the platform's automated operation script (e.g., .mth or .pzm files) based on the steps generated by the AI. This script defines the hardware operations for the synthesis [12].

Parameter Input and First Experiment:

- Input initial guesses for key synthesis parameters (e.g., concentrations of precursors, reaction temperature, time) into the platform's control software.

- Initiate the automated run. The robotic platform will:

- Use its robotic arms to aspirate and dispense reagents from the solution module in the specified quantities.

- Transfer the reaction vessel to an agitator for mixing.

- Quench the reaction after a set time.

- Transfer an aliquot of the product to the integrated UV-vis spectrometer for characterization [12].

Data Upload and AI Decision Cycle:

- The UV-vis spectrum (including LSPR peak position and Full Width at Half Maximum, FWHM) is automatically uploaded to a designated folder along with the corresponding synthesis parameters.

- This data file serves as the input for the A* optimization algorithm. The algorithm evaluates the result against the target (e.g., LSPR peak between 600-900 nm) and heuristically searches the discrete parameter space to propose a new, more optimal set of synthesis parameters for the next experiment [12].
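
As an illustration of the two quantities passed to the optimizer, the sketch below extracts a peak position and FWHM from a spectrum. It assumes a baseline-corrected spectrum with a single dominant, positive peak; real Au NR spectra also contain a transverse band and would need windowing first. The function name `peak_and_fwhm` is hypothetical and is not taken from the platform in [12].

```python
import math
from typing import Sequence, Tuple


def peak_and_fwhm(wavelengths: Sequence[float],
                  absorbances: Sequence[float]) -> Tuple[float, float]:
    """Return (peak wavelength, full width at half maximum) for a single-peak spectrum."""
    i_max = max(range(len(absorbances)), key=lambda i: absorbances[i])
    half = absorbances[i_max] / 2.0

    def half_max_crossing(step: int) -> float:
        # Walk away from the peak until the next point is at or below half maximum.
        i = i_max
        while 0 <= i + step < len(absorbances) and absorbances[i + step] > half:
            i += step
        j = i + step
        if not 0 <= j < len(absorbances):
            raise ValueError("spectrum does not drop to half maximum inside the scan range")
        # Linear interpolation between the last point above and the first point below.
        frac = (absorbances[i] - half) / (absorbances[i] - absorbances[j])
        return wavelengths[i] + frac * (wavelengths[j] - wavelengths[i])

    return wavelengths[i_max], half_max_crossing(+1) - half_max_crossing(-1)


# Synthetic Gaussian band centred at 780 nm (sigma = 30 nm), sampled every 1 nm.
wl = [600.0 + i for i in range(301)]
spectrum = [math.exp(-((w - 780.0) ** 2) / (2 * 30.0 ** 2)) for w in wl]
peak_nm, fwhm_nm = peak_and_fwhm(wl, spectrum)
print(peak_nm, round(fwhm_nm, 1))
```

For a Gaussian the FWHM equals 2·sqrt(2·ln 2)·sigma, which gives a quick sanity check on the interpolation.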

Iteration and Convergence:

- The two preceding steps (Parameter Input and First Experiment; Data Upload and AI Decision Cycle) are repeated autonomously in a closed loop.

- The A* algorithm continues to navigate the parameter space until the synthesized Au NRs meet the predefined target criteria (e.g., LSPR within a specific narrow range with a minimal FWHM for uniformity) [12].

- The process can be terminated after a fixed number of experiments or when performance plateaus.
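
The source does not spell out how the cost and heuristic are defined on the platform in [12], so the following is only a generic A* sketch over a grid of discrete parameter levels. The assumptions are: each move changes one parameter by one level and costs one step, the heuristic is the remaining gap to the target divided by the largest change a single step can produce (`scale`), and `measure` is a hypothetical stand-in for running one experiment.

```python
import heapq
from itertools import count
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Node = Tuple[int, ...]


def a_star_parameter_search(
    levels: Sequence[Sequence[float]],
    start: Node,
    measure: Callable[[Tuple[float, ...]], float],
    target: float,
    tolerance: float,
    scale: float,
) -> Optional[Tuple[Tuple[float, ...], float, int]]:
    """Return (settings, response, experiments used), or None if no node meets the target."""
    cache: Dict[Node, float] = {}

    def settings(node: Node) -> Tuple[float, ...]:
        return tuple(levels[d][i] for d, i in enumerate(node))

    def response(node: Node) -> float:
        if node not in cache:  # each new node costs one (simulated) experiment
            cache[node] = measure(settings(node))
        return cache[node]

    tie = count()  # tie-breaker so the heap never compares nodes directly
    open_heap: List[Tuple[float, int, int, Node]] = [
        (abs(response(start) - target) / scale, next(tie), 0, start)
    ]
    best_g: Dict[Node, int] = {start: 0}
    while open_heap:
        _, _, g, node = heapq.heappop(open_heap)
        if g > best_g.get(node, g):
            continue  # stale heap entry
        if abs(response(node) - target) <= tolerance:
            return settings(node), response(node), len(cache)
        for d in range(len(node)):
            for step in (-1, 1):
                i = node[d] + step
                if 0 <= i < len(levels[d]):
                    nxt = node[:d] + (i,) + node[d + 1:]
                    if g + 1 < best_g.get(nxt, float("inf")):
                        best_g[nxt] = g + 1
                        f = g + 1 + abs(response(nxt) - target) / scale
                        heapq.heappush(open_heap, (f, next(tie), g + 1, nxt))
    return None


# Hypothetical response surface: peak (nm) from AgNO3 volume (mL) and temperature (deg C).
def toy_measure(s: Tuple[float, ...]) -> float:
    return 600.0 + 80.0 * s[0] + 2.0 * (s[1] - 20.0)


grid = [[0.5, 1.0, 1.5, 2.0, 2.5], [20, 25, 30, 35]]
print(a_star_parameter_search(grid, (0, 0), toy_measure, target=800.0, tolerance=5.0, scale=40.0))
```

Because the toy surface changes by at most 40 nm per step, dividing the gap by 40 never overestimates the number of remaining steps, which is the condition A* needs to return a shortest path.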

Workflow Visualization

Protocol: Translating Textual Procedures to Executable Actions

This protocol describes a method for converting unstructured experimental procedures from scientific literature into a structured, automation-friendly sequence of actions using a deep-learning model [17].

Methodology

Data Preparation and Model Pre-training:

- Gather a large corpus of experimental procedures from patents or journals.

- Use a custom rule-based Natural Language Processing (NLP) approach to automatically generate structured action sequences from this text. This serves as a pre-training dataset [17].

- Pre-train a transformer-based sequence-to-sequence model on this generated data [17].

Model Refinement:

- A smaller set of experimental procedures is manually annotated by experts to create a high-quality validation and test dataset.

- The pre-trained model is further refined (fine-tuned) on this manually annotated data to improve its accuracy and reliability [17].

Prediction and Execution:

- Input a new, unseen experimental procedure written in prose to the trained model.

- The model translates the text into a sequence of structured synthesis actions (e.g., Add, Stir, Wash, Dry, Purify), each with its associated properties (e.g., duration, temperature, reagents) [17].

- This structured output can then be formatted into a script to be executed by a robotic synthesis platform, such as those using XDL (the Chemical Description Language) [17].
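
To make the idea of a structured action sequence concrete, here is a minimal sketch of how the model's output might be held in memory and rendered as a numbered script. The `SynthesisAction` class and the plain-text rendering are hypothetical; they are not the schema used in [17] and are not XDL.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Action names taken from the examples in the text; a real vocabulary would be larger.
ALLOWED_ACTIONS = {"Add", "Stir", "Wash", "Dry", "Purify"}


@dataclass
class SynthesisAction:
    name: str
    properties: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.name not in ALLOWED_ACTIONS:
            raise ValueError(f"unknown action: {self.name!r}")


def render_script(actions: List[SynthesisAction]) -> str:
    """Render actions as numbered lines with properties in alphabetical order."""
    lines = []
    for step, action in enumerate(actions, start=1):
        props = ", ".join(f"{key}={value}" for key, value in sorted(action.properties.items()))
        lines.append(f"{step}. {action.name}({props})")
    return "\n".join(lines)


procedure = [
    SynthesisAction("Add", {"reagent": "ethanol", "volume": "10 mL"}),
    SynthesisAction("Stir", {"duration": "30 min", "temperature": "25 C"}),
    SynthesisAction("Dry"),
]
print(render_script(procedure))
```

Validating action names at construction time catches malformed model output before it reaches any hardware-facing code.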

Performance and Validation

The efficacy of automated synthesis platforms is demonstrated by quantifiable gains in speed, reproducibility, and optimization efficiency.

- Optimization Efficiency: In one study, the A* algorithm comprehensively optimized synthesis parameters for multi-target Au nanorods over 735 experiments, and for Au nanospheres and Ag nanocubes in 50 experiments, and it searched more efficiently than the Optuna and Olympus optimization frameworks [12].

- Synthesis Reproducibility: Repetitive synthesis of Au nanorods under identical parameters showed high reproducibility, with deviations in the characteristic LSPR peak and FWHM of ≤1.1 nm and ≤2.9 nm, respectively [12].

- Text-to-Action Accuracy: The transformer model for extracting synthesis actions achieved a perfect (100%) match for 60.8% of sentences and a 90% match for 71.3% of sentences when predicting on a test set [17].

- Accelerated Discovery Timelines: Commercially, platforms like Onepot.AI report delivering new compounds with an average turnaround of 5 days, claiming to be up to 10 times faster than traditional methods [15]. Similarly, AI-driven drug discovery companies like Exscientia have reported designing clinical candidates in a fraction of the typical time [14].

The integration of specialized robotic hardware, sophisticated AI decision-making algorithms, and robust data integration frameworks creates a powerful ecosystem for autonomous chemical synthesis. The protocols outlined herein provide a concrete foundation for researchers to implement these technologies, thereby accelerating the discovery and development of novel materials and therapeutic molecules. As these platforms evolve, they promise to fundamentally reshape the scientific research landscape, shifting the researcher's role from manual executor to strategic director of the discovery process.

The traditional research paradigm in materials science and drug development, characterized by labor-intensive, trial-and-error synthesis, is undergoing a profound revolution [12] [1]. This transformation is driven by the convergence of artificial intelligence (AI), robotic platforms, and a structured, data-first approach to experimentation. This article details a standardized workflow that integrates the Design of Experiments (DOE) with AI-driven validation, creating a closed-loop system for accelerated and reproducible discovery. Framed within the broader thesis of automated synthesis, this protocol provides researchers with a detailed roadmap for implementing this next-generation research paradigm, moving from human-centric intuition to a system of material intelligence [1].

The Core Workflow: From Reading to Thinking

The revolutionary workflow can be conceptualized as a unified, automated cycle of three interlinked domains: data-guided rational design ("reading"), automation-enabled controllable synthesis ("doing"), and autonomy-facilitated inverse design ("thinking") [1]. This cycle is orchestrated through the seamless integration of AI decision-making and robotic execution.

Workflow Diagram

[Diagram not reproduced: the integrated, closed-loop workflow of an AI-driven experimental platform, from objective definition to validated results.]

Phase 1: Define & Design — The "Reading" Phase

This initial phase focuses on planning and leverages AI to mine existing knowledge, transforming it into a testable experimental design.

Protocol: Defining the Experiment and AI-Assisted Literature Mining

- 3.1.1 Define Purpose and Variables: Clearly articulate the goal (e.g., "optimize Au nanorod synthesis for LSPR peak at 800 nm"). Identify the response variables (e.g., LSPR peak, size, yield) and the factor variables (e.g., reagent concentration, temperature, reaction time) with their realistic high/low levels [20] [21].

- 3.1.2 AI-Powered Literature Synthesis:

- Database Construction: Crawl or access literature databases (e.g., Web of Science) using relevant keywords (e.g., "Au nanoparticle synthesis") [12].

- Text Processing: Use embedding models (e.g., Ada embedding model) to compress and parse papers into structured text, creating a vector database for efficient retrieval [12].

- Knowledge Query: Implement a large language model (LLM) like a Generative Pre-trained Transformer (GPT) to allow researchers to query the database in natural language. The model can retrieve synthesis methods, parameters, and summarize known relationships [12].

- 3.1.3 Generate Experimental Design: Based on the purpose and initial knowledge, select a DOE approach. A fractional factorial design is often used for screening many factors, while a response surface methodology (RSM) is suitable for optimization [20] [21]. The output is a design matrix specifying the factor combinations for each experimental run.
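
A design matrix of the kind described in 3.1.3 can be generated in a few lines. The sketch below builds a full two-level factorial and a half-fraction (2^(3-1), using the common generator C = A·B) for three factors. The factor names and levels are hypothetical placeholders, not values from [20] or [21].

```python
from itertools import product
from typing import Dict, List, Tuple

# Hypothetical factors with (low, high) levels.
FACTORS: Dict[str, Tuple[float, float]] = {
    "ctab_mM": (50.0, 100.0),
    "temperature_c": (25.0, 30.0),
    "time_min": (30.0, 60.0),
}


def full_factorial_coded(n_factors: int) -> List[Tuple[int, ...]]:
    """All combinations of -1/+1 for n two-level factors."""
    return list(product((-1, 1), repeat=n_factors))


def half_fraction_coded() -> List[Tuple[int, int, int]]:
    """2^(3-1) design: the third factor is set to the product of the first two (C = A*B)."""
    return [(a, b, a * b) for a, b in product((-1, 1), repeat=2)]


def decode(coded_runs, factors: Dict[str, Tuple[float, float]]) -> List[Dict[str, float]]:
    """Translate coded -1/+1 levels into real factor settings."""
    names = list(factors)
    return [
        {name: factors[name][0] if level < 0 else factors[name][1]
         for name, level in zip(names, run)}
        for run in coded_runs
    ]


screening_runs = decode(half_fraction_coded(), FACTORS)
for run in screening_runs:
    print(run)
```

The half-fraction halves the number of runs at the cost of confounding the third factor's main effect with the A×B interaction, which is usually acceptable for an initial screen.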

The Scientist's Toolkit: Research Reagent Solutions

Table 1: Essential Reagents and Materials for Automated Nanomaterial Synthesis

| Item | Function in Experiment | Example from Context |

|---|---|---|

| Metal Precursors (e.g., HAuCl₄, AgNO₃) | Source of metal atoms for nanoparticle formation. | Synthesis of Au, Ag, PdCu nanocages [12]. |

| Reducing Agents (e.g., NaBH₄, Ascorbic Acid) | Reduce metal ions to their zero-valent atomic state. | Critical for controlling nucleation and growth of Au NRs and NSs [12]. |

| Shape-Directing Surfactants (e.g., CTAB) | Bind selectively to crystal facets, guiding anisotropic growth into rods, cubes, etc. | Key factor for controlling morphology of Au NRs and Ag NCs [12]. |

| AI/DOE Software Platform | Plans experiments, analyzes data, and updates parameters via optimization algorithms. | GPT model for method retrieval; A* algorithm for closed-loop optimization [12]. |

| Automated Robotic Platform | Executes liquid handling, mixing, reaction quenching, and sample preparation. | PAL DHR system with Z-axis robotic arms, agitators, and a centrifuge module [12]. |

Phase 2: Execute & Analyze — The "Doing" Phase

This phase involves the robotic execution of the designed experiment and the subsequent analysis of the collected data.

Protocol: Automated Synthesis and Characterization

- 4.1.1 Robotic Platform Setup: Utilize a commercial automated platform like the PAL DHR system. Key modules include [12]:

- Z-axis robotic arms for liquid handling.

- Agitators for mixing reaction bottles.

- Centrifuge module for product separation.

- In-line characterization (e.g., UV-vis spectrometer).

- 4.1.2 Script Execution: The experimental steps generated from the "Reading" phase are converted into executable scripts (e.g., .mth or .pzm files). The robotic platform follows this script to perform tasks like reagent addition, vortexing, heating, and quenching with high reproducibility [12].

- 4.1.3 In-line Data Collection: After synthesis, the system automatically transfers samples to a characterization module. UV-vis spectroscopy is a common first-line technique for rapid analysis of optical properties like LSPR peaks [12].

Data Analysis and AI Optimization

- 4.2.1 Statistical Modeling: Fit a statistical model (e.g., multiple linear regression) to the experimental data. The analysis identifies which factors and interactions have a significant effect on the response [20].

- 4.2.2 AI-Driven Parameter Update: Instead of a human interpreting the results, an AI optimization algorithm takes the data and proposes the next set of parameters. The A* algorithm, a heuristic search method, has been shown to be highly efficient for this in a discrete parameter space, outperforming other methods like Bayesian optimization (Optuna) in specific synthesis tasks [12]. This creates the core feedback loop of the autonomous system.
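
As a small worked example of the statistical modeling in 4.2.1: for a balanced two-level design coded as -1/+1, the model columns are orthogonal, so the least-squares coefficients reduce to the average of each column multiplied by the response. The sketch below uses that shortcut; a general regression library would be needed for unbalanced or multi-level data. The response values are synthetic and the function name is hypothetical.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple


def fit_two_level_model(coded_runs: Sequence[Tuple[int, int]],
                        responses: Sequence[float]) -> Dict[str, float]:
    """Fit y = b0 + bA*A + bB*B + bAB*A*B on a balanced 2^2 design coded as -1/+1.

    Valid only when the design is orthogonal (e.g. a full or replicated full factorial).
    """
    n = len(coded_runs)
    columns: Dict[str, List[int]] = {
        "intercept": [1 for _ in coded_runs],
        "A": [a for a, _ in coded_runs],
        "B": [b for _, b in coded_runs],
        "A*B": [a * b for a, b in coded_runs],
    }
    return {term: sum(x * y for x, y in zip(col, responses)) / n
            for term, col in columns.items()}


runs = list(product((-1, 1), repeat=2))
# Synthetic responses generated from known coefficients so the fit can be checked.
y = [750.0 + 40.0 * a - 15.0 * b + 5.0 * a * b for a, b in runs]
print(fit_two_level_model(runs, y))
```

A large coefficient relative to the experimental noise marks a factor or interaction worth carrying into the optimization stage.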

Phase 3: Validate & Predict — The "Thinking" Phase

The final phase focuses on validating the optimized results and using the confirmed model for prediction and inverse design.

Protocol: Validation and Model Deployment

- 5.1.1 Confirmatory Runs: Conduct a small set of experiments at the optimized conditions predicted by the model to confirm performance. In automated platforms, reproducibility is high; for example, deviations in the LSPR peak of Au nanorods were ≤1.1 nm in repetitive tests [12].

- 5.1.2 Advanced Characterization: Perform targeted sampling for techniques like Transmission Electron Microscopy (TEM) to validate product morphology and size, providing ground-truth feedback on the synthesis outcome [12].

- 5.1.3 Prediction and Inverse Design: The validated model becomes a predictive tool. Researchers can now use it in an "inverse" manner: specifying a desired material property (e.g., an LSPR peak at 850 nm), and allowing the model to recommend the necessary synthesis parameters to achieve it [1].
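
One simple way to use a validated model "in reverse" is to scan the allowed parameter levels and return the setting whose predicted property is closest to the requested one. The sketch below does exactly that; the linear `toy_model` and the parameter names are hypothetical, and a real platform may use a more sophisticated inverse-design method than this exhaustive scan.

```python
from itertools import product
from typing import Callable, Dict, Sequence, Tuple


def inverse_design(
    model: Callable[[Dict[str, float]], float],
    levels: Dict[str, Sequence[float]],
    target: float,
) -> Tuple[Dict[str, float], float]:
    """Return the grid setting whose predicted response is closest to the target."""
    names = list(levels)
    best = min(
        product(*(levels[name] for name in names)),
        key=lambda combo: abs(model(dict(zip(names, combo))) - target),
    )
    settings = dict(zip(names, best))
    return settings, model(settings)


def toy_model(p: Dict[str, float]) -> float:
    """Hypothetical validated model: predicted LSPR peak (nm)."""
    return 600.0 + 80.0 * p["silver_nitrate_ml"] + 2.0 * (p["temperature_c"] - 20.0)


candidate_levels = {
    "silver_nitrate_ml": [1.0, 1.5, 2.0, 2.5, 3.0],
    "temperature_c": [20.0, 25.0, 30.0],
}
print(inverse_design(toy_model, candidate_levels, target=850.0))
```

The recommendation is only as trustworthy as the model inside the range it was validated on, so targets outside that range should still be confirmed experimentally.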

Case Study & Data Presentation

A referenced case study demonstrates the efficiency of this workflow. An AI-driven platform was tasked with comprehensively optimizing synthesis parameters for multi-target Au nanorods (Au NRs). The system employed the A* algorithm to navigate the parameter space [12].

Table 2: Performance Comparison of AI Optimization in Automated Synthesis [12]

| Nanomaterial Target | Key Response Variable | AI Algorithm Used | Number of Experiments | Result / Performance |

|---|---|---|---|---|

| Au Nanorods (Au NRs) | LSPR Peak (600-900 nm) | A* Algorithm | 735 | Comprehensive parameter optimization achieved. |

| Au Nanospheres (Au NSs) / Ag Nanocubes (Ag NCs) | Not Specified | A* Algorithm | 50 | Target synthesis achieved. |

| Au Nanorods | Reproducibility of LSPR | N/A (Validation) | N/A | Peak deviation ≤ 1.1 nm; FWHM deviation ≤ 2.9 nm. |

| Au Nanorods | Search Efficiency | A* vs. Optuna/Olympus | Significantly fewer iterations | A* algorithm required fewer experiments to converge. |

The integration of a standardized DOE-to-validation workflow within AI-driven robotic platforms represents a fundamental shift in research methodology. This "Workflow Revolution" replaces inefficient, manual processes with a closed-loop system of "reading-doing-thinking" [1]. It demonstrably accelerates discovery, enhances reproducibility, and enables the inverse design of materials—a critical capability for advancing fields from nanotechnology to drug development. As these platforms become more accessible and their reaction libraries expand [15], this standardized process is poised to become the new benchmark for scientific research and development.

Inside the Self-Driving Lab: Hardware, Algorithms, and Real-World Applications in Pharma and Nanotech

The integration of robotic platforms into chemical and pharmaceutical research represents a paradigm shift, enabling unprecedented levels of throughput, reproducibility, and efficiency in drug discovery and development. These systems form the core of autonomous laboratories, where artificial intelligence (AI) and automation create closed-loop design-make-test-analyze cycles [22]. By automating repetitive, time-consuming, or hazardous tasks, these platforms free researchers to focus on higher-level scientific reasoning and experimental design, thereby accelerating the journey from initial concept to clinical candidate [22] [23]. The operational and economic implications are significant, addressing the pharmaceutical industry's challenge of rising research and development expenditures against stagnant clinical success rates [24]. This document provides detailed application notes and protocols for the three predominant robotic architectures—batch reactors, microfluidic systems, and modular workstations—framed within the context of AI-driven, automated synthesis.

Comparative Analysis of Robotic Platform Architectures

The selection of an appropriate robotic architecture is critical for project success. Each platform type offers distinct advantages and is suited to specific stages of the research and development workflow. The table below provides a quantitative comparison of their core characteristics.

Table 1: Quantitative Comparison of Robotic Platform Architectures

| Platform Architecture | Typical Reaction Volume | Throughput (Experiments/Day) | Key Strengths | Common Applications |

|---|---|---|---|---|

| Modular Workstations (e.g., Chemspeed) | 1 mL - 100 mL [25] | Dozens to hundreds (configurable) [25] | High flexibility, modularity, and scalability; seamless software integration [25] [26] | Automated gravimetric solid dispensing, reaction screening, catalyst testing, synthesis optimization [25] |

| Batch Reactors | 5 mL - 250+ mL | Moderate to High (parallel arrays) | Well-established protocols, simple operation, easy sampling | Reaction optimization, method development, small-scale synthesis |

| Microfluidic Systems | µL - nL scale [27] | Very High (parallelized channels) [27] | Superior mass/heat transfer, minimal reagent use, fast reaction screening, precise parameter control [27] | High-throughput biocatalyst screening, process optimization, hazardous chemistry [27] |

Application Notes & Protocols

Modular Workstations: Chemspeed Platforms

Application Note: Chemspeed platforms exemplify the modular workstation architecture, designed for flexibility and scalability in automated synthesis and formulation [25]. Their core strength lies in the integration of base systems with a wide array of robotic tools, modules, reactors, and software, allowing a setup to be tailored to exact needs and to grow alongside research objectives [25]. A significant advancement in the accessibility and programmability of these systems is the development of Chemspyd, an open-source Python interface that enables dynamic communication with the Chemspeed platform [26]. This tool facilitates integration into higher-level, customizable AI-driven workflows and even allows for the creation of natural language interfaces using large language models [26].

Protocol: Automated Reaction Screening and Solid Dispensing on a Chemspeed Platform

Objective: To autonomously screen a set of catalytic reactions using precise, gravimetric solid and liquid dispensing.

Materials & Reagents:

- Chemspeed platform (e.g., CRYSTAL or larger series) equipped with:

- Gravimetric solid dispensing unit [25]

- Liquid handling arm with syringe pumps

- Robotic gripper

- Modular reactor block (e.g., for 4-16 parallel reactions)

- Integrated stirring and temperature control

- Candidate catalyst libraries (as solids)

- Substrate solutions

- Solvents

- Vials or reactors compatible with the platform's rack systems [25]

Procedure:

- Workflow Programming: Using the AUTOSUITE software or via the Chemspyd Python API, define the experimental workflow [25] [26]. The script should specify:

- The location of all reagents and catalysts in the platform's storage racks.

- The target mass for each solid catalyst and the volumes for all liquid components for each reaction vessel.

- Reaction parameters: temperature, stir speed, and duration.

- Any sampling or quenching steps.

- System Initialization: The platform initializes, with the gripper moving to calibrate its position. The solid dispensing unit and liquid handler are primed and calibrated.

- Vial Taring: The robotic gripper transports empty reaction vials to the integrated balance. The balance records the tare weight for each vial.

- Gravimetric Solid Dispensing: For each vial, the platform moves the solid dispensing unit to dispense the specified catalyst directly into the vial. The dispensing is monitored gravimetrically in real-time to ensure high precision [25].

- Liquid Handling: The liquid handling arm aspirates the required volumes of substrate solutions and solvents from source vials and dispenses them into the reaction vials.

- Reaction Initiation: The gripper places the sealed vials into the temperature-controlled reactor block. Stirring is initiated simultaneously across all reactions according to the programmed parameters.

- Process Monitoring & Sampling (Optional): If the platform is equipped with inline analytics (e.g., Raman probe), data is collected throughout the reaction. Alternatively, the robot can perform scheduled sampling by withdrawing aliquots for offline analysis.

- Reaction Quenching & Work-up: Upon completion, the robot adds a quenching solution to stop the reactions. The gripper may transport the vials to a purification module or prepare them for analysis.

- Data Digitalization: All experimental actions, including exact masses, liquid volumes, timestamps, and process data, are automatically recorded by the software, ensuring data integrity and reproducibility [25] [23].
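
The taring and gravimetric dispensing steps above amount to a simple bookkeeping check: dispensed mass is gross minus tare, compared against the target. The sketch below shows that check on logged values. It is a generic illustration, not AUTOSUITE or Chemspyd code; the record fields and the 5% tolerance are assumptions.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class DispenseRecord:
    vial_id: str
    target_mg: float
    tare_mg: float   # empty vial mass recorded during taring
    gross_mg: float  # vial mass after solid dispensing

    @property
    def dispensed_mg(self) -> float:
        return self.gross_mg - self.tare_mg

    def within_tolerance(self, rel_tol: float = 0.05) -> bool:
        """True if the dispensed mass is within rel_tol (fraction) of the target."""
        return abs(self.dispensed_mg - self.target_mg) <= rel_tol * self.target_mg


def flag_out_of_spec(records: Iterable[DispenseRecord], rel_tol: float = 0.05) -> List[str]:
    """Return the IDs of vials whose catalyst mass misses the target by more than rel_tol."""
    return [r.vial_id for r in records if not r.within_tolerance(rel_tol)]


log = [
    DispenseRecord("A1", target_mg=10.0, tare_mg=5000.0, gross_mg=5010.2),
    DispenseRecord("A2", target_mg=10.0, tare_mg=5000.0, gross_mg=5011.0),
]
print(flag_out_of_spec(log))
```

Flagged vials can then be excluded from analysis or re-dispensed, which keeps dosing errors from being mistaken for catalyst effects.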

Microfluidic Systems

Application Note: Microfluidic systems manipulate small sample volumes (µL to nL) in miniaturized channels and reactors, offering significant advantages for screening and process development [27]. The high surface-to-volume ratio enables exceptionally fast mass and heat transfer, allowing for precise control over reaction parameters and the safe execution of hazardous reactions. A modular approach to microfluidics, where different unit operations (e.g., reactor, dilution, inactivation) are on separate, interconnectable chips, provides maximum flexibility for building complex screening platforms tailored to specific biocatalytic or chemical processes [27].

Protocol: High-Throughput Biocatalyst Screening in a Modular Microfluidic Platform

Objective: To screen a library of enzyme variants for oxygen-dependent activity using a modular microfluidic system with integrated oxygen sensors.

Materials & Reagents:

- Modular microfluidic platform comprising [27]:

- Microreactor module with integrated oxygen sensors.

- Microfluidic dilution and quantification module compatible with electrochemical sensors.

- Module for continuous thermal inactivation of enzymes.

- Library of enzyme variants (whole cell or purified).

- Substrate solution.

- Buffer solutions.

- Calibration standards for oxygen and product.

Procedure:

- System Assembly & Calibration: Interconnect the microreactor, dilution, and inactivation modules using standardized fluidic fittings [27]. Calibrate the integrated oxygen sensors and any electrochemical sensors in the quantification module using standard solutions.

- Enzyme Loading & Reaction Initiation: The enzyme variant and substrate solutions are loaded into separate syringes and introduced into the microreactor module via precisely controlled pumps. The streams meet and mix within the microreactor channel.

- Continuous Monitoring: The dissolved oxygen concentration is monitored in real-time by the integrated oxygen sensors as the reaction proceeds. A decrease in the oxygen level serves as a proxy for enzyme activity in oxidation reactions [27].

- Controlled Inactivation: The reaction mixture flows from the reactor module to the thermal inactivation module. By precisely controlling the temperature and residence time in this module, the enzyme is irreversibly denatured, halting the reaction at a defined time point [27].

- Online Dilution & Quantification: The quenched reaction mixture may be automatically diluted in the dilution module to bring the product concentration within the detection range of the electrochemical sensor. The product is then quantified in the quantification module.

- Data Integration & Analysis: Oxygen consumption rates and product concentration data are streamed to a connected computer. Computational fluid dynamics (CFD) models can be coupled with the experimental data to gain deeper insight into reaction kinetics and system performance [27]. The data is analyzed to rank enzyme variants based on their activity.
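
The ranking step can be illustrated with a least-squares slope fitted to each oxygen trace: the steeper the decline in dissolved oxygen, the higher the apparent activity. This is a minimal sketch assuming an approximately linear initial phase; the variant names, units (µM, s), and values are made up for illustration.

```python
from typing import Dict, List, Sequence, Tuple


def slope(times: Sequence[float], values: Sequence[float]) -> float:
    """Ordinary least-squares slope of values against times."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    return sxy / sxx


def rank_variants(
    traces: Dict[str, Tuple[Sequence[float], Sequence[float]]]
) -> List[Tuple[str, float]]:
    """Rank variants by oxygen consumption rate (negative slope), highest first."""
    rates = {name: -slope(t, o2) for name, (t, o2) in traces.items()}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


t = [0.0, 10.0, 20.0, 30.0]  # seconds
traces = {
    "variant_a": (t, [250.0, 240.0, 230.0, 220.0]),  # dissolved O2 in uM
    "variant_b": (t, [250.0, 245.0, 240.0, 235.0]),
    "variant_c": (t, [250.0, 220.0, 190.0, 160.0]),
}
for name, rate in rank_variants(traces):
    print(name, rate)
```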

Batch Reactor Systems

Application Note: Automated batch reactor systems, often configured as parallel arrays, bring automation and high-throughput capabilities to traditional flask-based chemistry. They are particularly well-suited for reaction optimization and method development where varying parameters like temperature, pressure, and stir speed is required. These systems can function as standalone units or be integrated as specialized modules within larger robotic workstations.

Protocol: Automated Solvent and Temperature Screening in a Parallel Batch Reactor Array

Objective: To determine the optimal solvent and temperature conditions for a novel catalytic reaction.

Materials & Reagents:

- Parallel batch reactor system (e.g., 6-24 parallel vessels) with individual temperature and pressure control, and overhead stirring.

- Reagent stock solutions.

- Library of solvent candidates.

- Catalyst.

Procedure:

- Reactor Charging: The liquid handling robot or a fixed dispenser allocates a specified volume of each candidate solvent to the individual reactor vessels.

- Reagent & Catalyst Addition: A common reagent stock solution and catalyst are dispensed into each reactor.

- Sealing and Purging: The reactor block is sealed, and an inert atmosphere is established by purging with nitrogen or argon.

- Parameter Setting & Reaction Start: Each reactor is set to a specific temperature according to the experimental design. Stirring is initiated simultaneously across the array, marking time zero.

- Pressure Monitoring & Control: The system continuously monitors internal pressure in each vessel. If the pressure exceeds a safety threshold, a pressure release valve opens or the system automatically cools the offending reactor [23].

- Automated Sampling: At predetermined time points, the system automatically withdraws small aliquots from each reactor, depressurizing if necessary, and transfers them to analysis vials.

- Reaction Quenching & Work-up: After the set reaction time, the entire system is cooled. The robotic gripper transports the reaction vessels to a work-up station where quenching solutions may be added.

- Analysis & Data Reporting: The samples are analyzed by inline chromatography (e.g., UPLC) or prepared for offline analysis. Conversion and yield data for each condition are compiled into a report for analysis.
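
The screening plan and the pressure rule in this protocol can be expressed as two small functions. This is a hedged sketch: the vessel numbering, solvent list, temperatures, and the 10 bar limit are illustrative assumptions, and a real reactor controller would expose its own interface for setpoints and interlocks.

```python
from itertools import product
from typing import Dict, Sequence, Union

Condition = Dict[str, Union[str, float]]


def assign_conditions(solvents: Sequence[str],
                      temperatures_c: Sequence[float],
                      n_vessels: int) -> Dict[int, Condition]:
    """Map every solvent/temperature combination to a vessel position (1-based)."""
    conditions = list(product(solvents, temperatures_c))
    if len(conditions) > n_vessels:
        raise ValueError(f"{len(conditions)} conditions do not fit in {n_vessels} vessels")
    return {
        vessel: {"solvent": solvent, "temperature_c": temperature}
        for vessel, (solvent, temperature) in enumerate(conditions, start=1)
    }


def pressure_action(pressure_bar: float, limit_bar: float) -> str:
    """Decide what to do with one vessel given its current pressure reading."""
    return "vent_or_cool" if pressure_bar > limit_bar else "continue"


plan = assign_conditions(["toluene", "THF", "DMF"], [60.0, 80.0], n_vessels=6)
for vessel, condition in plan.items():
    print(vessel, condition)
print(pressure_action(12.5, limit_bar=10.0))
```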

Workflow Visualization with Graphviz Diagrams

Autonomous Discovery Workflow

Modular Microfluidic Screening

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Reagents and Materials for Automated Synthesis Platforms

| Item | Function & Application Note |

|---|---|

| Screen-Printed Electrochemical Sensors | Integrated into microfluidic dilution modules for online quantification of reaction products. Their modular design allows for easy replacement and re-use of the microfluidic platform [27]. |

| Tetramethyl N-methyliminodiacetic acid (TIDA) Boronate Esters | Function as automated building blocks in iterative cross-coupling synthesis machines. They enable the automated, robotic synthesis of diverse small molecules from commercial building blocks [28]. |

| Sodium N-(8-[2-hydroxybenzoyl]amino)caprylate (SNAC) | A permeability enhancer used in advanced formulations. In automated formulation platforms, it is dispensed with APIs like oral semaglutide to improve absorption and bioavailability [24]. |

| Fumaryl Diketopiperazine (FDKP) | A carrier molecule used in the automated preparation of inhalable dry powder formulations (e.g., for insulin). It stabilizes the API and forms effective microspheres for inhalation [24]. |

| Functionalized Resins | Used in automated solid-phase peptide synthesis (SPPS) and other polymer-supported reactions. The API or building block is attached to the resin, enabling automated pumping of reagents for sequential deprotection, acylation, and purification steps [28]. |

The integration of artificial intelligence (AI) with automated robotic platforms is revolutionizing research and development in fields ranging from nanomaterial synthesis to drug discovery. Traditional trial-and-error approaches are often inefficient, struggling to navigate vast experimental spaces and leading to suboptimal results. AI-driven autonomous laboratories address these challenges by closing the predict-make-measure discovery loop, dramatically accelerating the pace of innovation. Among the diverse AI methodologies available, three core algorithms have demonstrated particular efficacy for parameter search and optimization in experimental settings: Bayesian optimization, the A* search algorithm, and reinforcement learning. This article provides detailed application notes and protocols for implementing these algorithms within the context of automated synthesis platforms, serving as a practical guide for researchers and drug development professionals.

Algorithm Fundamentals & Comparative Analysis

The selection of an appropriate optimization algorithm depends on the nature of the parameter space, the cost of experimentation, and the specific objectives of the research. The table below summarizes the core characteristics, strengths, and ideal use cases for each algorithm.

Table 1: Core AI Algorithms for Parameter Optimization in Automated Synthesis

| Algorithm | Core Principle | Parameter Space | Key Strengths | Ideal Application Context |

|---|---|---|---|---|

| Bayesian Optimization [29] [30] | Uses probabilistic surrogate models and acquisition functions to balance exploration and exploitation. | Continuous | Highly sample-efficient; handles noisy data; provides uncertainty estimates. | Optimizing chemical formulations and reaction conditions with expensive experiments. |

| A* Search [31] | Guided graph search using a cost function and heuristic to navigate from start to goal. | Discrete | Finds an optimal path in a discrete space when the heuristic is admissible (never overestimates the remaining cost); highly efficient with a good heuristic. | Synthesizing nanomaterials with specific target properties from a set of known protocols. |

| Reinforcement Learning (RL) [32] [33] [34] | Agent learns a policy to maximize cumulative reward through environment interaction. | Both | Adapts to complex, sequential decision-making tasks; can learn entirely new strategies. | Designing novel drug molecules or optimizing multi-step synthesis processes. |
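
To show what "balancing exploration and exploitation" means for Bayesian optimization, the sketch below implements one common acquisition function, expected improvement, for a maximization problem. It assumes the surrogate model has already produced a mean and standard deviation for each candidate; the surrogate itself is not shown, and the candidate names and numbers are hypothetical.

```python
import math
from typing import Dict, Tuple


def expected_improvement(mean: float, std: float, best_so_far: float, xi: float = 0.0) -> float:
    """Expected improvement over best_so_far for a Gaussian prediction (maximization)."""
    improvement = mean - best_so_far - xi
    if std <= 0.0:
        return max(improvement, 0.0)  # no uncertainty: only a sure gain counts
    z = improvement / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))           # standard normal CDF
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)    # standard normal PDF
    return improvement * cdf + std * pdf


def pick_next(candidates: Dict[str, Tuple[float, float]], best_so_far: float) -> str:
    """Choose the candidate (name -> (mean, std)) with the highest expected improvement."""
    return max(candidates,
               key=lambda name: expected_improvement(*candidates[name], best_so_far))


# Candidate A matches the best yield so far with little uncertainty (exploitation);
# candidate B predicts slightly worse but is far more uncertain (exploration).
surrogate_predictions = {"A": (0.80, 0.01), "B": (0.78, 0.10)}
print(pick_next(surrogate_predictions, best_so_far=0.80))
```

Here the uncertain candidate wins because its chance of a large gain outweighs its slightly lower predicted mean, which is the behaviour that makes the approach sample-efficient when experiments are expensive.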

Quantitative Performance Comparison

In practical applications, these algorithms demonstrate significant performance improvements over traditional methods. The following table summarizes quantitative results from published studies.

Table 2: Documented Algorithm Performance in Research Applications

| Algorithm | Application Context | Reported Performance | Comparative Benchmark |

|---|---|---|---|

| A* [31] | Optimization of Au nanorods (Au NRs) and other nanomaterials. | Comprehensive optimization achieved in 735 experiments for Au NRs; Au NSs/Ag NCs in 50 experiments. | Outperformed Optuna and Olympus in search efficiency, requiring significantly fewer iterations. |

| Bayesian Optimization [29] | Vaccine formulation development for live-attenuated viruses. | Model predictions showed high R² and low root mean square errors, confirming reliability for stability attributes. | Outperformed labor-intensive "trial and error" and traditional Design of Experiments (DoE) approaches. |

| Bayesian Active Learning [30] | Large-scale combination drug screening (BATCHIE platform). | Accurately predicted unseen combinations and detected synergies after exploring only 4% of the 1.4M possible experiments. | Outperformed fixed experimental designs in retrospective simulations, better prioritizing effective combinations. |

Detailed Experimental Protocols

Protocol 1: A* Algorithm for Nanomaterial Synthesis

This protocol is adapted from an automated platform that synthesizes metallic nanoparticles (Au, Ag, Cu₂O, PdCu) with controlled properties [31].

1. Research Reagent Solutions & Materials

Table 3: Essential Reagents for Robotic Nanomaterial Synthesis

| Item | Function / Explanation |

|---|---|

| HAuCl₄ (Gold Salt) | Primary precursor for gold nanoparticle synthesis. |

| CTAB (Surfactant) | Structure-directing agent that controls nanoparticle morphology. |

| AgNO₃ | Modifies crystal growth habit, crucial for nanorod formation. |

| NaBH₄ | A strong reducing agent used to form initial gold seed nanoparticles. |

| Ascorbic Acid | A mild reducing agent that facilitates the growth of seeds into nanorods. |

2. Equipment Setup

- Robotic Platform: A commercially available platform such as the "Prep and Load" (PAL) system with Z-axis robotic arms, agitators, a centrifuge module, and a UV-vis spectrometer for in-situ characterization [31] [35].

- Software Interface: The platform is controlled via executable files (e.g., .mth or .pzm), which users can edit without extensive programming skills to define experimental steps.

3. Workflow Diagram

4. Procedure

- Step 1: Target Definition. Input the target nanomaterial property into the system (e.g., a Longitudinal Surface Plasmon Resonance (LSPR) peak between 600 and 900 nm for Au nanorods).

- Step 2: Initial Parameter Generation. Use the integrated GPT model to mine existing literature and generate a set of initial synthesis parameters and methods [31].

- Step 3: Robotic Experiment Execution. The robotic platform executes the synthesis protocol. Key steps include:

- Liquid handling operations to mix reagents in specific sequences.

- Incubation on agitators for controlled reaction times.

- In-situ characterization via UV-vis spectroscopy to measure the LSPR peak.

- Step 4: Data Integration. The system automatically uploads the synthesis parameters and corresponding UV-vis data to a specified location.

- Step 5: A* Algorithm Optimization. The A* algorithm processes the results to propose a new set of parameters. It uses a heuristic to guide the search through the discrete parameter space (e.g., concentrations of CTAB, AgNO₃, ascorbic acid) towards the target property [31].

- Step 6: Iteration. Steps 3-5 are repeated in a closed loop until the synthesized nanoparticles meet the target property specifications. The system reported high reproducibility, with deviations in the characteristic LSPR peak ≤1.1 nm under identical parameters [31].
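The closed loop in Steps 3-6 can be sketched as an A* search over a discrete parameter grid. This is a minimal illustration, not the platform's implementation: the reagent levels, the `simulated_lspr` function (a toy stand-in for a robotic synthesis plus UV-vis measurement), the 5 nm tolerance, and the `nm_per_step` scaling are all assumptions introduced here. In this toy model a single grid step shifts the peak by at most 40 nm, so the heuristic never overestimates the remaining steps.

```python
import heapq
from itertools import count

# Hypothetical discrete levels for three reagents (arbitrary units, not from the source).
LEVELS = {
    "ctab": [0.05, 0.10, 0.15, 0.20],
    "agno3": [0.02, 0.04, 0.06, 0.08, 0.10],
    "ascorbic_acid": [0.4, 0.6, 0.8, 1.0],
}
NAMES = list(LEVELS)


def simulated_lspr(node):
    """Toy stand-in for one robotic synthesis followed by a UV-vis measurement."""
    ctab, agno3, aa = (LEVELS[n][i] for n, i in zip(NAMES, node))
    return 600.0 + 2000.0 * agno3 + 100.0 * ctab - 50.0 * aa


def neighbors(node):
    """Yield nodes that differ by one level in exactly one parameter."""
    for dim, name in enumerate(NAMES):
        for step in (-1, 1):
            idx = node[dim] + step
            if 0 <= idx < len(LEVELS[name]):
                yield node[:dim] + (idx,) + node[dim + 1:]


def a_star(start, target_nm, tol_nm=5.0, nm_per_step=40.0):
    """Search the grid for a node whose measured LSPR is within tol_nm of the target."""
    measured = {}  # cache so each parameter set is "synthesized" only once

    def measure(node):
        if node not in measured:
            measured[node] = simulated_lspr(node)
        return measured[node]

    def h(node):
        # Heuristic: lower bound on the grid steps still needed to reach the tolerance band.
        return max(0.0, abs(measure(node) - target_nm) - tol_nm) / nm_per_step

    tie = count()  # tie-breaker so the heap never compares nodes directly
    frontier = [(h(start), next(tie), 0, start)]
    best_g = {start: 0}
    while frontier:
        _, _, g, node = heapq.heappop(frontier)
        if abs(measure(node) - target_nm) <= tol_nm:
            return node, measure(node), len(measured)
        for nxt in neighbors(node):
            if g + 1 < best_g.get(nxt, float("inf")):
                best_g[nxt] = g + 1
                heapq.heappush(frontier, (g + 1 + h(nxt), next(tie), g + 1, nxt))
    return None, None, len(measured)


node, peak, n_experiments = a_star(start=(0, 0, 0), target_nm=750.0)
```

Here the path cost is simply the number of parameter changes from the starting recipe. A real deployment would replace `simulated_lspr` with the robot and spectrometer, and would likely need a heuristic tuned to the chemistry rather than a fixed scaling.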

Protocol 2: Bayesian Optimization for Vaccine Formulation

This protocol is based on a proof-of-concept study that used Bayesian optimization to develop stable vaccine formulations for live-attenuated viruses [29].

1. Research Reagent Solutions & Materials

Table 4: Key Components for Vaccine Formulation Screening

| Item | Function / Explanation |

|---|---|

| Live-attenuated Virus | The vaccine candidate whose stability is being optimized. |

| Excipients (Sugars, Amino Acids, Polymers) | Stabilizing agents that protect the viral structure during storage or freeze-drying. |

| rHSA (Recombinant Human Serum Albumin) | A common protein excipient that stabilizes live-attenuated viruses. |

2. Equipment Setup

- Stability Chambers: For incubating formulations at elevated temperatures (e.g., 37°C) to accelerate stability studies.

- Analytical Instruments: Instruments for measuring Critical Quality Attributes (CQAs), such as infectious titer (for liquid forms) or glass transition temperature, Tg' (for freeze-dried forms).

3. Workflow Diagram

4. Procedure

- Step 1: Problem Formulation. Define the optimization objective and constraints. For a liquid vaccine (Case Study 1), the objective could be to minimize infectious titer loss after one week at 37°C. For a freeze-dried vaccine (Case Study 2), the objective could be to maximize the glass transition temperature (Tg') [29].

- Step 2: Initial Design of Experiments (DoE). Execute a small, space-filling initial set of experiments (e.g., varying excipient types and concentrations) to gather preliminary data.

- Step 3: Model Initialization. Use the initial data to train a Gaussian Process (GP) model, which serves as a probabilistic surrogate for the unknown response landscape.

- Step 4: Iterative Optimization Loop. For each subsequent batch:

- Step 4a: Suggestion. The acquisition function (e.g., Expected Improvement) uses the GP model's predictions and uncertainty to propose the next most informative experiment.

- Step 4b: Execution. Conduct the proposed experiment and accurately measure the CQA.

- Step 4c: Update. Augment the training data with the new result and update the GP model.

- Step 5: Termination and Validation. The loop continues until the model converges or the experimental budget is exhausted. The final optimal formulation predicted by the model should be validated with confirmatory experiments.
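Steps 2-5 can be sketched with a small NumPy-only Gaussian Process and an Expected Improvement acquisition function. This is an illustrative sketch under stated assumptions, not the cited study's code: `measure_titer_loss` is a hypothetical stand-in for the stability assay, the two inputs (scaled sugar and protein excipient concentrations) and the kernel length scale are invented for the example, and a random initial design substitutes for a proper space-filling design.

```python
import math
import numpy as np

rng = np.random.default_rng(0)


def measure_titer_loss(x):
    """Hypothetical assay: titer loss for scaled (sugar, protein) levels; lower is better."""
    sugar, protein = x
    return (sugar - 0.7) ** 2 + (protein - 0.3) ** 2 + 0.01 * rng.normal()


def rbf(a, b, length=0.3):
    """Squared-exponential kernel between two sets of points."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / length**2)


def gp_posterior(x_train, y_train, x_test, noise=1e-3):
    """Posterior mean and standard deviation of a GP fitted to standardized targets."""
    y_mean, y_std = y_train.mean(), y_train.std() or 1.0
    y_norm = (y_train - y_mean) / y_std
    k = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    k_s = rbf(x_train, x_test)
    mu = k_s.T @ np.linalg.solve(k, y_norm)
    var = 1.0 - np.einsum("ij,ij->j", k_s, np.linalg.solve(k, k_s))
    return y_mean + y_std * mu, y_std * np.sqrt(np.clip(var, 1e-12, None))


def expected_improvement(mu, sigma, best):
    """Expected Improvement for a minimization problem."""
    z = (best - mu) / sigma
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return (best - mu) * cdf + sigma * pdf


# Candidate formulations on a 21 x 21 grid of scaled concentrations.
grid = np.array([[s, p] for s in np.linspace(0, 1, 21) for p in np.linspace(0, 1, 21)])

# Step 2: small initial design (random here, as a stand-in for a space-filling design).
x_obs = rng.uniform(0, 1, size=(5, 2))
y_obs = np.array([measure_titer_loss(x) for x in x_obs])
initial_best = y_obs.min()

for _ in range(15):  # Step 4: fixed experimental budget
    mu, sigma = gp_posterior(x_obs, y_obs, grid)        # Steps 3 and 4c: (re)fit surrogate
    ei = expected_improvement(mu, sigma, y_obs.min())   # Step 4a: score candidates
    x_next = grid[np.argmax(ei)]
    y_next = measure_titer_loss(x_next)                 # Step 4b: run the experiment
    x_obs = np.vstack([x_obs, x_next])
    y_obs = np.append(y_obs, y_next)

best_x = x_obs[np.argmin(y_obs)]  # Step 5: candidate for confirmatory experiments
```

The loop stops on a fixed budget for simplicity. In practice, a maintained library with hyperparameter fitting would usually be preferable to this hand-rolled surrogate.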

Protocol 3: Reinforcement Learning for Drug-Target Affinity Prediction

This protocol outlines the use of the Adaptive-DTA framework, which employs Reinforcement Learning (RL) to automate the design of graph neural networks for predicting drug-target affinity (DTA) [34].

1. Research Reagent Solutions & Materials

Table 5: Computational Resources for RL-based DTA Prediction

| Item | Function / Explanation |

|---|---|

| Benchmark Datasets (Davis, KIBA, BindingDB) | Curated datasets containing known drug-target pairs and their binding affinities (Kd, KIBA scores) for model training and validation. |

| Computational Environment | High-performance computing resources with GPUs to handle the intensive search and training processes. |

| Molecular Representation | Software to represent drugs and targets as graphs or sequences, which serve as the input for the neural network. |

2. Equipment Setup

- Software Framework: The Adaptive-DTA framework is implemented in a deep learning environment (e.g., Python with PyTorch/TensorFlow).

- Search Space Definition: The framework defines a search space of possible Graph Neural Network (GNN) architectures using a Directed Acyclic Graph (DAG).

3. Workflow Diagram

4. Procedure

- Step 1: Problem Formulation. Define the goal: to automatically find a GNN architecture that achieves high predictive accuracy on a DTA benchmark dataset.

- Step 2: Search Space Definition. Construct a flexible search space based on a Directed Acyclic Graph (DAG), which includes various operations and connection patterns for potential GNN layers [34].

- Step 3: RL-Guided Search. The core loop involves:

- Step 3a: Action. The RL agent (with a policy network) samples a new GNN architecture from the search space.

- Step 3b: Training and Evaluation. The sampled architecture is trained on the training set and its performance is evaluated on a validation set.

- Step 3c: Reward. The performance metric (e.g., Concordance Index) is used as a reward signal.

- Step 3d: Policy Update. The agent's policy is updated using the REINFORCE algorithm or another policy gradient method to maximize the expected reward, making it more likely to propose high-performing architectures in the future [34].

- Step 4: Model Selection and Deployment. After the search concludes, the best-performing architecture identified during the search is retrained and can be deployed for predicting affinities of novel drug-target pairs.
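The search loop in Step 3 can be illustrated with a toy REINFORCE implementation. This is a sketch under strong simplifications, not the Adaptive-DTA code: the search space is reduced to one operation per layer slot instead of a full DAG, the policy is a table of independent logits instead of a policy network, and `evaluate_architecture` is a hypothetical stand-in that returns a made-up score where a real run would train the sampled GNN and compute the Concordance Index on validation data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical search space: one operation per layer slot (labels are illustrative).
OPS = ["gcn", "gat", "gin", "skip"]
N_SLOTS = 3


def evaluate_architecture(arch):
    """Stand-in for 'train the sampled GNN, then score it on the validation set'."""
    bonus = {"gcn": 0.05, "gat": 0.10, "gin": 0.08, "skip": 0.0}
    return 0.6 + sum(bonus[OPS[i]] for i in arch) + 0.01 * rng.normal()


def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for numerical stability."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


logits = np.zeros((N_SLOTS, len(OPS)))  # policy parameters, one row per slot
learning_rate = 0.5
baseline = 0.0
best_arch, best_reward = None, -np.inf

for step in range(300):
    probs = softmax(logits)
    # Step 3a: sample an architecture from the current policy.
    arch = [int(rng.choice(len(OPS), p=probs[s])) for s in range(N_SLOTS)]
    # Steps 3b and 3c: evaluate it and use the score as the reward.
    reward = evaluate_architecture(arch)
    if reward > best_reward:
        best_arch, best_reward = arch, reward
    # Moving-average baseline to reduce the variance of the gradient estimate.
    if step == 0:
        baseline = reward
    advantage = reward - baseline
    baseline = 0.9 * baseline + 0.1 * reward
    # Step 3d: REINFORCE update; grad of log pi for a categorical is onehot - probs.
    for s, a in enumerate(arch):
        grad = -probs[s]
        grad[a] += 1.0
        logits[s] += learning_rate * advantage * grad

final_probs = softmax(logits)
best_ops = [OPS[i] for i in best_arch]  # Step 4: architecture to retrain and deploy
```

Because every reward in a real search costs a full training run, practical systems typically add weight sharing or early stopping; none of that is modeled here.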

Application Note

Astellas Pharma's "Human-in-the-Loop" drug discovery platform represents a transformative approach to small-molecule synthesis, integrating artificial intelligence (AI), robotics, and researcher expertise into a single, cohesive system. This platform was developed to address the profound inefficiencies of traditional drug discovery, a process that typically spans 9 to 16 years with a success rate for small molecules as low as 1 in 23,000 compounds in Japan [36]. By creating a closed-loop system where AI designs compounds and robotic platforms execute their synthesis, Astellas has demonstrated a capability to reduce the hit-to-lead optimization timeline by approximately 70% compared to traditional methods [36]. This acceleration allows the company to deliver greater value to patients faster and has already resulted in an AI-designed, robot-synthesized compound advancing to clinical trials [36].

The platform's core innovation lies in its "Human-in-the-Loop" architecture, which strategically balances automation with human oversight. Researchers delegate repetitive tasks to AI and robotics, such as data collection and research material preparation, freeing up their time for creative problem-solving and deriving deeper insights from experimental results [36]. This integration was key to overcoming initial researcher skepticism and has led to unexpected discoveries, with the AI identifying promising compounds that might have been overlooked using traditional selection methods [36].

Key Performance Data and Outcomes

The table below summarizes the key quantitative outcomes from the implementation of Astellas's AI-driven platform.

Table 1: Key Performance Metrics of Astellas's AI-Driven Drug Discovery Platform

| Metric | Traditional Workflow | Astellas AI-Driven Platform | Improvement/Outcome |

|---|---|---|---|

| Hit-to-Lead Optimization Time | Baseline | ~70% reduction | Accelerated timeline [36] |

| Clinical Trial Milestone | 4-5 years | 12 months (for one molecule) | Record time to trial [36] |

| Researcher Workload | High manual effort | Significant reduction | Automation of data collection and compound synthesis [36] |

| Compound Identification | Traditional selection methods | AI identifies novel, promising compounds | Unexpected discoveries with high efficacy potential [36] |

Experimental Protocol

This protocol details the operational workflow for a single, automated Design-Make-Test-Analyze (DMTA) cycle within the Astellas "Human-in-the-Loop" platform.

Stage 1: AI-Driven Compound Design and Prioritization

Objective: To generate and prioritize novel small-molecule compounds with optimized properties for a defined therapeutic target.

Procedure:

- Target Input and Constraint Definition: Researchers define the target product profile, including desired potency, selectivity, solubility, and ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) properties. This human input sets the strategic direction [36].

- In Silico Compound Generation: The platform's AI, leveraging techniques such as reinforcement learning, generates thousands of novel virtual compound structures. These algorithms are trained to optimize molecular structures against the defined constraints, balancing multiple pharmacological properties simultaneously [37] [38].

- Synthetic Feasibility Assessment: In parallel, the platform predicts synthetic pathways for the top-ranked generated compounds. The AI consults integrated chemical knowledge bases and retrosynthetic tools (e.g., tools akin to SYNTHIA [39]) to rank compounds by the ease and efficiency of their synthesis.

- Researcher-in-the-Loop Review: The platform presents the prioritized list of AI-designed compounds, along with their predicted properties and synthetic routes, in a clear format to the research team. The scientist provides final approval, selecting the compounds for synthesis based on their expert judgment [36].
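One simple way to picture the prioritization and review steps is a weighted score over predicted properties, a feasibility cut-off, and an explicit human approval gate. The sketch below is purely illustrative: the property scales, weights, threshold, and candidate values are assumptions made up for the example and do not describe how the Astellas platform scores compounds.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    potency: float            # all properties are predicted values scaled to 0-1,
    selectivity: float        # where higher is better (an assumption for this sketch)
    solubility: float
    admet: float
    synth_feasibility: float


# Hypothetical weights reflecting a target product profile; they sum to 1.
WEIGHTS = {
    "potency": 0.30,
    "selectivity": 0.20,
    "solubility": 0.15,
    "admet": 0.20,
    "synth_feasibility": 0.15,
}


def score(candidate):
    """Weighted sum of the scaled property predictions."""
    return sum(weight * getattr(candidate, prop) for prop, weight in WEIGHTS.items())


def prioritize(candidates, min_feasibility=0.3, top_k=2):
    """Drop compounds that look too hard to make, then rank the rest by score."""
    feasible = [c for c in candidates if c.synth_feasibility >= min_feasibility]
    return sorted(feasible, key=score, reverse=True)[:top_k]


def researcher_review(shortlist, approved_names):
    """Human-in-the-loop gate: only compounds a scientist approves go to synthesis."""
    return [c for c in shortlist if c.name in approved_names]


candidates = [
    Candidate("cmpd-A", 0.90, 0.80, 0.50, 0.60, 0.70),
    Candidate("cmpd-B", 0.95, 0.90, 0.60, 0.70, 0.20),  # potent but hard to synthesize
    Candidate("cmpd-C", 0.60, 0.70, 0.90, 0.80, 0.90),
    Candidate("cmpd-D", 0.40, 0.50, 0.60, 0.50, 0.95),
]

shortlist = prioritize(candidates)
approved = researcher_review(shortlist, approved_names={"cmpd-A"})
```

Keeping the approval step as a separate function mirrors the point made above: the ranking informs the decision, while the scientist makes it.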

Stage 2: Robotic Synthesis and Purification

Objective: To automate the physical synthesis and purification of the AI-designed compounds.

Procedure:

- Workflow Scripting: The approved synthesis plan is translated into an automated operation script (e.g., an .mth or .pzm file). This script contains machine-readable commands for the robotic platform [12].

- Automated Liquid Handling and Reaction Execution:

- A robotic platform (e.g., a system analogous to the "Prep and Load" or PAL system [12]) executes the script.

- Z-axis robotic arms perform liquid handling, transferring reagents and solvents from a solution module to reaction vials.

- Reaction vials are transported to agitator modules for mixing under controlled temperature and duration.

- Reaction Monitoring and Purification: The platform incorporates inline monitoring techniques, such as infrared (IR) spectroscopy or thin-layer chromatography (TLC) [28], to track reaction progress. Subsequently, integrated purification modules, such as centrifuges or fast-wash systems, isolate the final products [12].
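To make the idea of a machine-readable operation script concrete, the sketch below flattens a synthesis plan into an ordered list of liquid-handling and agitation commands. The platform's actual script format is not described here, so the JSON layout, the field names (`op`, `volume_ul`, `temp_c`, and so on), and the example plan are all hypothetical.

```python
import json


def plan_to_commands(plan):
    """Flatten a synthesis plan into an ordered list of robot commands."""
    commands = []
    for step in plan:
        # One transfer command per reagent addition, in the order given.
        for reagent, volume_ul in step["additions"].items():
            if volume_ul <= 0:
                raise ValueError(f"non-positive volume for {reagent}")
            commands.append(
                {"op": "transfer", "reagent": reagent,
                 "volume_ul": volume_ul, "dest": step["vial"]}
            )
        # Then move the vial to an agitator for the specified time and temperature.
        commands.append(
            {"op": "agitate", "vial": step["vial"],
             "temp_c": step["temp_c"], "minutes": step["minutes"]}
        )
    return commands


# Hypothetical one-step plan; reagent names and quantities are placeholders.
plan = [
    {"vial": "V1", "additions": {"reagent_a": 200, "solvent": 800},
     "temp_c": 25, "minutes": 30},
]

commands = plan_to_commands(plan)
script_text = json.dumps(commands, indent=2)  # serialized form a controller could read
```

Validating volumes before anything is serialized is a small example of the checks that help catch planning errors before they reach the hardware.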

Stage 3: Automated Bioactivity and Property Testing

Objective: To characterize the synthesized compounds for target engagement and pharmacological properties.

Procedure:

- High-Throughput Screening: The robotic system prepares diluted samples of the synthesized compounds for bioactivity assays.

- Target Engagement Validation: Assays such as the Cellular Thermal Shift Assay (CETSA) are used to confirm direct binding of the compound to the intended target in a physiologically relevant cellular environment [40].